[CV-Paper 12] Image Segmentation 01: FCN-2014

Citations: 14541

Year: 2014

顾名思义,fully convolutional networks 就是全卷积网络,那么它与传统的神经网络架构有什么区别?

- 没有全连接层,只有卷积层,有时还有池化层组成;

- 输入图像,输出也是图像,而不是分类,因为输出层是卷积层。

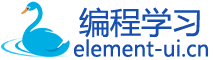

看一下分类网络和FCN分割的对比。

这么做有什么优势?引用Quora上的一个回答1。

Input image size: If you don’t have any fully connected layer in your network, you can apply the network to images of virtually any size. Because only the fully connected layer expects inputs of a certain size, which is why in architectures like AlexNet, you must provide input images of a certain size (224x224).

Spatial information: Fully connected layer generally causes loss of spatial information - because its “fully connected”: all output neurons are connected to all input neurons. This kind of architecture can’t be used for segmentation, if you are working in a huge space of possibilities (e.g. unconstrained real images [1]). Although fully connected layers can still do segmentation if you are restricted to a relatively smaller space e.g. a handful of object categories with limited visual variation, such that the FC activations may act as a sufficient statistic for those images [2,3]. In the latter case, the FC activations are enough to encode both the object type and its spatial arrangement. Whether one or the other happens depends upon the capacity of the FC layer as well as the loss function.

Computational cost and representation power: There is also a distinction in terms of compute vs storage between convolutional layers and fully connected layers that I am a bit confused about. For instance, in AlexNet the convolutional layers comprised of 90% of the weights (~representational capacity) but contributed only to 10% of the computation; and the remaining (10% weights => less representation power, 90% computation) was eaten up by fully connected layers. Thus usually researchers are beginning to favor having a greater number of convolutional layers, tending towards fully convolutional networks for everything.

A second answer²:

Fully convolutional indicates that the neural network is composed of convolutional layers without any fully-connected layers or MLP usually found at the end of the network. A CNN with fully connected layers is just as end-to-end learnable as a fully convolutional one. The main difference is that the fully convolutional net is learning filters everywhere. Even the decision-making layers at the end of the network are filters.

A fully convolutional net tries to learn representations and make decisions based on local spatial input. Appending a fully connected layer enables the network to learn something using global information where the spatial arrangement of the input falls away and need not apply.

Table of Contents

- Fully Convolutional Networks for Semantic Segmentation

- Abstract

- 1. Introduction

- 2. Related work

- 3. Fully convolutional networks

- 3.1. Adapting classifiers for dense prediction

- 3.2. Shift-and-stitch is filter rarefaction

- 3.3. Upsampling is backwards strided convolution

- 3.4. Patchwise training is loss sampling

- 4. Segmentation Architecture

- 4.1. From classifier to dense FCN

- 4.2. Combining what and where

- 4.3. Experimental framework

- 5. Results

- 6. Conclusion

- A. Upper Bounds on IU

- B. More Results

- Changelog

Fully Convolutional Networks for Semantic Segmentation

Abstract

Convolutional networks are powerful visual models that yield hierarchies of features. We show that convolutional networks by themselves, trained end-to-end, pixels-to-pixels, exceed the state-of-the-art in semantic segmentation. Our key insight is to build “fully convolutional” networks that take input of arbitrary size and produce correspondingly-sized output with efficient inference and learning. We define and detail the space of fully convolutional networks, explain their application to spatially dense prediction tasks, and draw connections to prior models. We adapt contemporary classification networks (AlexNet [22], the VGG net [34], and GoogLeNet [35]) into fully convolutional networks and transfer their learned representations by fine-tuning [5] to the segmentation task. We then define a skip architecture that combines semantic information from a deep, coarse layer with appearance information from a shallow, fine layer to produce accurate and detailed segmentations. Our fully convolutional network achieves state-of-the-art segmentation of PASCAL VOC (20% relative improvement to 62.2% mean IU on 2012), NYUDv2, and SIFT Flow, while inference takes less than one fifth of a second for a typical image.

1. Introduction

Convolutional networks are driving advances in recognition. Convnets are not only improving for whole-image classification [19, 31, 32], but also making progress on local tasks with structured output. These include advances in bounding box object detection [29, 12, 17], part and keypoint prediction [39, 24], and local correspondence [24, 9].

The natural next step in the progression from coarse to fine inference is to make a prediction at every pixel. Prior approaches have used convnets for semantic segmentation [27, 2, 8, 28, 16, 14, 11], in which each pixel is labeled with the class of its enclosing object or region, but with shortcomings that this work addresses.

We show that a fully convolutional network (FCN), trained end-to-end, pixels-to-pixels on semantic segmentation exceeds the state-of-the-art without further machinery. To our knowledge, this is the first work to train FCNs end-to-end (1) for pixelwise prediction and (2) from supervised pre-training. Fully convolutional versions of existing networks predict dense outputs from arbitrary-sized inputs. Both learning and inference are performed whole-image-at-a-time by dense feedforward computation and backpropagation. In-network upsampling layers enable pixelwise prediction and learning in nets with subsampled pooling.

This method is efficient, both asymptotically and absolutely, and precludes the need for the complications in other works. Patchwise training is common [27, 2, 8, 28, 11], but lacks the efficiency of fully convolutional training. Our approach does not make use of pre- and post-processing complications, including superpixels [8, 16], proposals [16, 14], or post-hoc refinement by random fields or local classifiers [8, 16]. Our model transfers recent success in classification [19, 31, 32] to dense prediction by reinterpreting classification nets as fully convolutional and fine-tuning from their learned representations. In contrast, previous works have applied small convnets without supervised pre-training [8, 28, 27].

Semantic segmentation faces an inherent tension between semantics and location: global information resolves what while local information resolves where. Deep feature hierarchies jointly encode location and semantics in a local-to-global pyramid. We define a novel “skip” architecture to combine deep, coarse, semantic information and shallow, fine, appearance information in Section 4.2 (see Figure 3).

In the next section, we review related work on deep classification nets, FCNs, and recent approaches to semantic segmentation using convnets. The following sections explain FCN design and dense prediction tradeoffs, introduce our architecture with in-network upsampling and multilayer combinations, and describe our experimental framework. Finally, we demonstrate state-of-the-art results on PASCAL VOC 2011-2, NYUDv2, and SIFT Flow.

2. Related work

Our approach draws on recent successes of deep nets for image classification [19, 31, 32] and transfer learning [4, 38]. Transfer was first demonstrated on various visual recognition tasks [4, 38], then on detection, and on both instance and semantic segmentation in hybrid proposal classifier models [12, 16, 14]. We now re-architect and finetune classification nets to direct, dense prediction of semantic segmentation. We chart the space of FCNs and situate prior models, both historical and recent, in this framework.

Fully convolutional networks. To our knowledge, the idea of extending a convnet to arbitrary-sized inputs first appeared in Matan et al. [25], which extended the classic LeNet [21] to recognize strings of digits. Because their net was limited to one-dimensional input strings, Matan et al. used Viterbi decoding to obtain their outputs. Wolf and Platt [37] expand convnet outputs to 2-dimensional maps of detection scores for the four corners of postal address blocks. Both of these historical works do inference and learning fully convolutionally for detection. Ning et al. [27] define a convnet for coarse multiclass segmentation of C. elegans tissues with fully convolutional inference.

Fully convolutional computation has also been exploited in the present era of many-layered nets. Sliding window detection by Sermanet et al. [29], semantic segmentation by Pinheiro and Collobert [28], and image restoration by Eigen et al. [5] do fully convolutional inference. Fully convolutional training is rare, but used effectively by Tompson et al. [35] to learn an end-to-end part detector and spatial model for pose estimation, although they do not exposit on or analyze this method.

Alternatively, He et al. [17] discard the nonconvolutional portion of classification nets to make a feature extractor. They combine proposals and spatial pyramid pooling to yield a localized, fixed-length feature for classification. While fast and effective, this hybrid model cannot be learned end-to-end.

Dense prediction with convnets. Several recent works have applied convnets to dense prediction problems, including semantic segmentation by Ning et al. [27], Farabet et al.[8], and Pinheiro and Collobert [28]; boundary prediction for electron microscopy by Ciresan et al. [2] and for natural images by a hybrid neural net/nearest neighbor model by Ganin and Lempitsky [11]; and image restoration and depth estimation by Eigen et al. [5, 6]. Common elements of these approaches include

- small models restricting capacity and receptive fields;

- patchwise training [27, 2, 8, 28, 11];

- post-processing by superpixel projection, random field regularization, filtering, or local classification [8, 2, 11];

- input shifting and output interlacing for dense output [28, 11] as introduced by OverFeat [29];

- multi-scale pyramid processing [8, 28, 11];

- saturating tanh nonlinearities [8, 5, 28]; and

- ensembles [2, 11],

whereas our method does without this machinery. However, we do study patchwise training (Section 3.4) and “shift-and-stitch” dense output (Section 3.2) from the perspective of FCNs. We also discuss in-network upsampling (Section 3.3), of which the fully connected prediction by Eigen et al. [6] is a special case.

Unlike these existing methods, we adapt and extend deep classification architectures, using image classification as supervised pre-training, and fine-tune fully convolutionally to learn simply and efficiently from whole image inputs and whole image ground truths.

Hariharan et al. [16] and Gupta et al. [14] likewise adapt deep classification nets to semantic segmentation, but do so in hybrid proposal-classifier models. These approaches fine-tune an R-CNN system [12] by sampling bounding boxes and/or region proposals for detection, semantic segmentation, and instance segmentation. Neither method is learned end-to-end.

They achieve state-of-the-art results on PASCAL VOC segmentation and NYUDv2 segmentation respectively, so we directly compare our standalone, end-to-end FCN to their semantic segmentation results in Section 5.

3. Fully convolutional networks

Each layer of data in a convnet is a three-dimensional array of size h×w×d, where h and w are spatial dimensions, and d is the feature or channel dimension. The first layer is the image, with pixel size h×w, and d color channels. Locations in higher layers correspond to the locations in the image they are path-connected to, which are called their receptive fields.

Convnets are built on translation invariance. Their basic components (convolution, pooling, and activation functions) operate on local input regions, and depend only on relative spatial coordinates. Writing $\mathbf{x}_{ij}$ for the data vector at location $(i, j)$ in a particular layer, and $\mathbf{y}_{ij}$ for the following layer, these functions compute outputs $\mathbf{y}_{ij}$ by

$$
\mathbf{y}_{ij} = f_{ks}\left(\{\mathbf{x}_{si+\delta i,\; sj+\delta j}\}_{0 \le \delta i,\, \delta j < k}\right)
$$

where $k$ is called the kernel size, $s$ is the stride or subsampling factor, and $f_{ks}$ determines the layer type: a matrix multiplication for convolution or average pooling, a spatial max for max pooling, or an elementwise nonlinearity for an activation function, and so on for other types of layers.
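To make the layer definition concrete, here is a minimal NumPy sketch, assuming NumPy 1.20 or later (for `sliding_window_view`), a single-channel 2-D input, and no padding. It computes each output as a rule applied to the $k \times k$ window whose top-left corner is $(si, sj)$; the helper names `local_layer`, `max_rule`, `mean_rule`, and `relu_rule` are hypothetical, not from the paper.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def local_layer(x, k, s, rule):
    """Compute y[i, j] = rule(window) for every k x k window of the 2-D array x
    whose top-left corner is (s*i, s*j). No padding is applied."""
    windows = sliding_window_view(x, (k, k))[::s, ::s]  # shape (H_out, W_out, k, k)
    return rule(windows)

def max_rule(w):
    return w.max(axis=(-2, -1))           # max pooling: spatial max per window

def mean_rule(w):
    return w.mean(axis=(-2, -1))          # average pooling: a fixed linear filter

def relu_rule(w):
    return np.maximum(w[..., 0, 0], 0.0)  # with k = s = 1: an elementwise nonlinearity

x = np.arange(16, dtype=float).reshape(4, 4)
pooled = local_layer(x, k=2, s=2, rule=max_rule)   # [[5, 7], [13, 15]]

# A convolution (cross-correlation, as convnets compute it) is the same windowing
# with a weighted sum as the rule.
kernel = np.array([[1.0, 0.0], [0.0, -1.0]])
conv = local_layer(x, k=2, s=1, rule=lambda w: (w * kernel).sum(axis=(-2, -1)))
```

Only the `rule` argument changes between max pooling, average pooling, convolution, and an activation, which is the sense in which all of these layers share one functional form.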

This functional form is maintained under composition, with kernel size and stride obeying the transformation rule

$$
f_{ks} \circ g_{k's'} = (f \circ g)_{k' + (k-1)s',\; ss'}
$$
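The composition rule lets one compute the effective kernel size (receptive field) and stride of a whole stack by folding layers in order: a layer with kernel $k$ and stride $s$ placed on top of a stack with kernel $k'$ and stride $s'$ gives kernel $k' + (k-1)s'$ and stride $ss'$. The sketch below uses a hypothetical `compose` helper and the standard VGG-16 layout (13 3×3 stride-1 convolutions and five 2×2 stride-2 max pools). Padding is ignored because it shifts windows but does not change their size.

```python
def compose(layers):
    """Fold (kernel_size, stride) pairs, applied in list order, into one
    equivalent (kernel_size, stride) via f_ks o g_k's' = (f o g)_{k' + (k-1)s', ss'}."""
    k_total, s_total = 1, 1  # identity: a 1x1 window with stride 1
    for k, s in layers:
        k_total = k_total + (k - 1) * s_total
        s_total = s_total * s
    return k_total, s_total

# VGG-16 feature extractor: number of 3x3 convs per block, each block ending in a 2x2/2 pool.
vgg16 = []
for n_convs in [2, 2, 3, 3, 3]:
    vgg16 += [(3, 1)] * n_convs + [(2, 2)]

print(compose(vgg16))             # pool5: receptive field 212, stride 32
print(compose(vgg16 + [(7, 1)]))  # after fc6 recast as a 7x7 conv: 404, stride 32
```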

While a general deep net computes a general nonlinear function, a net with only layers of this form computes a nonlinear filter, which we call a deep filter or fully convolutional network. An FCN naturally operates on an input of any size, and produces an output of corresponding (possibly resampled) spatial dimensions.

A real-valued loss function composed with an FCN defines a task. If the loss function is a sum over the spatial dimensions of the final layer, $\ell(\mathbf{x}; \theta) = \sum_{ij} \ell'(\mathbf{x}_{ij}; \theta)$, its gradient will be a sum over the gradients of each of its spatial components. Thus stochastic gradient descent on $\ell$ computed on whole images will be the same as stochastic gradient descent on $\ell'$, taking all of the final layer receptive fields as a minibatch.

When these receptive fields overlap significantly, both feedforward computation and backpropagation are much more efficient when computed layer-by-layer over an entire image instead of independently patch-by-patch.

We next explain how to convert classification nets into fully convolutional nets that produce coarse output maps. For pixelwise prediction, we need to connect these coarse outputs back to the pixels. Section 3.2 describes a trick that OverFeat [29] introduced for this purpose. We gain insight into this trick by reinterpreting it as an equivalent network modification. As an efficient, effective alternative, we introduce deconvolution layers for upsampling in Section 3.3. In Section 3.4 we consider training by patchwise sampling, and give evidence in Section 4.3 that our whole image training is faster and equally effective.

3.1. Adapting classifiers for dense prediction

Typical recognition nets, including LeNet [21], AlexNet [19], and its deeper successors [31, 32], ostensibly take fixed-sized inputs and produce nonspatial outputs. The fully connected layers of these nets have fixed dimensions and throw away spatial coordinates. However, these fully connected layers can also be viewed as convolutions with kernels that cover their entire input regions. Doing so casts them into fully convolutional networks that take input of any size and output classification maps. This transformation is illustrated in Figure 2. (By contrast, nonconvolutional nets, such as the one by Le et al. [20], lack this capability.)
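The following NumPy sketch (toy sizes, random weights, all names hypothetical) checks this reinterpretation numerically: a fully connected layer that expects a $K \times K \times C$ input is reshaped into $N$ kernels of size $K \times K \times C$. On an input of exactly that size the two agree; on a larger input the convolutional version produces a spatial map with one score vector per window.

```python
import numpy as np

rng = np.random.default_rng(0)
C, K, N_OUT = 8, 3, 5            # toy sizes: channels, FC input window, FC outputs

# A fully connected layer that expects a fixed K x K x C input.
W_fc = rng.standard_normal((N_OUT, K * K * C))
b_fc = rng.standard_normal(N_OUT)

def fc(x):
    """Original classifier head: flatten a K x K x C input, then apply an affine map."""
    return W_fc @ x.reshape(-1) + b_fc

# The same weights viewed as N_OUT convolution kernels of size K x K x C.
W_conv = W_fc.reshape(N_OUT, K, K, C)

def fc_as_conv(x, stride=1):
    """Slide the K x K x C kernels over an H x W x C input (no padding)."""
    H, W, _ = x.shape
    out = np.empty(((H - K) // stride + 1, (W - K) // stride + 1, N_OUT))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = x[i * stride:i * stride + K, j * stride:j * stride + K, :]
            out[i, j] = np.tensordot(W_conv, patch, axes=([1, 2, 3], [0, 1, 2])) + b_fc
    return out

x_small = rng.standard_normal((K, K, C))
same = np.allclose(fc_as_conv(x_small)[0, 0], fc(x_small))  # identical on the original size

x_big = rng.standard_normal((10, 12, C))
heatmap = fc_as_conv(x_big)      # shape (8, 10, N_OUT): a map of class-score vectors
```

The reshape works because `x.reshape(-1)` and `W_fc.reshape(N_OUT, K, K, C)` use the same row-major ordering, so each flattened weight lines up with the input entry it multiplied before.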

Figure 2. Transforming fully connected layers into convolution layers enables a classification net to output a heatmap. Adding layers and a spatial loss (as in Figure 1) produces an efficient machine for end-to-end dense learning.

Furthermore, while the resulting maps are equivalent to the evaluation of the original net on particular input patches, the computation is highly amortized over the overlapping regions of those patches. For example, while AlexNet takes 1.2 ms (on a typical GPU) to infer the classification scores of a 227×227 image, the fully convolutional net takes 22 ms to produce a 10×10 grid of outputs from a 500×500 image, which is more than 5 times faster than the naive approach¹.

The spatial output maps of these convolutionalized models make them a natural choice for dense problems like semantic segmentation. With ground truth available at every output cell, both the forward and backward passes are straightforward, and both take advantage of the inherent computational efficiency (and aggressive optimization) of convolution.

The corresponding backward times for the AlexNet example are 2.4 ms for a single image and 37 ms for a fully convolutional 10 × 10 output map, resulting in a speedup similar to that of the forward pass. This dense backpropagation is illustrated in Figure 1.
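A back-of-the-envelope check of the quoted timings, assuming that the "naive approach" means running the 227×227 classifier once per cell of the 10×10 output grid:

```python
# Timings quoted in the text (AlexNet, one GPU).
patch_fwd_ms, patch_bwd_ms = 1.2, 2.4      # one 227x227 input
dense_fwd_ms, dense_bwd_ms = 22.0, 37.0    # one 500x500 input -> 10x10 output map
n_outputs = 10 * 10

# Naive approach: run the patch classifier independently once per output cell.
naive_fwd_ms = n_outputs * patch_fwd_ms    # 120 ms
naive_bwd_ms = n_outputs * patch_bwd_ms    # 240 ms

fwd_speedup = naive_fwd_ms / dense_fwd_ms  # about 5.5x
bwd_speedup = naive_bwd_ms / dense_bwd_ms  # about 6.5x
print(f"forward {fwd_speedup:.1f}x, backward {bwd_speedup:.1f}x")
```

Both ratios exceed 5, consistent with the statement that the backward speedup is similar to the forward one.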

While our reinterpretation of classification nets as fully convolutional yields output maps for inputs of any size, the output dimensions are typically reduced by subsampling. The classification nets subsample to keep filters small and computational requirements reasonable. This coarsens the output of a fully convolutional version of these nets, reducing it from the size of the input by a factor equal to the pixel stride of the receptive fields of the output units.

3.2. Shift-and-stitch is filter rarefaction

Input shifting and output interlacing is a trick that yields dense predictions from coarse outputs without interpolation, introduced by OverFeat [29]. If the outputs are downsampled by a factor of $f$, the input is shifted (by left and top padding) $x$ pixels to the right and $y$ pixels down, once for every value of $(x, y) \in \{0, \ldots, f-1\} \times \{0, \ldots, f-1\}$. These $f^2$ inputs are each run through the convnet, and the outputs are interlaced so that the predictions correspond to the pixels at the centers of their receptive fields.
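A minimal NumPy sketch of shift-and-stitch, in which the "convnet" is only 2×2 average pooling with stride $f = 2$ (a stand-in, not a real network). One assumption differs from the description: each shift is implemented by cropping $y$ rows and $x$ columns from the top-left instead of padding, which moves the content the other way but keeps the interlacing indices simple. The stitched result matches running the same pooling at stride 1, which is the dense output the trick is meant to recover.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def avg_pool(x, k, s):
    """k x k average pooling with stride s on a 2-D array (no padding)."""
    return sliding_window_view(x, (k, k))[::s, ::s].mean(axis=(-2, -1))

def coarse_net(z):
    """Toy stride-2 'net' (hypothetical stand-in for a real convnet)."""
    return avg_pool(z, k=2, s=2)

def shift_and_stitch(net, x, f, out_shape):
    """Densify a stride-f net by running it on f*f shifted inputs and interlacing.

    Shift (dy, dx) is realized by cropping dy rows and dx columns from the
    top-left, so output (i, j) of that run lands at dense position (dy + f*i, dx + f*j).
    """
    out = np.empty(out_shape)
    for dy in range(f):
        for dx in range(f):
            out[dy::f, dx::f] = net(x[dy:, dx:])
    return out

x = np.random.default_rng(0).standard_normal((8, 8))
dense = shift_and_stitch(coarse_net, x, f=2, out_shape=(7, 7))
matches = np.allclose(dense, avg_pool(x, k=2, s=1))   # same as running at stride 1
```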

Changing only the filters and layer strides of a convnet can produce the same output as this shift-and-stitch trick. Consider a layer (convolution or pooling) with input stride $s$, and a following convolution layer with filter weights $f_{ij}$ (eliding the feature dimensions, irrelevant here). Setting the lower layer’s input stride to 1 upsamples its output by a factor of $s$, just like shift-and-stitch. However, convolving the original filter with the upsampled output does not produce the same result as the trick, because the original filter only sees a reduced portion of its (now upsampled) input. To reproduce the trick, rarefy the filter by enlarging it as

$$
f'_{ij} = \begin{cases} f_{i/s,\, j/s} & \text{if } s \text{ divides both } i \text{ and } j, \\ 0 & \text{otherwise} \end{cases}
$$

(with $i$ and $j$ zero-based). Reproducing the full net output of the trick involves repeating this filter enlargement layer-by-layer until all subsampling is removed.
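The equivalence can be checked numerically on a toy two-layer net: 2×2 average pooling with stride $s = 2$ followed by a 3×3 filter $g$. The sketch below (hypothetical helper names, NumPy 1.20 or later) compares (a) shift-and-stitch on the original net with (b) the same net with the pooling stride set to 1 and $g$ rarefied, i.e. its taps placed $s$ pixels apart with zeros in between. Shifts are implemented by top-left cropping, an assumption made to keep the interlacing indices simple. The rarefied filter is trimmed to $s(k-1)+1$ pixels per side, since trailing zero rows and columns would only shrink the output.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def rarefy(filt, s):
    """f'[i, j] = f[i // s, j // s] if s divides both i and j, else 0 (zero-based).
    A k x k filter becomes (s*(k-1)+1) x (s*(k-1)+1)."""
    k = filt.shape[0]
    out = np.zeros((s * (k - 1) + 1, s * (k - 1) + 1))
    out[::s, ::s] = filt
    return out

def correlate(x, filt):
    """Valid 2-D cross-correlation (what a convnet 'convolution' computes)."""
    return np.einsum("ijuv,uv->ij", sliding_window_view(x, filt.shape), filt)

def avg_pool(x, k, s):
    """k x k average pooling with stride s (no padding)."""
    return sliding_window_view(x, (k, k))[::s, ::s].mean(axis=(-2, -1))

rng = np.random.default_rng(1)
x = rng.standard_normal((12, 12))
g = rng.standard_normal((3, 3))
s = 2

# (a) Shift-and-stitch over the two-layer net: pool with stride s, then filter g.
stitched = np.empty((7, 7))
for dy in range(s):
    for dx in range(s):
        stitched[dy::s, dx::s] = correlate(avg_pool(x[dy:, dx:], 2, s), g)

# (b) Remove the subsampling (stride 1) and rarefy the next filter instead.
rarefied = correlate(avg_pool(x, 2, 1), rarefy(g, s))
```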

Simply decreasing subsampling within a net is a tradeoff: the filters see finer information, but have smaller receptive fields and take longer to compute. We have seen that the shift-and-stitch trick is another kind of tradeoff: the output is made denser without decreasing the receptive field sizes of the filters, but the filters are prohibited from accessing information at a finer scale than their original design.

Although we have done preliminary experiments with shift-and-stitch, we do not use it in our model. We find learning through upsampling, as described in the next section, to be more effective and efficient, especially when combined with the skip layer fusion described later on.

3.3. Upsampling is backwards strided convolution

Another way to connect coarse outputs to dense pixels is interpolation. For instance, simple bilinear interpolation computes each output from the nearest four inputs by a linear map that depends only on the relative positions of the input and output cells.

In a sense, upsampling with factor $f$ is convolution with a fractional input stride of $1/f$. So long as $f$ is integral, a natural way to upsample is therefore backwards convolution (sometimes called deconvolution) with an output stride of $f$. Such an operation is trivial to implement, since it simply reverses the forward and backward passes of convolution.
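A 1-D NumPy sketch of upsampling as backwards strided convolution, assuming an integer factor $f$ and a linear-interpolation kernel of length $2f$ (one common way to initialize such a layer; here it stays fixed, although the text notes it can be learned). `backward_conv` scatters each input value times the kernel into the output at stride $f$, and the adjoint check confirms it is the transpose of an ordinary stride-$f$ convolution with the same kernel, which is why its forward pass is the backward pass of convolution. All names are hypothetical; in 2-D the bilinear kernel is the outer product of two such 1-D kernels.

```python
import numpy as np

def bilinear_kernel(f):
    """1-D linear-interpolation kernel of length 2f for integer factor f."""
    center = f - 0.5                 # even-length kernel, centered between taps f-1 and f
    u = np.arange(2 * f)
    return 1.0 - np.abs(u - center) / f

def strided_conv(y, kernel, f):
    """Ordinary convolution with output stride f: out[i] = sum_u kernel[u] * y[f*i + u]."""
    n = (len(y) - len(kernel)) // f + 1
    return np.array([kernel @ y[f * i:f * i + len(kernel)] for i in range(n)])

def backward_conv(x, kernel, f):
    """Backwards strided convolution: add x[i] * kernel into the output at offset f*i."""
    out = np.zeros((len(x) - 1) * f + len(kernel))
    for i, v in enumerate(x):
        out[f * i:f * i + len(kernel)] += v * kernel
    return out

f = 2
k = bilinear_kernel(f)                               # [0.25, 0.75, 0.75, 0.25]
up = backward_conv(np.array([0.0, 1.0, 2.0]), k, f)
print(up[f:-f])                                      # [0.25 0.75 1.25 1.75]: a straight line

# Adjoint check: <strided_conv(y), x> == <y, backward_conv(x)>.
rng = np.random.default_rng(0)
x = rng.standard_normal(5)
y = rng.standard_normal((len(x) - 1) * f + len(k))
adjoint_ok = np.isclose(strided_conv(y, k, f) @ x, y @ backward_conv(x, k, f))
```

The first and last $f$ outputs receive only part of the kernel sum, so implementations typically crop them.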

Thus upsampling is performed in-network for end-to-end learning by backpropagation from the pixelwise loss. Note that the deconvolution filter in such a layer need not be fixed (e.g., to bilinear upsampling), but can be learned. A stack of deconvolution layers and activation functions can even learn a nonlinear upsampling.

In our experiments, we find that in-network upsampling is fast and effective for learning dense prediction. Our best segmentation architecture uses these layers to learn to upsample for refined prediction in Section 4.2.

3.4. Patchwise training is loss sampling

In stochastic optimization, gradient computation is driven by the training distribution. Both patchwise training and fully convolutional training can be made to produce any distribution, although their relative computational efficiency depends on overlap and minibatch size. Whole image fully convolutional training is identical to patchwise training where each batch consists of all the receptive fields of the units below the loss for an image (or collection of images). While this is more efficient than uniform sampling of patches, it reduces the number of possible batches. However, random selection of patches within an image may be recovered simply. Restricting the loss to a randomly sampled subset of its spatial terms (or, equivalently applying a DropConnect mask [36] between the output and the loss) excludes patches from the gradient computation.
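A minimal NumPy sketch of loss sampling, assuming the dense loss map $\ell'(\mathbf{x}_{ij}; \theta)$ has already been computed and that the sampled loss is normalized by the number of kept terms (an illustrative choice). A Bernoulli mask with keep probability `keep_prob` plays the role of the DropConnect mask between output and loss: masked-out cells contribute nothing to the loss and therefore nothing to the gradient. The function name is hypothetical.

```python
import numpy as np

def sampled_loss(per_pixel_loss, keep_prob, rng):
    """Restrict a dense loss map to a random subset of its spatial terms.

    Returns the mean over kept terms and the Bernoulli mask that was used.
    """
    mask = rng.random(per_pixel_loss.shape) < keep_prob
    kept = per_pixel_loss[mask]
    return kept.sum() / max(kept.size, 1), mask

rng = np.random.default_rng(0)
loss_map = rng.random((64, 64))   # stand-in for the per-cell losses of one image
full = loss_map.mean()            # whole-image training: every term kept
sub, mask = sampled_loss(loss_map, keep_prob=0.5, rng=rng)
print(mask.mean())                # fraction of cells that contribute, roughly 0.5
```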

If the kept patches still have significant overlap, fully convolutional computation will still speed up training. If gradients are accumulated over multiple backward passes, batches can include patches from several images.²

Sampling in patchwise training can correct class imbalance [27, 8, 2] and mitigate the spatial correlation of dense patches [28, 16]. In fully convolutional training, class balance can also be achieved by weighting the loss, and loss sampling can be used to address spatial correlation.
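For the class-balance alternative, here is a sketch of one common scheme (an assumption, not something the text prescribes): weight each pixel's log-loss by the inverse pixel frequency of its ground-truth class, so that every class present contributes the same total weight. Function names are hypothetical.

```python
import numpy as np

def class_weighted_nll(log_probs, labels, class_weights):
    """Pixelwise negative log-likelihood, each pixel weighted by its true class's weight.

    log_probs: (C, H, W) log-softmax scores; labels: (H, W) ints in [0, C).
    """
    H, W = labels.shape
    picked = log_probs[labels, np.arange(H)[:, None], np.arange(W)[None, :]]  # (H, W)
    w = class_weights[labels]
    return -(w * picked).sum() / w.sum()

def inverse_frequency_weights(labels, n_classes):
    """Weight each class by 1 / (its pixel frequency); absent classes get 0."""
    counts = np.bincount(labels.ravel(), minlength=n_classes).astype(float)
    freq = counts / counts.sum()
    return np.where(counts > 0, 1.0 / np.maximum(freq, 1e-12), 0.0)

rng = np.random.default_rng(0)
labels = np.zeros((8, 8), dtype=int)
labels[:2, :2] = 1                                  # background dominates: 60 vs 4 pixels
scores = rng.standard_normal((2, 8, 8))
log_probs = scores - np.log(np.exp(scores).sum(axis=0, keepdims=True))
weights = inverse_frequency_weights(labels, 2)
loss = class_weighted_nll(log_probs, labels, weights)
```

With uniform weights this reduces to the ordinary mean pixelwise loss.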

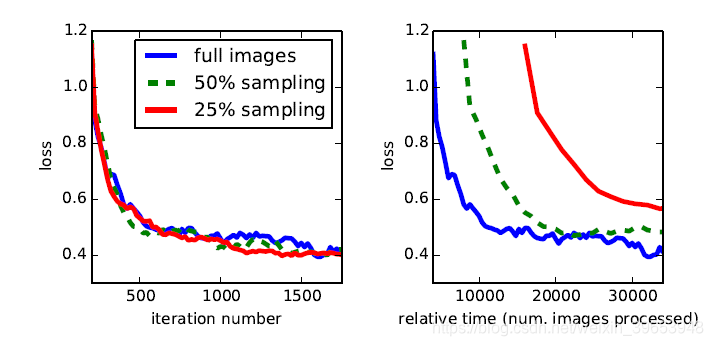

We explore training with sampling in Section 4.3, and do not find that it yields faster or better convergence for dense prediction. Whole image training is effective and efficient.

4. Segmentation Architecture

We cast ILSVRC classifiers into FCNs and augment them for dense prediction with in-network upsampling and a pixelwise loss. We train for segmentation by fine-tuning. Next, we add skips between layers to fuse coarse, semantic and local, appearance information. This skip architecture is learned end-to-end to refine the semantics and spatial precision of the output.

For this investigation, we train and validate on the PASCAL VOC 2011 segmentation challenge [8]. We train with a per-pixel multinomial logistic loss and validate with the standard metric of mean pixel intersection over union, with the mean taken over all classes, including background. The training ignores pixels that are masked out (as ambiguous or difficult) in the ground truth.
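A NumPy sketch of the training loss and validation metric described above. Assumptions: scores are a $(C, H, W)$ array, masked-out pixels carry the label 255 (the value PASCAL VOC annotations use for "void"), and mean IU is computed over whatever pixels are passed in, so evaluating a whole validation set means pooling its pixels rather than averaging per-image scores. Classes that appear in neither the prediction nor the ground truth are skipped. Names are hypothetical.

```python
import numpy as np

IGNORE = 255   # assumption: VOC-style "void" label for ambiguous or difficult pixels

def pixel_logistic_loss(scores, labels):
    """Mean per-pixel multinomial logistic (softmax cross-entropy) loss.
    scores: (C, H, W) raw class scores; labels: (H, W) ints, IGNORE = masked out."""
    shifted = scores - scores.max(axis=0, keepdims=True)          # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    valid = labels != IGNORE
    rows, cols = np.nonzero(valid)                                # same order as labels[valid]
    return -log_probs[labels[valid], rows, cols].mean()

def mean_iu(pred, labels, n_classes):
    """Mean intersection over union across all classes, background included."""
    valid = labels != IGNORE
    p, t = pred[valid], labels[valid]
    ious = []
    for c in range(n_classes):
        inter = np.sum((p == c) & (t == c))
        union = np.sum((p == c) | (t == c))
        if union > 0:
            ious.append(inter / union)
    return float(np.mean(ious))

labels = np.array([[0, 0], [1, IGNORE]])
pred = np.array([[0, 1], [1, 1]])
miu = mean_iu(pred, labels, n_classes=21)               # classes 0 and 1 each have IU 0.5
uniform_loss = pixel_logistic_loss(np.zeros((21, 2, 2)), labels)   # log(21) for uniform scores
```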

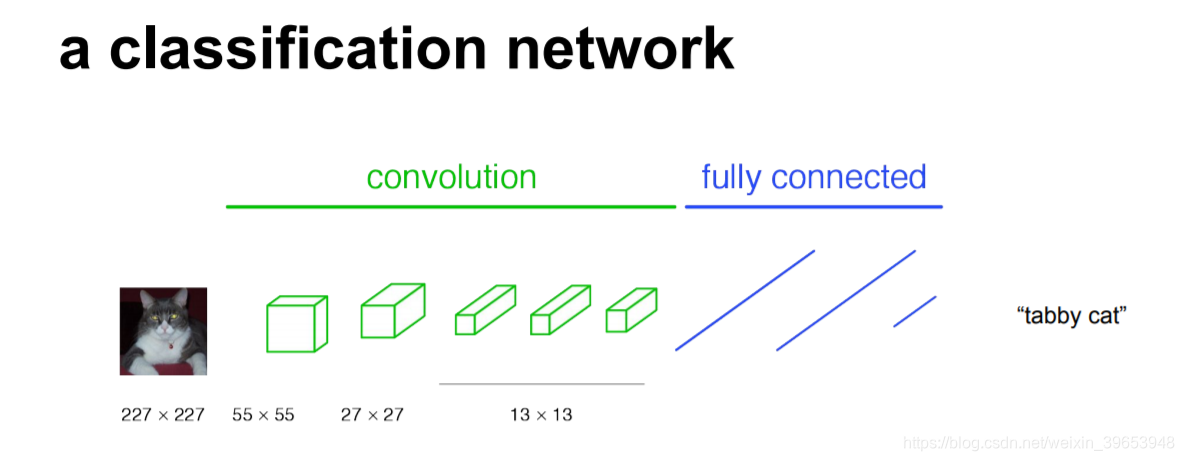

Figure 3. Our DAG nets learn to combine coarse, high layer information with fine, low layer information. Pooling and prediction layers are shown as grids that reveal relative spatial coarseness, while intermediate layers are shown as vertical lines. First row (FCN-32s): Our single-stream net, described in Section 4.1, upsamples stride 32 predictions back to pixels in a single step. Second row (FCN-16s): Combining predictions from both the final layer and the pool4 layer, at stride 16, lets our net predict finer details, while retaining high-level semantic information. Third row (FCN-8s): Additional predictions from pool3, at stride 8, provide further precision.

4.1. From classifier to dense FCN

We begin by convolutionalizing proven classification architectures as in Section 3. We consider the AlexNet³ architecture [22] that won ILSVRC12, as well as the VGG nets [34] and the GoogLeNet⁴ [35] which did exceptionally well in ILSVRC14. We pick the VGG 16-layer net⁵, which we found to be equivalent to the 19-layer net on this task. For GoogLeNet, we use only the final loss layer, and improve performance by discarding the final average pooling layer. We decapitate each net by discarding the final classifier layer, and convert all fully connected layers to convolutions. We append a 1×1 convolution with channel dimension 21 to predict scores for each of the PASCAL classes (including background) at each of the coarse output locations, followed by a deconvolution layer to bilinearly upsample the coarse outputs to pixel-dense outputs as described in Section 3.3. Table 1 compares the preliminary validation results along with the basic characteristics of each net. We report the best results achieved after convergence at a fixed learning rate (at least 175 epochs).
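The appended 1×1 convolution is one linear classifier shared across all coarse output locations. A NumPy sketch with toy sizes (a 64-channel random feature map stands in for the 4096-channel conv7 output; weights are random):

```python
import numpy as np

rng = np.random.default_rng(0)
D, N_CLASSES = 64, 21                         # toy feature depth; 21 PASCAL classes incl. background
feat = rng.standard_normal((D, 16, 16))       # coarse stride-32 features (toy spatial size)
W = rng.standard_normal((N_CLASSES, D)) * 0.01
b = np.zeros(N_CLASSES)

# 1x1 convolution: apply the same (N_CLASSES x D) matrix at every spatial location.
scores = np.einsum("cd,dhw->chw", W, feat) + b[:, None, None]   # (21, 16, 16)
coarse_labels = scores.argmax(axis=0)         # per-location class before upsampling
```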

Fine-tuning from classification to segmentation gave reasonable predictions for each net. Even the worst model achieved ~75% of state-of-the-art performance. The segmentation-equipped VGG net (FCN-VGG16) already appears to be state-of-the-art at 56.0 mean IU on val, compared to 52.6 on test [17]. Training on extra data raises FCN-VGG16 to 59.4 mean IU and FCN-AlexNet to 48.0 mean IU on a subset of val⁷. Despite similar classification accuracy, our implementation of GoogLeNet did not match the VGG16 segmentation result.

Table 1. We adapt and extend three classification convnets. We compare performance by mean intersection over union on the validation set of PASCAL VOC 2011 and by inference time (averaged over 20 trials for a 500×500 input on an NVIDIA Tesla K40c). We detail the architecture of the adapted nets with regard to dense prediction: number of parameter layers, receptive field size of output units, and the coarsest stride within the net. (These numbers give the best performance obtained at a fixed learning rate, not best performance possible.)

4.2. Combining what and where

We define a new fully convolutional net (FCN) for segmentation that combines layers of the feature hierarchy and refines the spatial precision of the output. See Figure 3.

While fully convolutionalized classifiers can be fine-tuned to segmentation as shown in 4.1, and even score highly on the standard metric, their output is dissatisfyingly coarse (see Figure 4). The 32-pixel stride at the final prediction layer limits the scale of detail in the upsampled output.

We address this by adding skips [1] that combine the final prediction layer with lower layers with finer strides. This turns a line topology into a DAG, with edges that skip ahead from lower layers to higher ones (Figure 3). As they see fewer pixels, the finer scale predictions should need fewer layers, so it makes sense to make them from shallower net outputs. Combining fine layers and coarse layers lets the model make local predictions that respect global structure. By analogy to the jet of Koenderink and van Doorn [21], we call our nonlinear feature hierarchy the deep jet.

We first divide the output stride in half by predicting from a 16-pixel stride layer. We add a 1×1 convolution layer on top of pool4 to produce additional class predictions. We fuse this output with the predictions computed on top of conv7 (convolutionalized fc7) at stride 32 by adding a 2× upsampling layer and summing both predictions (see Figure 3). We initialize the 2× upsampling to bilinear interpolation, but allow the parameters to be learned as described in Section 3.3. Finally, the stride 16 predictions are upsampled back to the image. We call this net FCN-16s. FCN-16s is learned end-to-end, initialized with the parameters of the last, coarser net, which we now call FCN-32s. The new parameters acting on pool4 are zero-initialized so that the net starts with unmodified predictions. The learning rate is decreased by a factor of 100.
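
The FCN-16s fusion step can be sketched in NumPy as below. This is an illustrative reimplementation under stated assumptions, not the reference code:

- `bilinear_kernel` builds the usual bilinear weights for a stride-2 transposed convolution.
- The pool4 score layer is a plain 1×1 convolution.
- The crop `offset=1` that aligns the upsampled stride-32 map with the stride-16 grid is an assumption; the real offsets depend on the padding used in the network.

With the pool4 weights zero-initialized, the fused output equals the upsampled FCN-32s scores. This is the "unmodified predictions" starting point described above.

```python
import numpy as np

def bilinear_kernel(size):
    """(size, size) bilinear interpolation weights for a transposed convolution."""
    factor = (size + 1) // 2
    center = factor - 1 if size % 2 == 1 else factor - 0.5
    k1d = 1 - np.abs(np.arange(size) - center) / factor
    return np.outer(k1d, k1d)

def upsample(scores, stride):
    """Per-channel transposed convolution of (K, H, W) scores with a fixed 2*stride bilinear kernel."""
    k = 2 * stride
    kernel = bilinear_kernel(k)
    n, h, w = scores.shape
    out = np.zeros((n, (h - 1) * stride + k, (w - 1) * stride + k))
    for i in range(h):
        for j in range(w):
            out[:, i * stride:i * stride + k, j * stride:j * stride + k] += (
                scores[:, i, j, None, None] * kernel
            )
    return out

def score_1x1(features, weight, bias):
    """1x1 convolution: (C, H, W) features -> (K, H, W) class scores."""
    return np.einsum("kc,chw->khw", weight, features) + bias[:, None, None]

def fuse_16s(score_conv7, pool4, w_pool4, b_pool4, offset=1):
    """Sum 2x-upsampled stride-32 scores with 1x1 scores computed on pool4 (stride 16)."""
    score_pool4 = score_1x1(pool4, w_pool4, b_pool4)
    h, w = score_pool4.shape[1:]
    up = upsample(score_conv7, 2)[:, offset:offset + h, offset:offset + w]  # assumed alignment crop
    return up + score_pool4

rng = np.random.default_rng(0)
score_conv7 = rng.standard_normal((21, 8, 8))          # stride-32 scores (FCN-32s)
pool4 = rng.standard_normal((512, 16, 16))             # stride-16 features
w_pool4, b_pool4 = np.zeros((21, 512)), np.zeros(21)   # zero-initialized new layer
fused = fuse_16s(score_conv7, pool4, w_pool4, b_pool4)
```

FCN-8s repeats the same pattern once more with pool3. The final fused map would then be upsampled to image resolution with a larger bilinear kernel, e.g. `upsample(fused, 16)` for the stride-16 case.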

Learning this skip net improves performance on the validation set by 3.0 mean IU to 62.4. Figure 4 shows improvement in the fine structure of the output. We compared this fusion with learning only from the pool4 layer, which resulted in poor performance, and simply decreasing the learning rate without adding the skip, which resulted in an insignificant performance improvement without improving the quality of the output.

We continue in this fashion by fusing predictions from pool3 with a 2× upsampling of predictions fused from pool4 and conv7, building the net FCN-8s. We obtain a minor additional improvement to 62.7 mean IU, and find a slight improvement in the smoothness and detail of our output. At this point our fusion improvements have met diminishing returns, both with respect to the IU metric, which emphasizes large-scale correctness, and in terms of the visible improvement (e.g., in Figure 4), so we do not continue fusing even lower layers.

Refinement by other means. Decreasing the stride of pooling layers is the most straightforward way to obtain finer predictions. However, doing so is problematic for our VGG16-based net. Setting the pool5 stride to 1 requires our convolutionalized fc6 to have kernel size 14×14 to maintain its receptive field size. In addition to their computational cost, we had difficulty learning such large filters. We attempted to re-architect the layers above pool5 with smaller filters, but did not achieve comparable performance; one possible explanation is that the ILSVRC initialization of the upper layers is important.
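
Here is a rough accounting of where the 14×14 figure comes from. It treats the spatial extent covered by a kernel as kernel size times the stride of the grid it operates on, which is a simplification. With pool5 at stride 2, fc6's 7×7 kernel sits on a stride-32 grid. With pool5 at stride 1, the grid stride drops to 16, so the kernel must double to span the same region. The parameter counts assume VGG16's 512 pool5 channels and 4096 fc6 outputs.

$$
7 \times 32 = 224 = 14 \times 16
$$

$$
\underbrace{7 \cdot 7 \cdot 512 \cdot 4096}_{\text{fc6, pool5 stride 2}} = 102{,}760{,}448
\qquad
\underbrace{14 \cdot 14 \cdot 512 \cdot 4096}_{\text{fc6, pool5 stride 1}} = 411{,}041{,}792
$$

Under this accounting, fc6 alone would have 4× the weights and 4× the multiply-adds per output location. It would also have 4× as many output locations, because the output grid is twice as dense in each dimension.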

Another way to obtain finer predictions is to use the shift-and-stitch trick described in Section 3.2. In limited experiments, we found the cost-to-improvement ratio of this method to be worse than that of layer fusion.
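
For comparison, shift-and-stitch (Section 3.2) can be sketched on a toy "net" that is just a 2×2 max pool with stride f = 2. The function names are hypothetical. The sketch works as follows:

- It crops the input by (dy, dx) for every 0 ≤ dy, dx < f instead of shifting it with padding.
- It runs the strided net on each of the f² inputs.
- It interleaves the outputs, which reproduces the dense stride-1 output.

It needs f² forward passes, which is the cost side of the ratio mentioned above.

```python
import numpy as np

def max_pool(x, k, stride):
    """2-D max pooling of an (H, W) array without padding."""
    h = (x.shape[0] - k) // stride + 1
    w = (x.shape[1] - k) // stride + 1
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = x[i * stride:i * stride + k, j * stride:j * stride + k].max()
    return out

def shift_and_stitch(x, net, f):
    """Run a stride-f `net` on f*f offset crops of x and interleave the outputs.

    The output size (H - f + 1, W - f + 1) matches the toy net below (kernel size = f);
    other nets would need their own output-size bookkeeping.
    """
    h, w = x.shape
    out = np.empty((h - f + 1, w - f + 1))
    for dy in range(f):
        for dx in range(f):
            out[dy::f, dx::f] = net(x[dy:, dx:])
    return out

f = 2

def coarse_net(z):
    """Toy stride-2 'net': 2x2 max pooling with stride 2."""
    return max_pool(z, f, f)

x = np.random.default_rng(0).standard_normal((12, 12))
dense = shift_and_stitch(x, coarse_net, f)   # equals max_pool(x, 2, 1)
```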

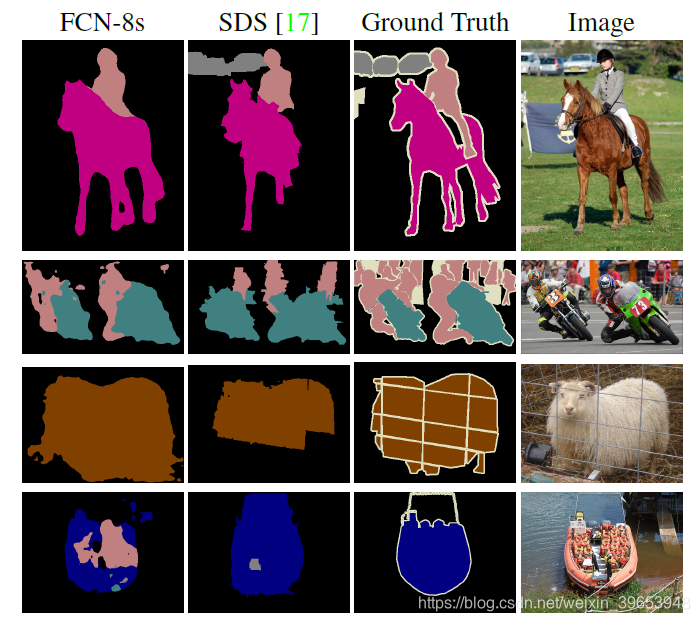

Figure 4. Refining fully convolutional nets by fusing information from layers with different strides improves segmentation detail. The first three images show the output from our 32, 16, and 8 pixel stride nets (see Figure 3).

Table 2. Comparison of skip FCNs on a subset of PASCAL VOC2011 validation. Learning is end-to-end, except for FCN-32s-fixed, where only the last layer is fine-tuned. Note that FCN-32s is FCN-VGG16, renamed to highlight stride.

4.3. Experimental framework

Optimization. We train by SGD with momentum. We use a minibatch size of 20 images and a fixed learning rate for each of FCN-AlexNet, FCN-VGG16, and FCN-GoogLeNet, chosen by line search. We use momentum 0.9, weight decay, and a doubled learning rate for biases, although we found training to be sensitive to the learning rate alone. We zero-initialize the class scoring layer, as random initialization yielded neither better performance nor faster convergence. Dropout was included where used in the original classifier nets.
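
Here is a minimal sketch of the update rule described above, written in the Caffe style: v ← μv − η·m·(g + λw), then w ← w + v, where m is a per-parameter learning-rate multiplier. The parameter names `score_w` and `score_b` are hypothetical. The hyperparameter values in the test are placeholders, not the paper's settings. Applying weight decay to biases as well is an assumption.

```python
import numpy as np

def sgd_momentum_step(params, grads, velocity, lr, momentum, weight_decay, lr_mult):
    """One in-place SGD-with-momentum update over a dict of parameter arrays.

    v <- momentum * v - lr * mult * (grad + weight_decay * w);  w <- w + v
    """
    for name in list(params):
        g = grads[name] + weight_decay * params[name]
        velocity[name] = momentum * velocity[name] - lr * lr_mult.get(name, 1.0) * g
        params[name] = params[name] + velocity[name]

# Hypothetical class-scoring layer, zero-initialized as in the text.
params = {"score_w": np.zeros((21, 4096)), "score_b": np.zeros(21)}
velocity = {k: np.zeros_like(v) for k, v in params.items()}
lr_mult = {"score_b": 2.0}  # doubled learning rate for biases
```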

Fine-tuning. We fine-tune all layers by backpropagation through the whole net. Fine-tuning the output classifier alone yields only 70% of the full fine-tuning performance, as compared in Table 2. Training from scratch is not feasible considering the time required to learn the base classification nets. (Note that the VGG net is trained in stages, while we initialize from the full 16-layer version.) Fine-tuning takes three days on a single GPU for the coarse FCN-32s version, and about one day each to upgrade to the FCN-16s and FCN-8s versions.

Figure 5. Training on whole images is just as effective as sampling patches, but results in faster (wall time) convergence by making more efficient use of data. Left shows the effect of sampling on convergence rate for a fixed expected batch size, while right plots the same by relative wall time.

More Training Data. The PASCAL VOC 2011 segmentation training set labels 1112 images. Hariharan et al. [16] collected labels for a larger set of 8498 PASCAL training images, which was used to train the previous state-of-the-art system, SDS [17]. This training data improves the FCN-VGG16 validation score by 3.4 points to 59.4 mean IU.

Patch Sampling. As explained in Section 3.4, our full image training effectively batches each image into a regular grid of large, overlapping patches. By contrast, prior work randomly samples patches over a full dataset [30, 3, 9, 31, 11], potentially resulting in higher-variance batches that may accelerate convergence [24]. We study this tradeoff by spatially sampling the loss in the manner described earlier, making an independent choice to ignore each final layer cell with some probability 1-p. To avoid changing the effective batch size, we simultaneously increase the number of images per batch by a factor 1/p. Note that due to the efficiency of convolution, this form of rejection sampling is still faster than patchwise training for large enough values of p (e.g., at least for p > 0.2 according to the numbers in Section 3.1). Figure 5 shows the effect of this form of sampling on convergence. We find that sampling does not have a significant effect on convergence rate compared to whole image training, but takes significantly more time due to the larger number of images that need to be considered per batch. We therefore choose unsampled, whole image training in our other experiments.
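
The spatial loss sampling described above can be sketched as follows, assuming a per-pixel softmax cross-entropy loss on (N, K, H, W) score maps. Each final-layer cell is kept independently with probability p. The number of images per batch is scaled by 1/p so the expected number of contributing cells matches unsampled training. The function names are hypothetical; this is not the authors' implementation.

```python
import math
import numpy as np

def per_pixel_nll(scores, labels):
    """Softmax cross-entropy per output cell: scores (N, K, H, W), labels (N, H, W) -> (N, H, W)."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -np.take_along_axis(log_probs, labels[:, None], axis=1)[:, 0]

def spatially_sampled_loss(scores, labels, p, rng):
    """Keep each final-layer cell with probability p (ignore it with probability 1 - p)."""
    nll = per_pixel_nll(scores, labels)
    keep = rng.random(nll.shape) < p
    return float((nll * keep).sum()), int(keep.sum())

def images_per_batch(base_images, p):
    """Scale images per batch by 1/p so the expected number of loss cells stays fixed."""
    return math.ceil(base_images / p)
```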

Class Balancing. Fully convolutional training can balance classes by weighting or sampling the loss. Although our labels are mildly unbalanced (about 3/4 are background), we find class balancing unnecessary.
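
Although the authors found it unnecessary, loss weighting is easy to sketch. One common choice, assumed here rather than taken from the paper, is inverse class frequency. With about 3/4 background pixels, background gets weight 2/3 and the foreground class gets weight 2, so both contribute equally in total. The label value 255 is treated as "ignore", following the PASCAL VOC void label. The function names are hypothetical.

```python
import numpy as np

def inverse_frequency_weights(labels, n_cl, ignore=255):
    """Per-class loss weights w_c = N / (n_cl * N_c); classes absent from labels get weight 0."""
    valid = labels[labels != ignore]
    counts = np.bincount(valid.ravel(), minlength=n_cl).astype(float)
    weights = np.zeros(n_cl)
    present = counts > 0
    weights[present] = counts.sum() / (n_cl * counts[present])
    return weights, counts

def weighted_pixel_nll(nll, labels, weights, ignore=255):
    """Weight each pixel's loss by its class weight, averaging over non-ignored pixels."""
    valid = labels != ignore
    return float((nll[valid] * weights[labels[valid]]).sum() / valid.sum())
```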

Dense Prediction. The scores are upsampled to the input dimensions by deconvolution layers within the net. Final layer deconvolutional filters are fixed to bilinear interpolation, while intermediate upsampling layers are initialized to bilinear upsampling, and then learned.

Augmentation. We tried augmenting the training data by randomly mirroring and “jittering” the images by translating them up to 32 pixels (the coarsest scale of prediction) in each direction. This yielded no noticeable improvement.
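
Here is a sketch of the mirroring and jittering augmentation, assuming an (H, W, C) image and an (H, W) label map larger than the shift range. The ±32-pixel range follows the text. Filling uncovered image pixels with 0 and uncovered label pixels with the ignore value 255 are assumptions. Image and label are always transformed together so they stay aligned. The function names are hypothetical.

```python
import numpy as np

IGNORE_LABEL = 255  # assumed void/ignore value (PASCAL VOC convention)

def _window(n, d):
    """Source and destination slices for shifting an axis of length n by d pixels (|d| < n)."""
    if d >= 0:
        return slice(0, n - d), slice(d, n)
    return slice(-d, n), slice(0, n + d)

def translate(arr, dy, dx, fill):
    """Shift arr (first two axes are H, W) by (dy, dx), filling uncovered pixels with `fill`."""
    out = np.full_like(arr, fill)
    ys, yd = _window(arr.shape[0], dy)
    xs, xd = _window(arr.shape[1], dx)
    out[yd, xd] = arr[ys, xs]
    return out

def mirror_and_jitter(image, label, rng, max_shift=32):
    """Randomly mirror image and label together, then jitter both by up to max_shift pixels."""
    if rng.random() < 0.5:
        image, label = image[:, ::-1], label[:, ::-1]
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    return translate(image, dy, dx, 0), translate(label, dy, dx, IGNORE_LABEL)
```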

Implementation. All models are trained and tested with Caffe [20] on a single NVIDIA Tesla K40c. Our models and code are publicly available at http://fcn.berkeleyvision.org.

5. Results

We test our FCN on semantic segmentation and scene parsing, exploring PASCAL VOC, NYUDv2, and SIFT Flow. Although these tasks have historically distinguished between objects and regions, we treat both uniformly as pixel prediction. We evaluate our FCN skip architecture on each of these datasets, and then extend it to multi-modal input for NYUDv2 and multi-task prediction for the semantic and geometric labels of SIFT Flow.

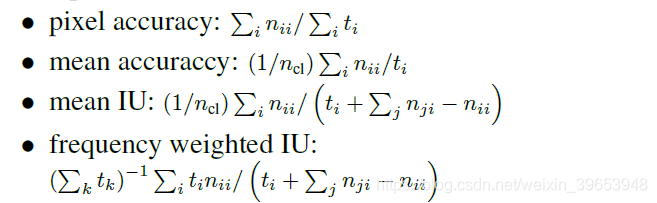

Metrics. We report four metrics from common semantic segmentation and scene parsing evaluations that are variations on pixel accuracy and region intersection over union (IU). Let $n_{ij}$ be the number of pixels of class $i$ predicted to belong to class $j$, where there are $n_{cl}$ different classes, and let $t_i = \sum_j n_{ij}$ be the total number of pixels of class $i$. We compute:

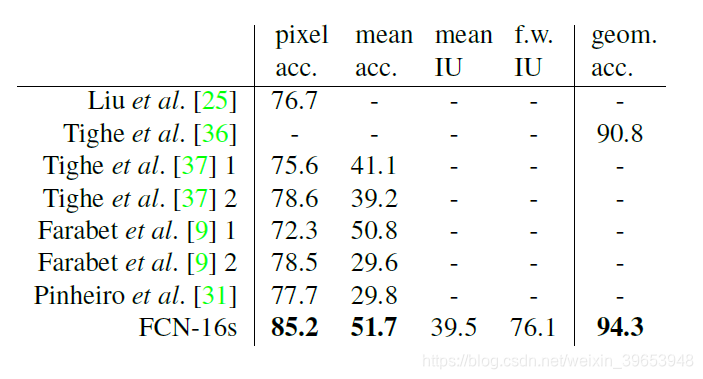
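
The four metrics can be computed from a confusion matrix `hist` with `hist[i, j] = n_ij`. Here is a minimal NumPy sketch with hypothetical helper names. It makes three assumptions:

- Pixels whose ground truth is outside `[0, n_cl)` (e.g., the VOC void label 255) are ignored.
- Predictions lie in `[0, n_cl)`.
- Classes with an undefined ratio (0/0) are skipped in the means via `nanmean`. This is one way to handle absent classes.

```python
import numpy as np

def confusion_matrix(gt, pred, n_cl):
    """hist[i, j] = n_ij, the number of pixels of class i predicted as class j."""
    gt, pred = np.asarray(gt).ravel(), np.asarray(pred).ravel()
    valid = (gt >= 0) & (gt < n_cl)  # drop void / out-of-range ground truth
    idx = n_cl * gt[valid].astype(int) + pred[valid].astype(int)
    return np.bincount(idx, minlength=n_cl ** 2).reshape(n_cl, n_cl)

def segmentation_metrics(hist):
    n_ii = np.diag(hist).astype(float)
    t_i = hist.sum(axis=1)       # t_i = sum_j n_ij (ground-truth pixels of class i)
    pred_i = hist.sum(axis=0)    # sum_j n_ji (pixels predicted as class i)
    with np.errstate(divide="ignore", invalid="ignore"):
        acc_i = n_ii / t_i
        iu_i = n_ii / (t_i + pred_i - n_ii)
    return {
        "pixel_acc": n_ii.sum() / t_i.sum(),         # sum_i n_ii / sum_i t_i
        "mean_acc": np.nanmean(acc_i),               # (1/n_cl) sum_i n_ii / t_i
        "mean_iu": np.nanmean(iu_i),                 # (1/n_cl) sum_i IU_i
        "fw_iu": np.nansum(t_i * iu_i) / t_i.sum(),  # (sum_k t_k)^-1 sum_i t_i IU_i
    }
```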

PASCAL VOC. Table 3 gives the performance of our FCN-8s on the test sets of PASCAL VOC 2011 and 2012, and compares it to the previous state-of-the-art, SDS [16], and the well-known R-CNN [12]. We achieve the best results on mean IU by a relative margin of 20%. Inference time is reduced 114× (convnet only, ignoring proposals and refinement) or 286× (overall).

Table 3. Our fully convolutional net gives a 20% relative improvement over the state-of-the-art on the PASCAL VOC 2011 and 2012 test sets, and reduces inference time.

Table 4. Results on NYUDv2. RGBD is early-fusion of the RGB and depth channels at the input. HHA is the depth embedding of [14] as horizontal disparity, height above ground, and the angle of the local surface normal with the inferred gravity direction. RGB-HHA is the jointly trained late fusion model that sums RGB and HHA predictions.

NYUDv2 [30] is an RGB-D dataset collected using the Microsoft Kinect. It has 1449 RGB-D images, with pixelwise labels that have been coalesced into a 40-class semantic segmentation task by Gupta et al. [13]. We report results on the standard split of 795 training images and 654 testing images. (Note: all model selection is performed on PASCAL 2011 val.) Table 4 gives the performance of our model in several variations. First we train our unmodified coarse model (FCN-32s) on RGB images. To add depth information, we train on a model upgraded to take four-channel RGB-D input (early fusion). This provides little benefit, perhaps due to the difficulty of propagating meaningful gradients all the way through the model. Following the success of Gupta et al. [14], we try the three-dimensional HHA encoding of depth, training nets on just this information, as well as a “late fusion” of RGB and HHA where the predictions from both nets are summed at the final layer, and the resulting two-stream net is learned end-to-end. Finally we upgrade this late fusion net to a 16-stride version.
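
The difference between the early- and late-fusion variants can be sketched at the level of tensors. In this hypothetical sketch the two trunks are omitted. Early fusion stacks depth as a fourth input channel. Late fusion applies a separate 1×1 score layer to each stream's features and sums the 40-class score maps. Because the fused scores are a plain sum, the loss gradient with respect to the fused map reaches both streams unchanged, which is what lets the two-stream net be trained end-to-end.

```python
import numpy as np

def score_1x1(features, weight, bias):
    """1x1 convolution: (C, H, W) features -> (K, H, W) class scores."""
    return np.einsum("kc,chw->khw", weight, features) + bias[:, None, None]

def early_fusion_input(rgb, depth):
    """Early fusion: append depth as a fourth input channel, (3, H, W) + (H, W) -> (4, H, W)."""
    return np.concatenate([rgb, depth[None]], axis=0)

def late_fusion_scores(rgb_feats, hha_feats, rgb_head, hha_head):
    """Late fusion: each stream scores the classes separately; the score maps are summed."""
    return score_1x1(rgb_feats, *rgb_head) + score_1x1(hha_feats, *hha_head)
```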

SIFT Flow is a dataset of 2,688 images with pixel labels for 33 semantic categories (“bridge”, “mountain”, “sun”), as well as three geometric categories (“horizontal”, “vertical”, and “sky”). An FCN can naturally learn a joint representation that simultaneously predicts both types of labels. We learn a two-headed version of FCN-16s with semantic and geometric prediction layers and losses. The learned model performs as well on both tasks as two independently trained models, while learning and inference are essentially as fast as each independent model by itself. The results in Table 5, computed on the standard split into 2,488 training and 200 test images, show state-of-the-art performance on both tasks.
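
Here is a hypothetical sketch of the two-headed output. Both heads read the same shared feature map: one predicts the 33 semantic classes and the other the 3 geometric classes. Summing the two per-pixel cross-entropy losses with equal weight is an assumption; the text only says the net has semantic and geometric prediction layers and losses. With all-zero head weights every class is equally likely, so the loss is log 33 + log 3 = log 99.

```python
import numpy as np

def score_1x1(features, weight, bias):
    """1x1 convolution: (C, H, W) features -> (K, H, W) class scores."""
    return np.einsum("kc,chw->khw", weight, features) + bias[:, None, None]

def pixel_nll(scores, labels):
    """Mean softmax cross-entropy over pixels; scores (K, H, W), integer labels (H, W)."""
    shifted = scores - scores.max(axis=0, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    return float(-np.take_along_axis(log_probs, labels[None], axis=0).mean())

def two_head_loss(shared, sem_head, geo_head, sem_labels, geo_labels):
    """Shared features feed a semantic head and a geometric head; losses are summed (assumed)."""
    sem_scores = score_1x1(shared, *sem_head)   # 33 semantic classes
    geo_scores = score_1x1(shared, *geo_head)   # 3 geometric classes
    loss = pixel_nll(sem_scores, sem_labels) + pixel_nll(geo_scores, geo_labels)
    return loss, sem_scores.argmax(axis=0), geo_scores.argmax(axis=0)
```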

Table 5. Results on SIFT Flow with class segmentation (center) and geometric segmentation (right). Tighe [33] is a non-parametric transfer method. Tighe 1 is an exemplar SVM while 2 is SVM + MRF. Farabet is a multi-scale convnet trained on class-balanced samples (1) or natural frequency samples (2). Pinheiro is a multi-scale, recurrent convnet, denoted RCNN3 (o3). The metric for geometry is pixel accuracy.

Figure 6. Fully convolutional segmentation nets produce state-of-the-art performance on PASCAL. The left column shows the output of our highest performing net, FCN-8s. The second shows the segmentations produced by the previous state-of-the-art system by Hariharan et al. [16]. Notice the fine structures recovered (first row), ability to separate closely interacting objects (second row), and robustness to occluders (third row). The fourth row shows a failure case: the net sees lifejackets in a boat as people.

6. Conclusion

Fully convolutional networks are a rich class of models, of which modern classification convnets are a special case. Recognizing this, extending these classification nets to segmentation, and improving the architecture with multi-resolution layer combinations dramatically improves the state-of-the-art, while simultaneously simplifying and speeding up learning and inference.

Acknowledgements. This work was supported in part by DARPA’s MSEE and SMISC programs, NSF awards IIS-1427425, IIS-1212798, IIS-1116411, and the NSF GRFP, Toyota, and the Berkeley Vision and Learning Center. We gratefully acknowledge NVIDIA for GPU donation. We thank Bharath Hariharan and Saurabh Gupta for their advice and dataset tools. We thank Sergio Guadarrama for reproducing GoogLeNet in Caffe. We thank Jitendra Malik for his helpful comments. Thanks to Wei Liu for pointing out an issue with our SIFT Flow mean IU computation and an error in our frequency weighted mean IU formula.

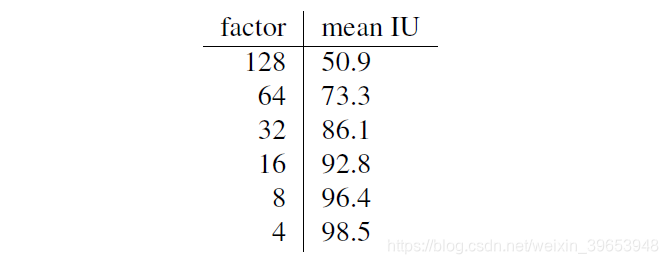

A. Upper Bounds on IU

In this paper, we have achieved good performance on the mean IU segmentation metric even with coarse semantic prediction. To better understand this metric and the limits of this approach with respect to it, we compute approximate upper bounds on performance with prediction at various scales. We do this by downsampling ground truth images and then upsampling them again to simulate the best results obtainable with a particular downsampling factor. The following table gives the mean IU on a subset of PASCAL 2011 val for various downsampling factors.

Pixel-perfect prediction is clearly not necessary to achieve mean IU well above state-of-the-art, and, conversely, mean IU is not a good measure of fine-scale accuracy.
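
The upper-bound experiment can be sketched as follows. The exact down- and upsampling method is not stated here, so this sketch assumes nearest-neighbour subsampling (keep every `factor`-th label) followed by nearest-neighbour upsampling. It computes mean IU only over classes present in either map. The helper names are hypothetical. On a toy 8×8 map with a 4×4 object, factor 2 is lossless because the object is aligned to the 2-pixel grid. Factor 4 shifts the object, and the bound drops to (0.6 + 1/7)/2 ≈ 0.37.

```python
import numpy as np

def mean_iu(gt, pred, n_cl):
    """Mean IU over the classes that appear in gt or pred."""
    ious = []
    for c in range(n_cl):
        inter = np.logical_and(gt == c, pred == c).sum()
        union = np.logical_or(gt == c, pred == c).sum()
        if union > 0:
            ious.append(inter / union)
    return float(np.mean(ious))

def down_up(label, factor):
    """Simulate prediction at a coarser scale: subsample labels, then nearest-neighbour upsample."""
    coarse = label[::factor, ::factor]
    up = np.repeat(np.repeat(coarse, factor, axis=0), factor, axis=1)
    return up[: label.shape[0], : label.shape[1]]

def iu_upper_bound(label, factor, n_cl):
    return mean_iu(label, down_up(label, factor), n_cl)

label = np.zeros((8, 8), dtype=int)
label[2:6, 2:6] = 1  # a 4x4 object aligned to the 2-pixel grid but not the 4-pixel grid
for f in (1, 2, 4):
    print(f, round(iu_upper_bound(label, f, 2), 3))
```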

B. More Results

We further evaluate our FCN for semantic segmentation.

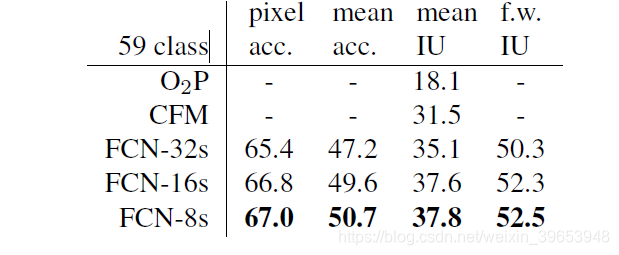

PASCAL-Context [26] provides whole scene annotations of PASCAL VOC 2010. While there are over 400 distinct classes, we follow the 59 class task defined by [26] that picks the most frequent classes. We train and evaluate on the training and val sets respectively. In Table 6, we compare to the joint object + stuff variation of Convolutional Feature Masking [3] which is the previous state-of-the-art on this task. FCN-8s scores 37.8 mean IU for a 20% relative improvement.

Changelog

The arXiv version of this paper is kept up-to-date with corrections and additional relevant material. The following gives a brief history of changes.

Table 6. Results on PASCAL-Context. CFM is the best result of [3] by convolutional feature masking and segment pursuit with the VGG net. O2P is the second order pooling method [1] as reported in the errata of [26]. The 59 class task includes the 59 most frequent classes while the 33 class task consists of an easier subset identified by [26].

v2 Add Appendix A giving upper bounds on mean IU and Appendix B with PASCAL-Context results. Correct PASCAL validation numbers (previously, some val images were included in train), SIFT Flow mean IU (which used an inappropriately strict metric), and an error in the frequency weighted mean IU formula. Add link to models and update timing numbers to reflect improved implementation (which is publicly available).
