Voice Wake-Up in Practice with TensorFlow


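Put simply, voice wake-up is a classification task: each sample is classified as the wake word or as something else (the wake word here is "hello, xiaogua"). The goal of this exercise is to classify a set of audio clips with a trained model. The work has three steps: data preprocessing, model building and training, and post-processing.

The training set contains a little over 11,000 audio clips and the test set a little over 4,000, all recorded by other people. All code in this post was run under Python 3.7.

I have only just started with TensorFlow and learned classification from the MNIST handwritten-digit example, so the way the network is built and the way parameters are passed in closely follow that code.

The overall pipeline follows the paper: Small-Footprint Keyword Spotting Using Deep Neural Networks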

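Contents

- Voice Wake-Up in Practice with TensorFlow
  - Data preprocessing (feature extraction)
  - Model building and training
    - Data loading
    - Model building
    - Model training
  - Post-processing
    - Smoothing and confidence computation
  - Complete code
  - Results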

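Data preprocessing (feature extraction)

- Pre-emphasis: compensates for spectral tilt by boosting the high-frequency band

- Framing: cuts the audio into short segments that are processed one at a time

- Windowing: tapers each frame towards its edges to reduce the spectral leakage (Gibbs-type ripples) caused by abrupt truncation, so that the frame behaves more like one period of a periodic signal

- Fast Fourier transform: converts the time-domain signal to the frequency domain

- Mel filter bank: models the frequency resolution of human hearing

- Frame splicing: concatenates several consecutive frames into one training (or test) sample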
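The first five steps above can be sketched in NumPy as follows. This is only an illustration and not the extractor that produced the data used here (the code in this post reads precomputed `.fbank` files). The 16 kHz sampling rate, 25 ms window, 512-point FFT, 0.97 pre-emphasis coefficient and all function names are assumptions; only the 40 filters per frame come from this post, and the 160-sample step is suggested by the `// 160` sample-to-frame conversion in the data-loading code.

```python
import numpy as np


def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fb = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):      # rising edge
            fb[m - 1, k] = (k - left) / (center - left)
        for k in range(center, right):     # falling edge
            fb[m - 1, k] = (right - k) / (right - center)
    return fb


def fbank(signal, sample_rate=16000, frame_len=400, frame_shift=160,
          n_fft=512, n_filters=40, preemph=0.97):
    """Log mel filter-bank energies, one row per frame (all defaults are assumed values)."""
    # 1. Pre-emphasis: y[n] = x[n] - a * x[n-1]
    emphasized = np.append(signal[0], signal[1:] - preemph * signal[:-1])
    # 2. Framing: overlapping windows of frame_len samples every frame_shift samples
    n_frames = 1 + (len(emphasized) - frame_len) // frame_shift
    idx = np.arange(frame_len)[None, :] + frame_shift * np.arange(n_frames)[:, None]
    frames = emphasized[idx]
    # 3. Windowing: taper both ends of each frame
    frames = frames * np.hamming(frame_len)
    # 4. FFT: power spectrum of each frame
    power = np.abs(np.fft.rfft(frames, n_fft)) ** 2 / n_fft
    # 5. Mel filter bank, then log compression
    energies = power @ mel_filterbank(n_filters, n_fft, sample_rate).T
    return np.log(np.maximum(energies, 1e-10))
```

With these assumed settings, one second of audio gives a feature matrix with 40 columns, which is the per-frame shape the splicing code below expects.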

'''
fbank_reader is listed below this block.
fbank is the extracted feature matrix; each frame has shape (40,).
Each frame is spliced with the 30 frames before it and the 10 frames after it.
If fewer than 30 frames precede the current frame, the 41 frames starting at the first frame are used.
If fewer than 10 frames follow the current frame, the 41 frames ending at the last frame are used.
If the whole region is shorter than 41 frames, the first frame is repeated at the front and the last frame at the back.
'''
import numpy as np

import fbank_reader


def frame_combine(frame, file_path, start, end):
    fbank = fbank_reader.HTKFeat_read(file_path).getall()
    if end - start + 1 < 41:
        # Region shorter than 41 frames: pad with copies of the edge frames.
        if frame - start <= 30 and end - frame <= 10:
            frame_to_combine = []
            front_rest = 30 - (frame - start)
            back_rest = 10 - (end - frame)
            for i in range(front_rest):
                frame_to_combine.append(fbank[start].tolist())
            for i in range(start, end + 1):
                frame_to_combine.append(fbank[i].tolist())
            for i in range(back_rest):
                frame_to_combine.append(fbank[end].tolist())
        elif end - frame >= 10:
            frame_to_combine = []
            front_rest = 30 - (frame - start)
            for i in range(front_rest):
                frame_to_combine.append(fbank[start].tolist())
            for i in range(start, frame + 11):
                frame_to_combine.append(fbank[i].tolist())
        else:
            frame_to_combine = []
            back_rest = 10 - (end - frame)
            for i in range(frame - 30, end + 1):
                frame_to_combine.append(fbank[i].tolist())
            for i in range(back_rest):
                frame_to_combine.append(fbank[end].tolist())
        combined = np.array(frame_to_combine).reshape(-1)
    else:
        # Region of at least 41 frames: shift the 41-frame window so it stays inside.
        if frame - start >= 30 and end - frame >= 10:
            frame_to_combine = fbank[frame - 30: frame + 11]
            combined = frame_to_combine.reshape(-1)
        elif frame - start < 30:
            frame_to_combine = fbank[start: start + 41]
            combined = frame_to_combine.reshape(-1)
        else:
            frame_to_combine = fbank[end - 40: end + 1]
            combined = frame_to_combine.reshape(-1)
    return combined.tolist()
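The splicing rule can also be written as a small index computation, which makes the edge cases easier to check. The helper below is a hypothetical sketch (the name `splice_indices` and its parameters are not part of the original code); it returns the 41 frame indices that `frame_combine` concatenates, without touching any file.

```python
def splice_indices(frame, start, end, left=30, right=10):
    """Indices of the frames spliced around `frame` inside the region [start, end]."""
    width = left + right + 1
    if end - start + 1 >= width:
        # Long region: shift the window so that it stays inside [start, end].
        lo = min(max(frame - left, start), end - width + 1)
        return list(range(lo, lo + width))
    # Short region: keep the window centred and repeat the edge frames.
    return [min(max(i, start), end) for i in range(frame - left, frame + right + 1)]
```

Given a feature matrix `fbank` that has been loaded once, `fbank[splice_indices(frame, start, end)].reshape(-1)` would give the spliced vector. Loading each file once in this way, instead of re-reading it for every frame, is a possible optimisation rather than something the original code does.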

# fbank_reader.py
# Copyright (c) 2007 Carnegie Mellon University
#
# You may copy and modify this freely under the same terms as
# Sphinx-III

"""Read HTK feature files.

This module reads the acoustic feature files used by HTK
"""

__author__ = "David Huggins-Daines <dhuggins@cs.cmu.edu>"
__version__ = "$Revision $"

from struct import unpack, pack

import numpy

LPC = 1
LPCREFC = 2
LPCEPSTRA = 3
LPCDELCEP = 4
IREFC = 5
MFCC = 6
FBANK = 7
MELSPEC = 8
USER = 9
DISCRETE = 10
PLP = 11

_E = 0o0000100  # has energy
_N = 0o0000200  # absolute energy suppressed
_D = 0o0000400  # has delta coefficients
_A = 0o0001000  # has acceleration (delta-delta) coefficients
_C = 0o0002000  # is compressed
_Z = 0o0004000  # has zero mean static coefficients
_K = 0o0010000  # has CRC checksum
_O = 0o0020000  # has 0th cepstral coefficient
_V = 0o0040000  # has VQ data
_T = 0o0100000  # has third differential coefficients


class HTKFeat_read(object):
    "Read HTK format feature files"

    def __init__(self, filename=None):
        # HTK files are big-endian; swap bytes on little-endian machines.
        self.swap = (unpack('=i', pack('>i', 42))[0] != 42)
        if filename is not None:
            self.open(filename)

    def __iter__(self):
        self.fh.seek(12, 0)
        return self

    def open(self, filename):
        self.filename = filename
        # To run in python2, change the "open" to "file"
        self.fh = open(filename, "rb")
        self.readheader()

    def readheader(self):
        self.fh.seek(0, 0)
        spam = self.fh.read(12)
        self.nSamples, self.sampPeriod, self.sampSize, self.parmKind = unpack(">IIHH", spam)
        # Get coefficients for compressed data
        if self.parmKind & _C:
            self.dtype = 'h'
            # Integer division: numpy.fromfile needs an integer count in Python 3.
            self.veclen = self.sampSize // 2
            # Parentheses are required because == binds more tightly than &.
            if (self.parmKind & 0x3f) == IREFC:
                self.A = 32767
                self.B = 0
            else:
                self.A = numpy.fromfile(self.fh, 'f', self.veclen)
                self.B = numpy.fromfile(self.fh, 'f', self.veclen)
                if self.swap:
                    self.A = self.A.byteswap()
                    self.B = self.B.byteswap()
        else:
            self.dtype = 'f'
            self.veclen = self.sampSize // 4
        self.hdrlen = self.fh.tell()

    def seek(self, idx):
        self.fh.seek(self.hdrlen + idx * self.sampSize, 0)

    def next(self):
        vec = numpy.fromfile(self.fh, self.dtype, self.veclen)
        if len(vec) == 0:
            raise StopIteration
        if self.swap:
            vec = vec.byteswap()
        # Uncompress data to floats if required
        if self.parmKind & _C:
            vec = (vec.astype('f') + self.B) / self.A
        return vec

    __next__ = next  # Python 3 iterator protocol

    def readvec(self):
        return self.next()

    def getall(self):
        self.seek(0)
        data = numpy.fromfile(self.fh, self.dtype)
        if self.parmKind & _K:  # Remove and ignore checksum
            data = data[:-1]
        data = data.reshape(len(data) // self.veclen, self.veclen)
        if self.swap:
            data = data.byteswap()
        # Uncompress data to floats if required
        if self.parmKind & _C:
            data = (data.astype('f') + self.B) / self.A
        return data
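To make the file layout that the reader relies on concrete, here is a small standard-library sketch that writes an uncompressed HTK-style feature file into memory and parses its 12-byte header. The helper names `write_htk` and `read_htk_header` are hypothetical, and the sample period of 100000 (in 100 ns units, so 10 ms) is an assumed value; the `">IIHH"` header layout, the `0x3f` mask and the `FBANK = 7` kind come from the module above.

```python
import io
import struct


def write_htk(stream, frames, samp_period=100000, parm_kind=7):
    """Write frames (lists of floats) as big-endian float32 behind a 12-byte header."""
    veclen = len(frames[0])
    # Header: nSamples (uint32), sampPeriod (uint32), sampSize in bytes (uint16), parmKind (uint16)
    stream.write(struct.pack(">IIHH", len(frames), samp_period, veclen * 4, parm_kind))
    for frame in frames:
        stream.write(struct.pack(">%df" % veclen, *frame))


def read_htk_header(stream):
    """Parse the header of an uncompressed HTK feature file."""
    n_samples, samp_period, samp_size, parm_kind = struct.unpack(">IIHH", stream.read(12))
    return {
        "n_samples": n_samples,
        "samp_period": samp_period,
        "samp_size": samp_size,
        "veclen": samp_size // 4,          # 4 bytes per float32 coefficient
        "base_kind": parm_kind & 0x3f,     # low 6 bits hold the basic parameter kind
    }
```

For a file of 3 frames with 40 coefficients each, the header reports a sample size of 160 bytes and the payload occupies 3 × 160 bytes after the header.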

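Model building and training

Data loading

The data are read from the given training and test file lists, and every frame is spliced with its context before training or testing. The two sets are used very differently. The training set is iterated over repeatedly and has to be shuffled at two levels: the order of the audio files, and the order of the spliced frames within them. The test set is evaluated only once; because post-processing works on the frames of each file in sequence, it must not be shuffled, and the position of each audio file within it has to be recorded. These differences are large enough that the two sets are implemented as two separate classes, TestSet and TrainSet: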

import os

import numpy as np

import fbank_reader


class TestSet(object):
    def __init__(self, exampls, labels, num_examples, fbank_end_frame):
        self._exampls = exampls
        self._labels = labels
        self._index_in_epochs = 0  # position reached by the previous next_batch() call
        self.num_examples = num_examples  # number of test samples
        self.fbank_end_frame = fbank_end_frame

    def next_batch(self, batch_size):
        start = self._index_in_epochs
        # The last batch is cut off at the end of the test set.
        self._index_in_epochs = min(start + batch_size, self.num_examples)
        end = self._index_in_epochs
        return self._exampls[start:end], self._labels[start:end]


class TrainSet(object):
    def __init__(self, examples_list, position_data):
        self.examples_list = examples_list
        self.position_data = position_data
        self.fbank_position = 0  # how far into the training list we have read
        self.index_in_epochs = 0  # position reached by the previous next_batch() call
        self.example = []
        self.labels = []
        self.num_examples = 0

    # Load and splice ten fbank files at a time; the file list is treated as a circular list.
    def read_train_set(self):
        self.example = []
        self.labels = []
        self.num_examples = 0
        step_length = 10
        start = self.fbank_position % len(self.examples_list)
        end = (self.fbank_position + step_length) % len(self.examples_list)
        if start < end:
            fbank_list = self.examples_list[start: end]
            self.fbank_position += step_length
        else:
            # End of the list reached: take the remainder, then reshuffle the files.
            fbank_list = self.examples_list[start: len(self.examples_list)]
            self.fbank_position = 0
            index = np.arange(len(self.examples_list))
            np.random.shuffle(index)
            self.examples_list = np.array(self.examples_list)[index]
        for example in fbank_list:
            if example == '':
                continue
            file_path = "E://aslp_wake_up_word_data/data/positive/train/" + example + ".fbank"
            if os.path.exists(file_path):
                start = self.position_data.find(example)
                end = self.position_data.find("positive", start + 1)
                if end != -1:
                    position_str = self.position_data[start + 15: end - 1]
                else:
                    position_str = self.position_data[start + 15: end]
                # start and end position of "hello" & start and end position of "xiao gua"
                keyword_position = position_str.split(" ")
                fbank = fbank_reader.HTKFeat_read(file_path).getall()
                length = fbank.shape[0]
                keyword_frame_position = []
                for i in range(4):
                    # Convert a sample position to a frame index (160 samples per frame).
                    frame_position = int(keyword_position[i]) // 160
                    if frame_position >= length:
                        frame_position = length - 1
                    keyword_frame_position.append(frame_position)
                print(example)
                # Label 0: frames of "hello"; label 1: frames of "xiao gua".
                for frame in range(keyword_frame_position[0], keyword_frame_position[1] + 1):
                    self.example.append(frame_combine(frame, file_path, keyword_frame_position[0],
                                                      keyword_frame_position[1]))
                    self.labels.append(0)
                    self.num_examples += 1
                for frame in range(keyword_frame_position[2], keyword_frame_position[3] + 1):
                    self.example.append(frame_combine(frame, file_path, keyword_frame_position[2],
                                                      keyword_frame_position[3]))
                    self.labels.append(1)
                    self.num_examples += 1
            else:
                # Label 2: every frame of a negative (non-wake-word) file.
                file_path = "E://aslp_wake_up_word_data/data/negative/train/" + example + ".fbank"
                fbank = fbank_reader.HTKFeat_read(file_path).getall()
                frame_number = fbank.shape[0]
                print(example)
                for frame in range(frame_number):
                    self.example.append(frame_combine(frame, file_path, 0, frame_number - 1))
                    self.labels.append(2)
                    self.num_examples += 1

    def next_batch(self, batch_size):
        start = self.index_in_epochs
        if start == 0:
            # Load the next group of files and shuffle their spliced frames.
            self.read_train_set()
            index0 = np.arange(self.num_examples)
            np.random.shuffle(index0)
            self.example = np.array(self.example)[index0]
            self.labels = np.array(self.labels)[index0]
        if start + batch_size >= self.num_examples:
            # Last (possibly short) batch of this group; >= avoids returning an empty batch.
            examples_rest_part = self.example[start: self.num_examples]
            labels_rest_part = self.labels[start: self.num_examples]
            self.index_in_epochs = 0
            return examples_rest_part, labels_rest_part
        else:
            self.index_in_epochs += batch_size
            end = self.index_in_epochs
            return self.example[start:end], self.labels[start:end]

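The two-level shuffling used for the training set (shuffle the files, then shuffle the frames of each small group of files) can be isolated in a short generator. This is a simplified sketch under assumptions: `two_level_batches` and the `load_frames` callback are hypothetical names, and the generator makes a single pass over the file list instead of cycling indefinitely as TrainSet does.

```python
import random


def two_level_batches(files, load_frames, group_size=10, batch_size=100, seed=0):
    """Yield mini-batches: files are shuffled first, then frames within each group of files."""
    rng = random.Random(seed)
    files = list(files)
    rng.shuffle(files)                          # level 1: order of the audio files
    for g in range(0, len(files), group_size):
        samples = []
        for name in files[g:g + group_size]:
            samples.extend(load_frames(name))   # spliced frames of one file
        rng.shuffle(samples)                    # level 2: order of the frames in the group
        for b in range(0, len(samples), batch_size):
            yield samples[b:b + batch_size]     # the last batch of a group may be short
```

Loading only a few files at a time keeps memory use bounded, at the cost that frames in a batch always come from the same small group of files.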
Model building

The network built here is a fully connected neural network with 3 hidden layers of 128 units each (3×128):

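# tensor_build.py
# Written against the TensorFlow 1.x API (tf.contrib is not available in TensorFlow 2.x).
import tensorflow as tf

NUM_CLASSES = 3


def inference(speeches, hidden1_units, hidden2_units, hidden3_units, keep_prob=1.0):
    # Build the network. tf.nn.dropout returns a new tensor, so its result must be assigned.
    # With the default keep_prob=1.0 dropout is a no-op; to train with dropout, pass a
    # placeholder that is fed 0.9 during training and 1.0 during evaluation.
    hidden1 = tf.contrib.layers.fully_connected(speeches, hidden1_units)
    hidden1 = tf.nn.dropout(hidden1, keep_prob=keep_prob)
    hidden2 = tf.contrib.layers.fully_connected(hidden1, hidden2_units)
    hidden2 = tf.nn.dropout(hidden2, keep_prob=keep_prob)
    hidden3 = tf.contrib.layers.fully_connected(hidden2, hidden3_units)
    hidden3 = tf.nn.dropout(hidden3, keep_prob=keep_prob)
    # fully_connected applies ReLU by default; the logits layer has to stay linear.
    output_logits = tf.contrib.layers.fully_connected(hidden3, NUM_CLASSES, activation_fn=None)
    return output_logits


def loss(logits, labels):
    # Cross-entropy is used as the loss function.
    labels = tf.cast(labels, tf.int64)
    return tf.losses.sparse_softmax_cross_entropy(labels=labels, logits=logits)


def training(loss, learning_rate):
    tf.summary.scalar('loss', loss)
    optimizer = tf.train.AdamOptimizer(learning_rate)
    global_step = tf.Variable(0, name='global_step', trainable=False)
    train_op = optimizer.minimize(loss, global_step=global_step)
    return train_op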
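To see what this architecture computes independently of TensorFlow, the forward pass of a 1640-128-128-128-3 network can be written in a few lines of NumPy. This is an illustrative sketch, not the training code: the He-style initialisation, the function names and the inverted-dropout formulation are assumptions. The input size of 1640 is 41 spliced frames times 40 filter-bank values.

```python
import numpy as np

LAYER_SIZES = [41 * 40, 128, 128, 128, 3]  # input, three hidden layers, three classes


def init_params(rng):
    """Random weights and zero biases for every layer (assumed initialisation)."""
    return [(rng.standard_normal((n_in, n_out)) * np.sqrt(2.0 / n_in), np.zeros(n_out))
            for n_in, n_out in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:])]


def forward(x, params, keep_prob=1.0, rng=None):
    """ReLU hidden layers with optional inverted dropout, followed by linear logits."""
    h = x
    for w, b in params[:-1]:
        h = np.maximum(h @ w + b, 0.0)
        if keep_prob < 1.0:
            mask = rng.random(h.shape) < keep_prob
            h = h * mask / keep_prob   # rescale so the expected activation is unchanged
    w, b = params[-1]
    return h @ w + b                   # no activation on the output layer


def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)   # subtract the max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def count_params(params):
    return sum(w.size + b.size for w, b in params)
```

With these layer sizes the network has 243,459 trainable parameters, most of them (about 210,000) in the first layer.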

Model training

def run_training():
    train, test = input_data.read_data_sets()
    with tf.Graph().as_default():
        speeches_placeholder, labels_placeholder = placeholder_inputs()
        logits = tensor_build.inference(speeches_placeholder, FLAGS.hidden1, FLAGS.hidden2, FLAGS.hidden3)
        outputs = tf.nn.softmax(logits=logits)
        loss = tensor_build.loss(logits, labels_placeholder)
        train_op = tensor_build.training(loss, FLAGS.learning_rate)
        summary = tf.summary.merge_all()
        init = tf.global_variables_initializer()
        saver = tf.train.Saver()
        sess = tf.Session()
        summary_writer = tf.summary.FileWriter(FLAGS.log_dir, sess.graph)
        sess.run(init)
        test_false_alarm_rate_list = []
        test_false_reject_rate_list = []
        loss_list = []
        total_loss = []
        for step in range(FLAGS.max_steps):
            feed_dict = fill_feed_dict(train, speeches_placeholder, labels_placeholder)
            _, loss_value = sess.run([train_op, loss], feed_dict=feed_dict)
            loss_list.append(loss_value)
            # Record the mean loss over each block of 25897 steps.
            if step % 25897 == 0 and step != 0:
                total_loss.append(sum(loss_list[step - 25897: step]) / 25897)
            if step % 100 == 0:
                summary_str = sess.run(summary, feed_dict=feed_dict)
                summary_writer.add_summary(summary_str, step)
                summary_writer.flush()
            if step + 1 == FLAGS.max_steps:
                checkpoint_file = os.path.join(FLAGS.log_dir, 'model.ckpt')
                saver.save(sess, checkpoint_file, global_step=step)
                # The rest can be ignored for now: it runs the test set and evaluates the
                # result by computing the false alarm rate and the false reject rate.
                test_false_alarm_rate_list, test_false_reject_rate_list = do_eval(
                    sess, speeches_placeholder, labels_placeholder, test, outputs)
        print(total_loss)
        # Plot the ROC curve
        plot(test_false_alarm_rate_list, test_false_reject_rate_list)
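The quantity minimised during training is the softmax cross-entropy between the logits and the integer frame labels, averaged over the batch. The pure-Python function below is a sketch of that computation for illustration; its name mirrors the TensorFlow op, but it is a stand-alone re-implementation with assumed batch-mean reduction, not the library code.

```python
import math


def sparse_softmax_cross_entropy(logits, labels):
    """Mean of -log softmax(logits)[label] over a batch of rows."""
    total = 0.0
    for row, label in zip(logits, labels):
        m = max(row)
        # log-sum-exp with the maximum subtracted for numerical stability
        log_sum = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum - row[label]
    return total / len(labels)
```

For a frame whose three logits are equal, the loss is log 3 ≈ 1.0986, which is the value to expect at the very start of training if the network outputs are near zero.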

Post-processing

Smoothing and confidence computation

The smoothing formula is as follows (the smoothed posterior of label i at frame j, with frames numbered from 1):

$$
p'_{ij} =
\begin{cases}
\dfrac{1}{j}\sum\limits_{k=1}^{j} p_{ik}, & \text{if } j \leq 30 \\[3ex]
\dfrac{1}{30}\sum\limits_{k=j-29}^{j} p_{ik}, & \text{if } j > 30
\end{cases}
$$
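The smoothing step is a moving average over at most 30 frames, which can be computed with a running sum. The function below is a minimal pure-Python sketch; the name `smooth_posteriors` and the list-of-lists input format (one row of class probabilities per frame) are assumptions made for the example.

```python
def smooth_posteriors(posteriors, window=30):
    """Average each label's posterior over the current frame and up to window-1 earlier frames."""
    smoothed = []
    running = [0.0] * len(posteriors[0])
    for j, frame in enumerate(posteriors):
        running = [r + p for r, p in zip(running, frame)]
        if j >= window:
            # Drop the frame that has just left the window.
            running = [r - p for r, p in zip(running, posteriors[j - window])]
        count = min(j + 1, window)
        smoothed.append([r / count for r in running])
    return smoothed
```

For example, with a window of 3 the single-label sequence 1, 2, 3, 4, 5 is smoothed to 1, 1.5, 2, 3, 4.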

The confidence formula is as follows (the confidence at frame j, where labels 1 and 2 are the two keyword labels and p' is the smoothed posterior):

$$
\text{confidence}_j =
\begin{cases}
\sqrt{\prod\limits_{i=1}^{2} \max\limits_{1 \leq k \leq j} p'_{ik}}, & \text{if } j \leq 100 \\[4ex]
\sqrt{\prod\limits_{i=1}^{2} \max\limits_{j-99 \leq k \leq j} p'_{ik}}, & \text{if } j > 100
\end{cases}
$$

The confidence of a whole audio file is the maximum of its per-frame confidences. Comparing it with the wake-up threshold gives the decision whether to wake up.
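As a compact illustration of this rule, the sketch below computes the file-level confidence from a list of smoothed per-frame posteriors and compares it with a threshold. The names `file_confidence` and `is_wake_up` are hypothetical, and the column order (keyword labels in columns 0 and 1, filler in column 2) is assumed to match the label numbering used in the training code.

```python
import math


def file_confidence(smoothed, window=100, keyword_labels=(0, 1)):
    """Maximum over frames of sqrt(product of per-label maxima within the trailing window)."""
    best = 0.0
    for j in range(len(smoothed)):
        lo = max(0, j - window + 1)        # window covers at most `window` frames
        product = 1.0
        for i in keyword_labels:
            product *= max(frame[i] for frame in smoothed[lo:j + 1])
        best = max(best, math.sqrt(product))
    return best


def is_wake_up(smoothed, threshold):
    return file_confidence(smoothed) >= threshold
```

Because the maxima of the two keyword labels may come from different frames inside the window, the confidence is high only when both "hello" and "xiaogua" score well within the same 100-frame span.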

import math

import numpy as np


def find_max(smooth_probability):
    # Largest smoothed probability of each of the two keyword labels in the window.
    length = len(smooth_probability)
    max1 = smooth_probability[0][0]
    max2 = smooth_probability[0][1]
    for i in range(length):
        if smooth_probability[i][0] > max1:
            max1 = smooth_probability[i][0]
        if smooth_probability[i][1] > max2:
            max2 = smooth_probability[i][1]
    return max1, max2


def posterior_handling(probability, fbank_end_frame):
    confidence = []
    for i in range(len(fbank_end_frame)):
        # fbank_end_frame holds cumulative frame counts, so file i spans [begin, fbank_end_frame[i]).
        begin = 0 if i == 0 else fbank_end_frame[i - 1]
        fbank_probability = probability[begin: fbank_end_frame[i]]
        smooth_probability = []
        frame_confidence = []
        for j in range(len(fbank_probability)):
            # Average over the current frame and up to 29 preceding frames.
            if j + 1 <= 30:
                smooth_probability.append(
                    np.sum((np.array(fbank_probability[0: j + 1]) / (j + 1)), axis=0).tolist())
            else:
                smooth_probability.append(
                    np.sum((np.array(fbank_probability[j - 29: j + 1]) / 30), axis=0).tolist())
        for j in range(len(fbank_probability)):
            # Maxima over the current frame and up to 99 preceding frames.
            if j + 1 <= 100:
                max1, max2 = find_max(smooth_probability[0: j + 1])
            else:
                max1, max2 = find_max(smooth_probability[j - 99: j + 1])
            frame_confidence.append(max1 * max2)
        confidence.append(math.sqrt(max(frame_confidence)))
    return confidence

Complete code

tensor_build.py and fbank_reader.py are the same as the listings given earlier, in the model building section and the data preprocessing section respectively.

# input_data.py
import os

import numpy as np

import fbank_reader


# Test-set class
class TestSet(object):
    def __init__(self, exampls, labels, num_examples, fbank_end_frame):
        self._exampls = exampls
        self._labels = labels
        self._index_in_epochs = 0  # position reached by the previous next_batch() call
        self.num_examples = num_examples  # number of test samples
        self.fbank_end_frame = fbank_end_frame

    def next_batch(self, batch_size):
        start = self._index_in_epochs
        # The last batch is cut off at the end of the test set.
        self._index_in_epochs = min(start + batch_size, self.num_examples)
        end = self._index_in_epochs
        return self._exampls[start:end], self._labels[start:end]


# Training-set class

class TrainSet(object):
    def __init__(self, examples_list, position_data):
        self.examples_list = examples_list
        self.position_data = position_data
        self.fbank_position = 0  # how far into the training list we have read
        self.index_in_epochs = 0  # position reached by the previous next_batch() call
        self.example = []
        self.labels = []
        self.num_examples = 0

    # Load and splice ten fbank files at a time; the file list is treated as a circular list.
    def read_train_set(self):
        self.example = []
        self.labels = []
        self.num_examples = 0
        step_length = 10
        start = self.fbank_position % len(self.examples_list)
        end = (self.fbank_position + step_length) % len(self.examples_list)
        if start < end:
            fbank_list = self.examples_list[start: end]
            self.fbank_position += step_length
        else:
            # End of the list reached: take the remainder, then reshuffle the files.
            fbank_list = self.examples_list[start: len(self.examples_list)]
            self.fbank_position = 0
            index = np.arange(len(self.examples_list))
            np.random.shuffle(index)
            self.examples_list = np.array(self.examples_list)[index]
        for example in fbank_list:
            if example == '':
                continue
            file_path = "E://aslp_wake_up_word_data/data/positive/train/" + example + ".fbank"
            if os.path.exists(file_path):
                start = self.position_data.find(example)
                end = self.position_data.find("positive", start + 1)
                if end != -1:
                    position_str = self.position_data[start + 15: end - 1]
                else:
                    position_str = self.position_data[start + 15: end]
                # start and end position of "hello" & start and end position of "xiao gua"
                keyword_position = position_str.split(" ")
                fbank = fbank_reader.HTKFeat_read(file_path).getall()
                length = fbank.shape[0]
                keyword_frame_position = []
                for i in range(4):
                    # Convert a sample position to a frame index (160 samples per frame).
                    frame_position = int(keyword_position[i]) // 160
                    if frame_position >= length:
                        frame_position = length - 1
                    keyword_frame_position.append(frame_position)
                print(example)
                # Label 0: frames of "hello"; label 1: frames of "xiao gua".
                for frame in range(keyword_frame_position[0], keyword_frame_position[1] + 1):
                    self.example.append(frame_combine(frame, file_path, keyword_frame_position[0],
                                                      keyword_frame_position[1]))
                    self.labels.append(0)
                    self.num_examples += 1
                for frame in range(keyword_frame_position[2], keyword_frame_position[3] + 1):
                    self.example.append(frame_combine(frame, file_path, keyword_frame_position[2],
                                                      keyword_frame_position[3]))
                    self.labels.append(1)
                    self.num_examples += 1
            else:
                # Label 2: every frame of a negative (non-wake-word) file.
                file_path = "E://aslp_wake_up_word_data/data/negative/train/" + example + ".fbank"
                fbank = fbank_reader.HTKFeat_read(file_path).getall()
                frame_number = fbank.shape[0]
                print(example)
                for frame in range(frame_number):
                    self.example.append(frame_combine(frame, file_path, 0, frame_number - 1))
                    self.labels.append(2)
                    self.num_examples += 1

    def next_batch(self, batch_size):
        start = self.index_in_epochs
        if start == 0:
            # Load the next group of files and shuffle their spliced frames.
            self.read_train_set()
            index0 = np.arange(self.num_examples)
            np.random.shuffle(index0)
            self.example = np.array(self.example)[index0]
            self.labels = np.array(self.labels)[index0]
        if start + batch_size >= self.num_examples:
            # Last (possibly short) batch of this group; >= avoids returning an empty batch.
            examples_rest_part = self.example[start: self.num_examples]
            labels_rest_part = self.labels[start: self.num_examples]
            self.index_in_epochs = 0
            return examples_rest_part, labels_rest_part
        else:
            self.index_in_epochs += batch_size
            end = self.index_in_epochs
            return self.example[start:end], self.labels[start:end]


# Frame splicing

def frame_combine(frame, file_path, start, end):
    fbank = fbank_reader.HTKFeat_read(file_path).getall()
    if end - start + 1 < 41:
        # Region shorter than 41 frames: pad with copies of the edge frames.
        if frame - start <= 30 and end - frame <= 10:
            frame_to_combine = []
            front_rest = 30 - (frame - start)
            back_rest = 10 - (end - frame)
            for i in range(front_rest):
                frame_to_combine.append(fbank[start].tolist())
            for i in range(start, end + 1):
                frame_to_combine.append(fbank[i].tolist())
            for i in range(back_rest):
                frame_to_combine.append(fbank[end].tolist())
        elif end - frame >= 10:
            frame_to_combine = []
            front_rest = 30 - (frame - start)
            for i in range(front_rest):
                frame_to_combine.append(fbank[start].tolist())
            for i in range(start, frame + 11):
                frame_to_combine.append(fbank[i].tolist())
        else:
            frame_to_combine = []
            back_rest = 10 - (end - frame)
            for i in range(frame - 30, end + 1):
                frame_to_combine.append(fbank[i].tolist())
            for i in range(back_rest):
                frame_to_combine.append(fbank[end].tolist())
        combined = np.array(frame_to_combine).reshape(-1)
    else:
        # Region of at least 41 frames: shift the 41-frame window so it stays inside.
        if frame - start >= 30 and end - frame >= 10:
            frame_to_combine = fbank[frame - 30: frame + 11]
            combined = frame_to_combine.reshape(-1)
        elif frame - start < 30:
            frame_to_combine = fbank[start: start + 41]
            combined = frame_to_combine.reshape(-1)
        else:
            frame_to_combine = fbank[end - 40: end + 1]
            combined = frame_to_combine.reshape(-1)
    return combined.tolist()


# Build the data sets from which successive batches can be drawn

def read_data_sets():
    with open("E://aslp_wake_up_word_data/positiveKeywordPosition.txt", "r") as f:
        position_data = f.read()
    with open("E://aslp_wake_up_word_data/train_positive.list", "r") as f:
        train_positive_list = f.read().split('\n')
    with open("E://aslp_wake_up_word_data/test_positive.list", "r") as f:
        test_positive_list = f.read().split('\n')
    with open("E://aslp_wake_up_word_data/train_negative.list", "r") as f:
        train_negative_list = f.read().split('\n')
    with open("E://aslp_wake_up_word_data/test_negative.list", "r") as f:
        test_negative_list = f.read().split('\n')
    test_examples = []
    test_labels = []
    test_length = []
    test_num = 0
    for example in test_positive_list:
        if example == '':
            continue
        start = position_data.find(example)
        end = position_data.find("positive", start + 1)
        if end != -1:
            position_str = position_data[start + 15: end - 1]
        else:
            position_str = position_data[start + 15: end]
        # start and end position of "hello" & start and end position of "xiao gua"
        keyword_position = position_str.split(" ")
        file_path = "E://aslp_wake_up_word_data/data/positive/test/" + example + ".fbank"
        fbank = fbank_reader.HTKFeat_read(file_path).getall()
        length = fbank.shape[0]
        keyword_frame_position = []
        for i in range(4):
            # Convert a sample position to a frame index (160 samples per frame).
            frame_position = int(keyword_position[i]) // 160
            if frame_position >= length:
                frame_position = length - 1
            keyword_frame_position.append(frame_position)
        test_length.append(keyword_frame_position[1] - keyword_frame_position[0] + 1 +
                           keyword_frame_position[3] - keyword_frame_position[2] + 1)
        print(example)
        for frame in range(keyword_frame_position[0], keyword_frame_position[1] + 1):
            test_examples.append(frame_combine(frame, file_path, keyword_frame_position[0],
                                               keyword_frame_position[1]))
            test_labels.append(0)
            test_num += 1
        for frame in range(keyword_frame_position[2], keyword_frame_position[3] + 1):
            test_examples.append(frame_combine(frame, file_path, keyword_frame_position[2],
                                               keyword_frame_position[3]))
            test_labels.append(1)
            test_num += 1
    for example in test_negative_list:
        if example == '':
            continue
        file_path = "E://aslp_wake_up_word_data/data/negative/test/" + example + ".fbank"
        fbank = fbank_reader.HTKFeat_read(file_path).getall()
        frame_number = fbank.shape[0]
        test_length.append(frame_number)
        print(example)
        for frame in range(frame_number):
            test_examples.append(frame_combine(frame, file_path, 0, frame_number - 1))
            test_labels.append(2)
            test_num += 1
    # Cumulative frame counts: fbank_end_frame[i] is where file i ends in the test set.
    fbank_end_frame = []
    for i in range(len(test_length)):
        fbank_end_frame.append(sum(test_length[0: i + 1]))
    train_list = train_positive_list + train_negative_list
    train = TrainSet(train_list, position_data)
    test = TestSet(test_examples, test_labels, test_num, fbank_end_frame)
    return train, test


# main.py

import argparse
import math
import os
import sys

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf

import input_data
import tensor_build

FLAGS = None


# Plots the ROC curve
def plot(false_alarm_rate_list, false_reject_rate_list):
    plt.figure(figsize=(8, 4))
    plt.plot(false_alarm_rate_list, false_reject_rate_list)
    plt.xlabel('false_alarm_rate')
    plt.ylabel('false_reject_rate')
    plt.title('ROC')
    plt.show()


def placeholder_inputs():
    # 1640 = 41 spliced frames x 40 filter-bank values per frame
    speeches_placeholder = tf.placeholder(tf.float32, shape=(None, 1640))
    labels_placeholder = tf.placeholder(tf.int32, shape=(None,))
    return speeches_placeholder, labels_placeholder


# Fills the placeholders with the next batch
def fill_feed_dict(data_set, examples_pl, labels_pl):
    examples_feed, labels_feed = data_set.next_batch(FLAGS.batch_size)
    feed_dict = {
        examples_pl: examples_feed,
        labels_pl: labels_feed,
    }
    return feed_dict


def find_max(smooth_probability):
    # Largest smoothed probability of each of the two keyword labels in the window.
    length = len(smooth_probability)
    max1 = smooth_probability[0][0]
    max2 = smooth_probability[0][1]
    for i in range(length):
        if smooth_probability[i][0] > max1:
            max1 = smooth_probability[i][0]
        if smooth_probability[i][1] > max2:
            max2 = smooth_probability[i][1]
    return max1, max2


# Post-processing of the network outputs

def posterior_handling(probability, fbank_end_frame):
    confidence = []
    for i in range(len(fbank_end_frame)):
        # fbank_end_frame holds cumulative frame counts, so file i spans [begin, fbank_end_frame[i]).
        begin = 0 if i == 0 else fbank_end_frame[i - 1]
        fbank_probability = probability[begin: fbank_end_frame[i]]
        smooth_probability = []
        frame_confidence = []
        for j in range(len(fbank_probability)):
            # Average over the current frame and up to 29 preceding frames.
            if j + 1 <= 30:
                smooth_probability.append(
                    np.sum((np.array(fbank_probability[0: j + 1]) / (j + 1)), axis=0).tolist())
            else:
                smooth_probability.append(
                    np.sum((np.array(fbank_probability[j - 29: j + 1]) / 30), axis=0).tolist())
        for j in range(len(fbank_probability)):
            # Maxima over the current frame and up to 99 preceding frames.
            if j + 1 <= 100:
                max1, max2 = find_max(smooth_probability[0: j + 1])
            else:
                max1, max2 = find_max(smooth_probability[j - 99: j + 1])
            frame_confidence.append(max1 * max2)
        confidence.append(math.sqrt(max(frame_confidence)))
    return confidence


# Computes the false alarm rate and false reject rate at different wake-up thresholds as evaluation metrics

def do_eval(sess, speeches_placeholder, labels_placeholder, data_set, outputs):
    threshold_part = 10000
    # Round up so that the last partial batch is included and no empty batch is fed.
    num_batches = (data_set.num_examples + FLAGS.batch_size - 1) // FLAGS.batch_size
    probability = []
    label = []
    false_alarm_rate_list = []
    false_reject_rate_list = []
    for step in range(num_batches):
        feed_dict = fill_feed_dict(data_set, speeches_placeholder, labels_placeholder)
        result_to_compare = sess.run([outputs, labels_placeholder], feed_dict=feed_dict)
        probability.extend(result_to_compare[0].tolist())
        label.extend(result_to_compare[1].tolist())
    fbank_end_frame = data_set.fbank_end_frame
    confidence = posterior_handling(probability, fbank_end_frame)
    # A file is negative when its first frame carries label 2.
    is_negative = []
    for j in range(len(confidence)):
        first_frame = 0 if j == 0 else fbank_end_frame[j - 1]
        is_negative.append(label[first_frame] == 2)
    for i in range(1, threshold_part):
        threshold = float(i) / threshold_part
        true_alarm = true_reject = false_reject = false_alarm = 0
        for j in range(len(confidence)):
            if confidence[j] < threshold:
                if is_negative[j]:
                    true_reject += 1
                else:
                    false_reject += 1
            else:
                if is_negative[j]:
                    false_alarm += 1
                else:
                    true_alarm += 1
        if false_alarm + true_reject == 0 or false_reject + true_alarm == 0:
            continue
        # False alarm rate: share of negative files that trigger a wake-up.
        false_alarm_rate = float(false_alarm) / (false_alarm + true_reject)
        # False reject rate: share of positive files that fail to trigger a wake-up.
        false_reject_rate = float(false_reject) / (false_reject + true_alarm)
        false_alarm_rate_list.append(false_alarm_rate)
        false_reject_rate_list.append(false_reject_rate)
    return false_alarm_rate_list, false_reject_rate_list


def run_training():
    train, test = input_data.read_data_sets()
    with tf.Graph().as_default():
        speeches_placeholder, labels_placeholder = placeholder_inputs()
        logits = tensor_build.inference(speeches_placeholder, FLAGS.hidden1, FLAGS.hidden2, FLAGS.hidden3)
        outputs = tf.nn.softmax(logits=logits)
        loss = tensor_build.loss(logits, labels_placeholder)
        train_op = tensor_build.training(loss, FLAGS.learning_rate)
        summary = tf.summary.merge_all()
        init = tf.global_variables_initializer()
        saver = tf.train.Saver()
        sess = tf.Session()
        summary_writer = tf.summary.FileWriter(FLAGS.log_dir, sess.graph)
        sess.run(init)
        test_false_alarm_rate_list = []
        test_false_reject_rate_list = []
        loss_list = []
        total_loss = []
        for step in range(FLAGS.max_steps):
            feed_dict = fill_feed_dict(train, speeches_placeholder, labels_placeholder)
            _, loss_value = sess.run([train_op, loss], feed_dict=feed_dict)
            loss_list.append(loss_value)
            # Record the mean loss over each block of 25897 steps.
            if step % 25897 == 0 and step != 0:
                total_loss.append(sum(loss_list[step - 25897: step]) / 25897)
            if step % 300 == 0:
                summary_str = sess.run(summary, feed_dict=feed_dict)
                summary_writer.add_summary(summary_str, step)
                summary_writer.flush()
            if step + 1 == FLAGS.max_steps:
                checkpoint_file = os.path.join(FLAGS.log_dir, 'model.ckpt')
                saver.save(sess, checkpoint_file, global_step=step)
                test_false_alarm_rate_list, test_false_reject_rate_list = do_eval(
                    sess, speeches_placeholder, labels_placeholder, test, outputs)
        print(total_loss)
        plot(test_false_alarm_rate_list, test_false_reject_rate_list)


def main(_):
    if tf.gfile.Exists(FLAGS.log_dir):
        tf.gfile.DeleteRecursively(FLAGS.log_dir)
    tf.gfile.MakeDirs(FLAGS.log_dir)
    run_training()


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--learning_rate', type=float, default=0.001,
                        help='Initial learning rate.')
    parser.add_argument('--max_steps', type=int, default=78000,
                        help='Number of steps to run trainer.')
    parser.add_argument('--hidden1', type=int, default=128,
                        help='Number of units in hidden layer 1.')
    parser.add_argument('--hidden2', type=int, default=128,
                        help='Number of units in hidden layer 2.')
    parser.add_argument('--hidden3', type=int, default=128,
                        help='Number of units in hidden layer 3.')
    parser.add_argument('--batch_size', type=int, default=100,
                        help='Batch size.')
    parser.add_argument('--log_dir', type=str,
                        default=os.path.join(os.getenv('TEST_TMPDIR', 'E:\\'),
                                             'wake_up/logs/fully_connected_feed_lyh'),
                        help='Directory to put the log data.')
    FLAGS, unparsed = parser.parse_known_args()
    tf.app.run(main=main, argv=[sys.argv[0]] + unparsed)

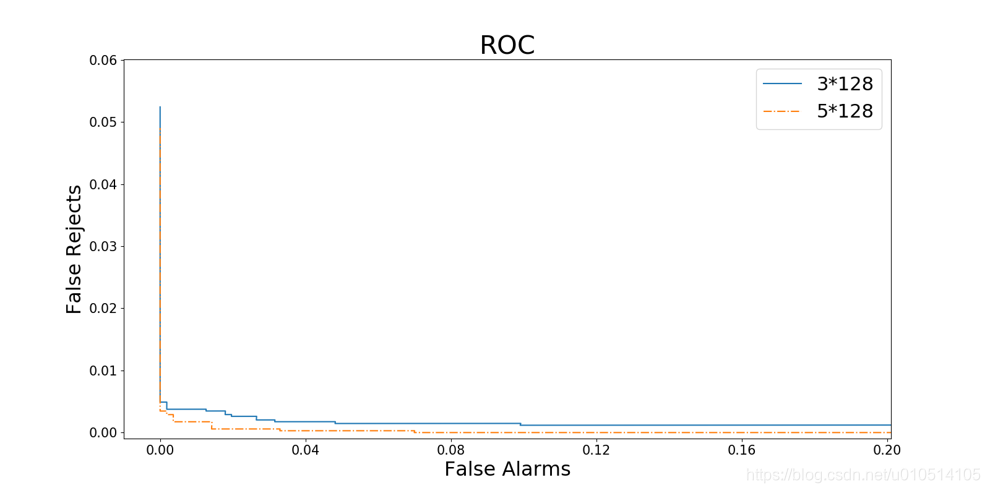

实验结果

评估指标用的ROC曲线,分别以误唤醒率与误拒绝率为横纵坐标(对比了3×128与5×128):
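For reference, the two rates on the axes can be computed from per-file confidences as in the sketch below, which uses the conventional definitions: the false alarm rate is the share of negative files whose confidence reaches the threshold, and the false reject rate is the share of positive files whose confidence stays below it. The function name and the input format are assumptions made for this example.

```python
def far_frr_curve(confidences, is_positive, thresholds):
    """Return a list of (false_alarm_rate, false_reject_rate) pairs, one per threshold."""
    n_pos = sum(1 for pos in is_positive if pos)
    n_neg = len(is_positive) - n_pos
    curve = []
    for t in thresholds:
        # A negative file that reaches the threshold is a false alarm.
        false_alarm = sum(1 for c, pos in zip(confidences, is_positive) if c >= t and not pos)
        # A positive file that stays below the threshold is a false reject.
        false_reject = sum(1 for c, pos in zip(confidences, is_positive) if c < t and pos)
        curve.append((false_alarm / n_neg, false_reject / n_pos))
    return curve
```

Raising the threshold moves along the curve towards fewer false alarms and more false rejects, so the operating threshold is a trade-off between the two.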


2024/4/25 8:42:11

最新文章

- java JMH 学习

JMH 是什么? JMH(Java Microbenchmark Harness)是一款专用于代码微基准测试的工具集,其主要聚焦于方法层面的基准测试,精度可达纳秒级别。此工具由 Oracle 内部负责实现 JIT 的杰出人士编写,他们对 JIT 及…...

2024/5/7 16:38:35 - 梯度消失和梯度爆炸的一些处理方法

在这里是记录一下梯度消失或梯度爆炸的一些处理技巧。全当学习总结了如有错误还请留言,在此感激不尽。 权重和梯度的更新公式如下: w w − η ⋅ ∇ w w w - \eta \cdot \nabla w ww−η⋅∇w 个人通俗的理解梯度消失就是网络模型在反向求导的时候出…...

2024/5/7 10:36:02 - 【APUE】网络socket编程温度采集智能存储与上报项目技术------多路复用

作者简介: 一个平凡而乐于分享的小比特,中南民族大学通信工程专业研究生在读,研究方向无线联邦学习 擅长领域:驱动开发,嵌入式软件开发,BSP开发 作者主页:一个平凡而乐于分享的小比特的个人主页…...

2024/5/6 2:28:08 - 【图论】知识点集合

边的类型 neighbors(邻居):两个顶点有一条共同边 loop:链接自身 link:两个顶点有一条边 parallel edges:两个顶点有两条及以上条边 无向图 必要条件:删掉顶点数一定大于等于剩下的顶点数 设无向图G<V,E>是…...

2024/5/5 8:44:55 - 416. 分割等和子集问题(动态规划)

题目 题解 class Solution:def canPartition(self, nums: List[int]) -> bool:# badcaseif not nums:return True# 不能被2整除if sum(nums) % 2 ! 0:return False# 状态定义:dp[i][j]表示当背包容量为j,用前i个物品是否正好可以将背包填满ÿ…...

2024/5/6 18:23:10 - 【Java】ExcelWriter自适应宽度工具类(支持中文)

工具类 import org.apache.poi.ss.usermodel.Cell; import org.apache.poi.ss.usermodel.CellType; import org.apache.poi.ss.usermodel.Row; import org.apache.poi.ss.usermodel.Sheet;/*** Excel工具类** author xiaoming* date 2023/11/17 10:40*/ public class ExcelUti…...

2024/5/6 18:40:38 - Spring cloud负载均衡@LoadBalanced LoadBalancerClient

LoadBalance vs Ribbon 由于Spring cloud2020之后移除了Ribbon,直接使用Spring Cloud LoadBalancer作为客户端负载均衡组件,我们讨论Spring负载均衡以Spring Cloud2020之后版本为主,学习Spring Cloud LoadBalance,暂不讨论Ribbon…...

2024/5/6 23:37:19 - TSINGSEE青犀AI智能分析+视频监控工业园区周界安全防范方案

一、背景需求分析 在工业产业园、化工园或生产制造园区中,周界防范意义重大,对园区的安全起到重要的作用。常规的安防方式是采用人员巡查,人力投入成本大而且效率低。周界一旦被破坏或入侵,会影响园区人员和资产安全,…...

2024/5/7 14:19:30 - VB.net WebBrowser网页元素抓取分析方法

在用WebBrowser编程实现网页操作自动化时,常要分析网页Html,例如网页在加载数据时,常会显示“系统处理中,请稍候..”,我们需要在数据加载完成后才能继续下一步操作,如何抓取这个信息的网页html元素变化&…...

2024/5/7 0:32:52 - 【Objective-C】Objective-C汇总

方法定义 参考:https://www.yiibai.com/objective_c/objective_c_functions.html Objective-C编程语言中方法定义的一般形式如下 - (return_type) method_name:( argumentType1 )argumentName1 joiningArgument2:( argumentType2 )argumentName2 ... joiningArgu…...

2024/5/6 6:01:13 - 【洛谷算法题】P5713-洛谷团队系统【入门2分支结构】

👨💻博客主页:花无缺 欢迎 点赞👍 收藏⭐ 留言📝 加关注✅! 本文由 花无缺 原创 收录于专栏 【洛谷算法题】 文章目录 【洛谷算法题】P5713-洛谷团队系统【入门2分支结构】🌏题目描述🌏输入格…...

2024/5/7 14:58:59 - 【ES6.0】- 扩展运算符(...)

【ES6.0】- 扩展运算符... 文章目录 【ES6.0】- 扩展运算符...一、概述二、拷贝数组对象三、合并操作四、参数传递五、数组去重六、字符串转字符数组七、NodeList转数组八、解构变量九、打印日志十、总结 一、概述 **扩展运算符(...)**允许一个表达式在期望多个参数࿰…...

2024/5/7 1:54:46 - 摩根看好的前智能硬件头部品牌双11交易数据极度异常!——是模式创新还是饮鸩止渴?

文 | 螳螂观察 作者 | 李燃 双11狂欢已落下帷幕,各大品牌纷纷晒出优异的成绩单,摩根士丹利投资的智能硬件头部品牌凯迪仕也不例外。然而有爆料称,在自媒体平台发布霸榜各大榜单喜讯的凯迪仕智能锁,多个平台数据都表现出极度异常…...

2024/5/6 20:04:22 - Go语言常用命令详解(二)

文章目录 前言常用命令go bug示例参数说明 go doc示例参数说明 go env示例 go fix示例 go fmt示例 go generate示例 总结写在最后 前言 接着上一篇继续介绍Go语言的常用命令 常用命令 以下是一些常用的Go命令,这些命令可以帮助您在Go开发中进行编译、测试、运行和…...

2024/5/7 0:32:51 - 用欧拉路径判断图同构推出reverse合法性:1116T4

http://cplusoj.com/d/senior/p/SS231116D 假设我们要把 a a a 变成 b b b,我们在 a i a_i ai 和 a i 1 a_{i1} ai1 之间连边, b b b 同理,则 a a a 能变成 b b b 的充要条件是两图 A , B A,B A,B 同构。 必要性显然࿰…...

2024/5/7 16:05:05 - 【NGINX--1】基础知识

1、在 Debian/Ubuntu 上安装 NGINX 在 Debian 或 Ubuntu 机器上安装 NGINX 开源版。 更新已配置源的软件包信息,并安装一些有助于配置官方 NGINX 软件包仓库的软件包: apt-get update apt install -y curl gnupg2 ca-certificates lsb-release debian-…...

2024/5/7 16:04:58 - Hive默认分割符、存储格式与数据压缩

目录 1、Hive默认分割符2、Hive存储格式3、Hive数据压缩 1、Hive默认分割符 Hive创建表时指定的行受限(ROW FORMAT)配置标准HQL为: ... ROW FORMAT DELIMITED FIELDS TERMINATED BY \u0001 COLLECTION ITEMS TERMINATED BY , MAP KEYS TERMI…...

2024/5/6 19:38:16 - 【论文阅读】MAG:一种用于航天器遥测数据中有效异常检测的新方法

文章目录 摘要1 引言2 问题描述3 拟议框架4 所提出方法的细节A.数据预处理B.变量相关分析C.MAG模型D.异常分数 5 实验A.数据集和性能指标B.实验设置与平台C.结果和比较 6 结论 摘要 异常检测是保证航天器稳定性的关键。在航天器运行过程中,传感器和控制器产生大量周…...

2024/5/7 16:05:05 - --max-old-space-size=8192报错

vue项目运行时,如果经常运行慢,崩溃停止服务,报如下错误 FATAL ERROR: CALL_AND_RETRY_LAST Allocation failed - JavaScript heap out of memory 因为在 Node 中,通过JavaScript使用内存时只能使用部分内存(64位系统&…...

2024/5/7 0:32:49 - 基于深度学习的恶意软件检测

恶意软件是指恶意软件犯罪者用来感染个人计算机或整个组织的网络的软件。 它利用目标系统漏洞,例如可以被劫持的合法软件(例如浏览器或 Web 应用程序插件)中的错误。 恶意软件渗透可能会造成灾难性的后果,包括数据被盗、勒索或网…...

2024/5/6 21:25:34 - JS原型对象prototype

让我简单的为大家介绍一下原型对象prototype吧! 使用原型实现方法共享 1.构造函数通过原型分配的函数是所有对象所 共享的。 2.JavaScript 规定,每一个构造函数都有一个 prototype 属性,指向另一个对象,所以我们也称为原型对象…...

2024/5/7 11:08:22 - C++中只能有一个实例的单例类

C中只能有一个实例的单例类 前面讨论的 President 类很不错,但存在一个缺陷:无法禁止通过实例化多个对象来创建多名总统: President One, Two, Three; 由于复制构造函数是私有的,其中每个对象都是不可复制的,但您的目…...

2024/5/7 7:26:29 - python django 小程序图书借阅源码

开发工具: PyCharm,mysql5.7,微信开发者工具 技术说明: python django html 小程序 功能介绍: 用户端: 登录注册(含授权登录) 首页显示搜索图书,轮播图࿰…...

2024/5/7 0:32:47 - 电子学会C/C++编程等级考试2022年03月(一级)真题解析

C/C++等级考试(1~8级)全部真题・点这里 第1题:双精度浮点数的输入输出 输入一个双精度浮点数,保留8位小数,输出这个浮点数。 时间限制:1000 内存限制:65536输入 只有一行,一个双精度浮点数。输出 一行,保留8位小数的浮点数。样例输入 3.1415926535798932样例输出 3.1…...

2024/5/6 16:50:57 - 配置失败还原请勿关闭计算机,电脑开机屏幕上面显示,配置失败还原更改 请勿关闭计算机 开不了机 这个问题怎么办...

解析如下:1、长按电脑电源键直至关机,然后再按一次电源健重启电脑,按F8健进入安全模式2、安全模式下进入Windows系统桌面后,按住“winR”打开运行窗口,输入“services.msc”打开服务设置3、在服务界面,选中…...

2022/11/19 21:17:18 - 错误使用 reshape要执行 RESHAPE,请勿更改元素数目。

%读入6幅图像(每一幅图像的大小是564*564) f1 imread(WashingtonDC_Band1_564.tif); subplot(3,2,1),imshow(f1); f2 imread(WashingtonDC_Band2_564.tif); subplot(3,2,2),imshow(f2); f3 imread(WashingtonDC_Band3_564.tif); subplot(3,2,3),imsho…...

2022/11/19 21:17:16 - 配置 已完成 请勿关闭计算机,win7系统关机提示“配置Windows Update已完成30%请勿关闭计算机...

win7系统关机提示“配置Windows Update已完成30%请勿关闭计算机”问题的解决方法在win7系统关机时如果有升级系统的或者其他需要会直接进入一个 等待界面,在等待界面中我们需要等待操作结束才能关机,虽然这比较麻烦,但是对系统进行配置和升级…...

2022/11/19 21:17:15 - 台式电脑显示配置100%请勿关闭计算机,“准备配置windows 请勿关闭计算机”的解决方法...

有不少用户在重装Win7系统或更新系统后会遇到“准备配置windows,请勿关闭计算机”的提示,要过很久才能进入系统,有的用户甚至几个小时也无法进入,下面就教大家这个问题的解决方法。第一种方法:我们首先在左下角的“开始…...

2022/11/19 21:17:14 - win7 正在配置 请勿关闭计算机,怎么办Win7开机显示正在配置Windows Update请勿关机...

置信有很多用户都跟小编一样遇到过这样的问题,电脑时发现开机屏幕显现“正在配置Windows Update,请勿关机”(如下图所示),而且还需求等大约5分钟才干进入系统。这是怎样回事呢?一切都是正常操作的,为什么开时机呈现“正…...

2022/11/19 21:17:13 - 准备配置windows 请勿关闭计算机 蓝屏,Win7开机总是出现提示“配置Windows请勿关机”...

Win7系统开机启动时总是出现“配置Windows请勿关机”的提示,没过几秒后电脑自动重启,每次开机都这样无法进入系统,此时碰到这种现象的用户就可以使用以下5种方法解决问题。方法一:开机按下F8,在出现的Windows高级启动选…...

2022/11/19 21:17:12 - 准备windows请勿关闭计算机要多久,windows10系统提示正在准备windows请勿关闭计算机怎么办...

有不少windows10系统用户反映说碰到这样一个情况,就是电脑提示正在准备windows请勿关闭计算机,碰到这样的问题该怎么解决呢,现在小编就给大家分享一下windows10系统提示正在准备windows请勿关闭计算机的具体第一种方法:1、2、依次…...

2022/11/19 21:17:11 - 配置 已完成 请勿关闭计算机,win7系统关机提示“配置Windows Update已完成30%请勿关闭计算机”的解决方法...

今天和大家分享一下win7系统重装了Win7旗舰版系统后,每次关机的时候桌面上都会显示一个“配置Windows Update的界面,提示请勿关闭计算机”,每次停留好几分钟才能正常关机,导致什么情况引起的呢?出现配置Windows Update…...

2022/11/19 21:17:10 - 电脑桌面一直是清理请关闭计算机,windows7一直卡在清理 请勿关闭计算机-win7清理请勿关机,win7配置更新35%不动...

只能是等着,别无他法。说是卡着如果你看硬盘灯应该在读写。如果从 Win 10 无法正常回滚,只能是考虑备份数据后重装系统了。解决来方案一:管理员运行cmd:net stop WuAuServcd %windir%ren SoftwareDistribution SDoldnet start WuA…...

2022/11/19 21:17:09 - 计算机配置更新不起,电脑提示“配置Windows Update请勿关闭计算机”怎么办?

原标题:电脑提示“配置Windows Update请勿关闭计算机”怎么办?win7系统中在开机与关闭的时候总是显示“配置windows update请勿关闭计算机”相信有不少朋友都曾遇到过一次两次还能忍但经常遇到就叫人感到心烦了遇到这种问题怎么办呢?一般的方…...

2022/11/19 21:17:08 - 计算机正在配置无法关机,关机提示 windows7 正在配置windows 请勿关闭计算机 ,然后等了一晚上也没有关掉。现在电脑无法正常关机...

关机提示 windows7 正在配置windows 请勿关闭计算机 ,然后等了一晚上也没有关掉。现在电脑无法正常关机以下文字资料是由(历史新知网www.lishixinzhi.com)小编为大家搜集整理后发布的内容,让我们赶快一起来看一下吧!关机提示 windows7 正在配…...

2022/11/19 21:17:05 - 钉钉提示请勿通过开发者调试模式_钉钉请勿通过开发者调试模式是真的吗好不好用...

钉钉请勿通过开发者调试模式是真的吗好不好用 更新时间:2020-04-20 22:24:19 浏览次数:729次 区域: 南阳 > 卧龙 列举网提醒您:为保障您的权益,请不要提前支付任何费用! 虚拟位置外设器!!轨迹模拟&虚拟位置外设神器 专业用于:钉钉,外勤365,红圈通,企业微信和…...

2022/11/19 21:17:05 - 配置失败还原请勿关闭计算机怎么办,win7系统出现“配置windows update失败 还原更改 请勿关闭计算机”,长时间没反应,无法进入系统的解决方案...

前几天班里有位学生电脑(windows 7系统)出问题了,具体表现是开机时一直停留在“配置windows update失败 还原更改 请勿关闭计算机”这个界面,长时间没反应,无法进入系统。这个问题原来帮其他同学也解决过,网上搜了不少资料&#x…...

2022/11/19 21:17:04 - 一个电脑无法关闭计算机你应该怎么办,电脑显示“清理请勿关闭计算机”怎么办?...

本文为你提供了3个有效解决电脑显示“清理请勿关闭计算机”问题的方法,并在最后教给你1种保护系统安全的好方法,一起来看看!电脑出现“清理请勿关闭计算机”在Windows 7(SP1)和Windows Server 2008 R2 SP1中,添加了1个新功能在“磁…...

2022/11/19 21:17:03 - 请勿关闭计算机还原更改要多久,电脑显示:配置windows更新失败,正在还原更改,请勿关闭计算机怎么办...

许多用户在长期不使用电脑的时候,开启电脑发现电脑显示:配置windows更新失败,正在还原更改,请勿关闭计算机。。.这要怎么办呢?下面小编就带着大家一起看看吧!如果能够正常进入系统,建议您暂时移…...

2022/11/19 21:17:02 - 还原更改请勿关闭计算机 要多久,配置windows update失败 还原更改 请勿关闭计算机,电脑开机后一直显示以...

配置windows update失败 还原更改 请勿关闭计算机,电脑开机后一直显示以以下文字资料是由(历史新知网www.lishixinzhi.com)小编为大家搜集整理后发布的内容,让我们赶快一起来看一下吧!配置windows update失败 还原更改 请勿关闭计算机&#x…...

2022/11/19 21:17:01 - 电脑配置中请勿关闭计算机怎么办,准备配置windows请勿关闭计算机一直显示怎么办【图解】...

不知道大家有没有遇到过这样的一个问题,就是我们的win7系统在关机的时候,总是喜欢显示“准备配置windows,请勿关机”这样的一个页面,没有什么大碍,但是如果一直等着的话就要两个小时甚至更久都关不了机,非常…...

2022/11/19 21:17:00 - 正在准备配置请勿关闭计算机,正在准备配置windows请勿关闭计算机时间长了解决教程...

当电脑出现正在准备配置windows请勿关闭计算机时,一般是您正对windows进行升级,但是这个要是长时间没有反应,我们不能再傻等下去了。可能是电脑出了别的问题了,来看看教程的说法。正在准备配置windows请勿关闭计算机时间长了方法一…...

2022/11/19 21:16:59 - 配置失败还原请勿关闭计算机,配置Windows Update失败,还原更改请勿关闭计算机...

我们使用电脑的过程中有时会遇到这种情况,当我们打开电脑之后,发现一直停留在一个界面:“配置Windows Update失败,还原更改请勿关闭计算机”,等了许久还是无法进入系统。如果我们遇到此类问题应该如何解决呢࿰…...

2022/11/19 21:16:58 - 如何在iPhone上关闭“请勿打扰”

Apple’s “Do Not Disturb While Driving” is a potentially lifesaving iPhone feature, but it doesn’t always turn on automatically at the appropriate time. For example, you might be a passenger in a moving car, but your iPhone may think you’re the one dri…...

2022/11/19 21:16:57