cs231n:assignment2——Q1: Fully-connected Neural Network

视频里 Andrej Karpathy上课的时候说,这次的作业meaty but educational,确实很meaty,作业一般是由.ipynb文件和.py文件组成,这次因为每个.ipynb文件涉及到的.py文件较多,且互相之间有交叉,所以每篇博客只贴出一个.ipynb或者一个.py文件.(因为之前的作业由于是一个.ipynb文件对应一个.py文件,所以就整合到一篇博客里)

还是那句话,有错误希望帮我指出来,多多指教,谢谢

FullyConnectedNets.ipynb内容:

- Fully-Connected Neural Nets

- Affine layer foward

- Affine layer backward

- ReLU layer forward

- ReLU layer backward

- Sandwich layers

- Loss layers Softmax and SVM

- Two-layer network

- Solver

- Multilayer network

- Initial loss and gradient check

- Inline question

- Answer

- Update rules

- SGDMomentum

- RMSProp and Adam

- Train a good model

- Test you model

Fully-Connected Neural Nets

In the previous homework you implemented a fully-connected two-layer neural network on CIFAR-10. The implementation was simple but not very modular since the loss and gradient were computed in a single monolithic function. This is manageable for a simple two-layer network, but would become impractical as we move to bigger models. Ideally we want to build networks using a more modular design so that we can implement different layer types in isolation and then snap them together into models with different architectures.

In this exercise we will implement fully-connected networks using a more modular approach. For each layer we will implement a forward and a backward function. The forward function will receive inputs, weights, and other parameters and will return both an output and a cache object storing data needed for the backward pass, like this:

def layer_forward(x, w):""" Receive inputs x and weights w """# Do some computations ...z = # ... some intermediate value# Do some more computations ...out = # the outputcache = (x, w, z, out) # Values we need to compute gradientsreturn out, cacheThe backward pass will receive upstream derivatives and the cache object, and will return gradients with respect to the inputs and weights, like this:

def layer_backward(dout, cache):"""Receive derivative of loss with respect to outputs and cache,and compute derivative with respect to inputs."""# Unpack cache valuesx, w, z, out = cache# Use values in cache to compute derivativesdx = # Derivative of loss with respect to xdw = # Derivative of loss with respect to wreturn dx, dwAfter implementing a bunch of layers this way, we will be able to easily combine them to build classifiers with different architectures.

In addition to implementing fully-connected networks of arbitrary depth, we will also explore different update rules for optimization, and introduce Dropout as a regularizer and Batch Normalization as a tool to more efficiently optimize deep networks.

# As usual, a bit of setupimport time

import numpy as np

import matplotlib.pyplot as plt

from cs231n.classifiers.fc_net import *

from cs231n.data_utils import get_CIFAR10_data

from cs231n.gradient_check import eval_numerical_gradient, eval_numerical_gradient_array

from cs231n.solver import Solver%matplotlib inline

plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'# for auto-reloading external modules

# see http://stackoverflow.com/questions/1907993/autoreload-of-modules-in-ipython

%load_ext autoreload

%autoreload 2def rel_error(x, y):""" returns relative error """return np.max(np.abs(x - y) / (np.maximum(1e-8, np.abs(x) + np.abs(y))))# Load the (preprocessed) CIFAR10 data.data = get_CIFAR10_data()

for k, v in data.iteritems():print '%s: ' % k, v.shapeX_val: (1000, 3, 32, 32)

X_train: (49000, 3, 32, 32)

X_test: (1000, 3, 32, 32)

y_val: (1000,)

y_train: (49000,)

y_test: (1000,)

Affine layer: foward

Open the file cs231n/layers.py and implement the affine_forward function.

Once you are done you can test your implementaion by running the following:

# Test the affine_forward functionnum_inputs = 2

input_shape = (4, 5, 6)

output_dim = 3input_size = num_inputs * np.prod(input_shape)

weight_size = output_dim * np.prod(input_shape)x = np.linspace(-0.1, 0.5, num=input_size).reshape(num_inputs, *input_shape)

w = np.linspace(-0.2, 0.3, num=weight_size).reshape(np.prod(input_shape), output_dim)

b = np.linspace(-0.3, 0.1, num=output_dim)out, _ = affine_forward(x, w, b)

correct_out = np.array([[ 1.49834967, 1.70660132, 1.91485297],[ 3.25553199, 3.5141327, 3.77273342]])# Compare your output with ours. The error should be around 1e-9.

print 'Testing affine_forward function:'

print 'difference: ', rel_error(out, correct_out)Testing affine_forward function:

difference: 9.76985004799e-10

Affine layer: backward

Now implement the affine_backward function and test your implementation using numeric gradient checking.

# Test the affine_backward functionx = np.random.randn(10, 2, 3)

w = np.random.randn(6, 5)

b = np.random.randn(5)

dout = np.random.randn(10, 5)dx_num = eval_numerical_gradient_array(lambda x: affine_forward(x, w, b)[0], x, dout)

dw_num = eval_numerical_gradient_array(lambda w: affine_forward(x, w, b)[0], w, dout)

db_num = eval_numerical_gradient_array(lambda b: affine_forward(x, w, b)[0], b, dout)_, cache = affine_forward(x, w, b)

dx, dw, db = affine_backward(dout, cache)# The error should be around 1e-10

print 'Testing affine_backward function:'

print 'dx error: ', rel_error(dx_num, dx)

print 'dw error: ', rel_error(dw_num, dw)

print 'db error: ', rel_error(db_num, db)Testing affine_backward function:

dx error: 5.82176848644e-11

dw error: 1.69054721917e-10

db error: 1.40577633097e-11

ReLU layer: forward

Implement the forward pass for the ReLU activation function in the relu_forward function and test your implementation using the following:

# Test the relu_forward functionx = np.linspace(-0.5, 0.5, num=12).reshape(3, 4)out, _ = relu_forward(x)

correct_out = np.array([[ 0., 0., 0., 0., ],[ 0., 0., 0.04545455, 0.13636364,],[ 0.22727273, 0.31818182, 0.40909091, 0.5, ]])# Compare your output with ours. The error should be around 1e-8

print 'Testing relu_forward function:'

print 'difference: ', rel_error(out, correct_out)Testing relu_forward function:

difference: 4.99999979802e-08

ReLU layer: backward

Now implement the backward pass for the ReLU activation function in the relu_backward function and test your implementation using numeric gradient checking:

x = np.random.randn(10, 10)

dout = np.random.randn(*x.shape)dx_num = eval_numerical_gradient_array(lambda x: relu_forward(x)[0], x, dout)_, cache = relu_forward(x)

dx = relu_backward(dout, cache)# The error should be around 1e-12

print 'Testing relu_backward function:'

print 'dx error: ', rel_error(dx_num, dx)Testing relu_backward function:

dx error: 3.27562740606e-12

“Sandwich” layers

There are some common patterns of layers that are frequently used in neural nets. For example, affine layers are frequently followed by a ReLU nonlinearity. To make these common patterns easy, we define several convenience layers in the file cs231n/layer_utils.py.

For now take a look at the affine_relu_forward and affine_relu_backward functions, and run the following to numerically gradient check the backward pass:

from cs231n.layer_utils import affine_relu_forward, affine_relu_backwardx = np.random.randn(2, 3, 4)

w = np.random.randn(12, 10)

b = np.random.randn(10)

dout = np.random.randn(2, 10)out, cache = affine_relu_forward(x, w, b)

dx, dw, db = affine_relu_backward(dout, cache)dx_num = eval_numerical_gradient_array(lambda x: affine_relu_forward(x, w, b)[0], x, dout)

dw_num = eval_numerical_gradient_array(lambda w: affine_relu_forward(x, w, b)[0], w, dout)

db_num = eval_numerical_gradient_array(lambda b: affine_relu_forward(x, w, b)[0], b, dout)print 'Testing affine_relu_forward:'

print 'dx error: ', rel_error(dx_num, dx)

print 'dw error: ', rel_error(dw_num, dw)

print 'db error: ', rel_error(db_num, db)Testing affine_relu_forward:

dx error: 3.60036208641e-10

dw error: 2.61229361266e-09

db error: 4.99397627854e-12

Loss layers: Softmax and SVM

You implemented these loss functions in the last assignment, so we’ll give them to you for free here. You should still make sure you understand how they work by looking at the implementations in cs231n/layers.py.

You can make sure that the implementations are correct by running the following:

num_classes, num_inputs = 10, 50

x = 0.001 * np.random.randn(num_inputs, num_classes)

y = np.random.randint(num_classes, size=num_inputs)dx_num = eval_numerical_gradient(lambda x: svm_loss(x, y)[0], x, verbose=False)

loss, dx = svm_loss(x, y)# Test svm_loss function. Loss should be around 9 and dx error should be 1e-9

print 'Testing svm_loss:'

print 'loss: ', loss

print 'dx error: ', rel_error(dx_num, dx)dx_num = eval_numerical_gradient(lambda x: softmax_loss(x, y)[0], x, verbose=False)

loss, dx = softmax_loss(x, y)# Test softmax_loss function. Loss should be 2.3 and dx error should be 1e-8

print '\nTesting softmax_loss:'

print 'loss: ', loss

print 'dx error: ', rel_error(dx_num, dx)Testing svm_loss:

loss: 9.00052703662

dx error: 1.40215660067e-09Testing softmax_loss:

loss: 2.30263822083

dx error: 1.0369484028e-08

Two-layer network

In the previous assignment you implemented a two-layer neural network in a single monolithic class. Now that you have implemented modular versions of the necessary layers, you will reimplement the two layer network using these modular implementations.

Open the file cs231n/classifiers/fc_net.py and complete the implementation of the TwoLayerNet class. This class will serve as a model for the other networks you will implement in this assignment, so read through it to make sure you understand the API. You can run the cell below to test your implementation.

N, D, H, C = 3, 5, 50, 7

X = np.random.randn(N, D)

y = np.random.randint(C, size=N)std = 1e-2

model = TwoLayerNet(input_dim=D, hidden_dim=H, num_classes=C, weight_scale=std)print 'Testing initialization ... '

W1_std = abs(model.params['W1'].std() - std)

b1 = model.params['b1']

W2_std = abs(model.params['W2'].std() - std)

b2 = model.params['b2']

assert W1_std < std / 10, 'First layer weights do not seem right'

assert np.all(b1 == 0), 'First layer biases do not seem right'

assert W2_std < std / 10, 'Second layer weights do not seem right'

assert np.all(b2 == 0), 'Second layer biases do not seem right'print 'Testing test-time forward pass ... '

model.params['W1'] = np.linspace(-0.7, 0.3, num=D*H).reshape(D, H)

model.params['b1'] = np.linspace(-0.1, 0.9, num=H)

model.params['W2'] = np.linspace(-0.3, 0.4, num=H*C).reshape(H, C)

model.params['b2'] = np.linspace(-0.9, 0.1, num=C)

X = np.linspace(-5.5, 4.5, num=N*D).reshape(D, N).T

scores = model.loss(X)

correct_scores = np.asarray([[11.53165108, 12.2917344, 13.05181771, 13.81190102, 14.57198434, 15.33206765, 16.09215096],[12.05769098, 12.74614105, 13.43459113, 14.1230412, 14.81149128, 15.49994135, 16.18839143],[12.58373087, 13.20054771, 13.81736455, 14.43418138, 15.05099822, 15.66781506, 16.2846319 ]])

scores_diff = np.abs(scores - correct_scores).sum()

assert scores_diff < 1e-6, 'Problem with test-time forward pass'print 'Testing training loss (no regularization)'

y = np.asarray([0, 5, 1])

loss, grads = model.loss(X, y)

correct_loss = 3.4702243556

assert abs(loss - correct_loss) < 1e-10, 'Problem with training-time loss'model.reg = 1.0

loss, grads = model.loss(X, y)

correct_loss = 26.5948426952

assert abs(loss - correct_loss) < 1e-10, 'Problem with regularization loss'for reg in [0.0, 0.7]:print 'Running numeric gradient check with reg = ', regmodel.reg = regloss, grads = model.loss(X, y)for name in sorted(grads):f = lambda _: model.loss(X, y)[0]grad_num = eval_numerical_gradient(f, model.params[name], verbose=False)print '%s relative error: %.2e' % (name, rel_error(grad_num, grads[name]))Testing initialization ...

Testing test-time forward pass ...

Testing training loss (no regularization)

Running numeric gradient check with reg = 0.0

W1 relative error: 1.22e-08

W2 relative error: 3.34e-10

b1 relative error: 4.73e-09

b2 relative error: 4.33e-10

Running numeric gradient check with reg = 0.7

W1 relative error: 2.53e-07

W2 relative error: 1.37e-07

b1 relative error: 1.56e-08

b2 relative error: 9.09e-10

Solver

In the previous assignment, the logic for training models was coupled to the models themselves. Following a more modular design, for this assignment we have split the logic for training models into a separate class.

Open the file cs231n/solver.py and read through it to familiarize yourself with the API. After doing so, use a Solver instance to train a TwoLayerNet that achieves at least 50% accuracy on the validation set.

model = TwoLayerNet()

solver = None##############################################################################

# TODO: Use a Solver instance to train a TwoLayerNet that achieves at least #

# 50% accuracy on the validation set. #

##############################################################################

solver = Solver(model, data,update_rule='sgd',optim_config={'learning_rate': 1e-3,},lr_decay=0.95,num_epochs=10, batch_size=100,print_every=100)

solver.train()

solver.best_val_acc

##############################################################################

# END OF YOUR CODE #

##############################################################################(Iteration 1 / 4900) loss: 2.309509

(Epoch 0 / 10) train acc: 0.111000; val_acc: 0.124000

(Iteration 101 / 4900) loss: 2.031418

(Iteration 201 / 4900) loss: 1.712236

(Iteration 301 / 4900) loss: 1.747420

(Iteration 401 / 4900) loss: 1.549451

(Epoch 1 / 10) train acc: 0.450000; val_acc: 0.414000

(Iteration 501 / 4900) loss: 1.630659

(Iteration 601 / 4900) loss: 1.491387

(Iteration 701 / 4900) loss: 1.442918

(Iteration 801 / 4900) loss: 1.351634

(Iteration 901 / 4900) loss: 1.453418

(Epoch 2 / 10) train acc: 0.491000; val_acc: 0.484000

(Iteration 1001 / 4900) loss: 1.485202

(Iteration 1101 / 4900) loss: 1.383021

(Iteration 1201 / 4900) loss: 1.346942

(Iteration 1301 / 4900) loss: 1.252413

(Iteration 1401 / 4900) loss: 1.537722

(Epoch 3 / 10) train acc: 0.521000; val_acc: 0.480000

(Iteration 1501 / 4900) loss: 1.365271

(Iteration 1601 / 4900) loss: 1.123946

(Iteration 1701 / 4900) loss: 1.315114

(Iteration 1801 / 4900) loss: 1.597782

(Iteration 1901 / 4900) loss: 1.416204

(Epoch 4 / 10) train acc: 0.546000; val_acc: 0.494000

(Iteration 2001 / 4900) loss: 1.114552

(Iteration 2101 / 4900) loss: 1.377966

(Iteration 2201 / 4900) loss: 1.121448

(Iteration 2301 / 4900) loss: 1.306290

(Iteration 2401 / 4900) loss: 1.404830

(Epoch 5 / 10) train acc: 0.559000; val_acc: 0.500000

(Iteration 2501 / 4900) loss: 1.123347

(Iteration 2601 / 4900) loss: 1.449507

(Iteration 2701 / 4900) loss: 1.308397

(Iteration 2801 / 4900) loss: 1.375048

(Iteration 2901 / 4900) loss: 1.259040

(Epoch 6 / 10) train acc: 0.572000; val_acc: 0.491000

(Iteration 3001 / 4900) loss: 1.119232

(Iteration 3101 / 4900) loss: 1.270312

(Iteration 3201 / 4900) loss: 1.204007

(Iteration 3301 / 4900) loss: 1.214074

(Iteration 3401 / 4900) loss: 1.110863

(Epoch 7 / 10) train acc: 0.566000; val_acc: 0.514000

(Iteration 3501 / 4900) loss: 1.253669

(Iteration 3601 / 4900) loss: 1.354838

(Iteration 3701 / 4900) loss: 1.299770

(Iteration 3801 / 4900) loss: 1.184324

(Iteration 3901 / 4900) loss: 1.154244

(Epoch 8 / 10) train acc: 0.594000; val_acc: 0.498000

(Iteration 4001 / 4900) loss: 0.911092

(Iteration 4101 / 4900) loss: 1.154072

(Iteration 4201 / 4900) loss: 1.106225

(Iteration 4301 / 4900) loss: 1.279295

(Iteration 4401 / 4900) loss: 1.046316

(Epoch 9 / 10) train acc: 0.611000; val_acc: 0.503000

(Iteration 4501 / 4900) loss: 1.172954

(Iteration 4601 / 4900) loss: 1.040094

(Iteration 4701 / 4900) loss: 1.369539

(Iteration 4801 / 4900) loss: 1.106506

(Epoch 10 / 10) train acc: 0.588000; val_acc: 0.51500.51500000000000001

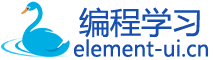

# Run this cell to visualize training loss and train / val accuracyplt.subplot(2, 1, 1)

plt.title('Training loss')

plt.plot(solver.loss_history, 'o')

plt.xlabel('Iteration')plt.subplot(2, 1, 2)

plt.title('Accuracy')

plt.plot(solver.train_acc_history, '-o', label='train')

plt.plot(solver.val_acc_history, '-o', label='val')

plt.plot([0.5] * len(solver.val_acc_history), 'k--')

plt.xlabel('Epoch')

plt.legend(loc='lower right')

plt.gcf().set_size_inches(15, 12)

plt.show()Multilayer network

Next you will implement a fully-connected network with an arbitrary number of hidden layers.

Read through the FullyConnectedNet class in the file cs231n/classifiers/fc_net.py.

Implement the initialization, the forward pass, and the backward pass. For the moment don’t worry about implementing dropout or batch normalization; we will add those features soon.

Initial loss and gradient check

As a sanity check, run the following to check the initial loss and to gradient check the network both with and without regularization. Do the initial losses seem reasonable?

For gradient checking, you should expect to see errors around 1e-6 or less.

# 有的时候relative error会比较大,能达到1e-2的数量级,但是多运行几次,所有参数的relative error都比较小,应该是随机初始化参数的影响

N, D, H1, H2, C = 2, 15, 20, 30, 10

X = np.random.randn(N, D)

y = np.random.randint(C, size=(N,))for reg in [0, 3.14,0.02]:print 'Running check with reg = ', regmodel = FullyConnectedNet([H1, H2], input_dim=D, num_classes=C,reg=reg, weight_scale=5e-2, dtype=np.float64)loss, grads = model.loss(X, y)print 'Initial loss: ', lossfor name in sorted(grads):f = lambda _: model.loss(X, y)[0]grad_num = eval_numerical_gradient(f, model.params[name], verbose=False, h=1e-5)print '%s relative error: %.2e' % (name, rel_error(grad_num, grads[name]))Running check with reg = 0

Initial loss: 2.29966459663

W1 relative error: 2.92e-07

W2 relative error: 2.17e-05

W3 relative error: 4.38e-08

b1 relative error: 3.54e-08

b2 relative error: 1.45e-08

b3 relative error: 1.31e-10

Running check with reg = 3.14

Initial loss: 6.71836699258

W1 relative error: 2.65e-07

W2 relative error: 2.28e-07

W3 relative error: 3.79e-06

b1 relative error: 7.94e-09

b2 relative error: 1.73e-08

b3 relative error: 2.05e-10

Running check with reg = 0.02

Initial loss: 2.32843212504

W1 relative error: 1.19e-07

W2 relative error: 1.47e-06

W3 relative error: 8.67e-06

b1 relative error: 2.08e-08

b2 relative error: 1.21e-02

b3 relative error: 1.39e-10

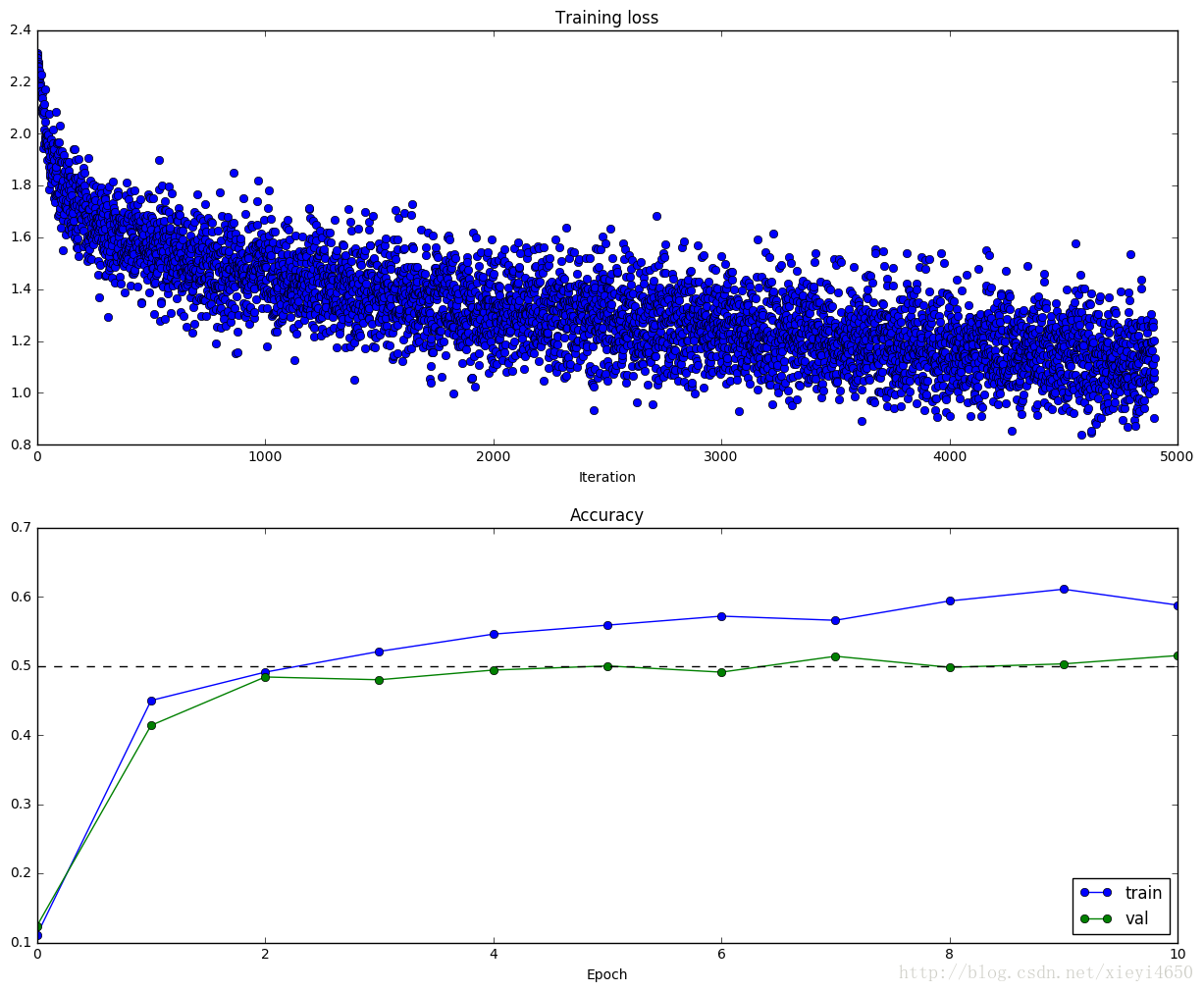

As another sanity check, make sure you can overfit a small dataset of 50 images. First we will try a three-layer network with 100 units in each hidden layer. You will need to tweak the learning rate and initialization scale, but you should be able to overfit and achieve 100% training accuracy within 20 epochs.

# TODO: Use a three-layer Net to overfit 50 training examples.num_train = 50

small_data = {'X_train': data['X_train'][:num_train],'y_train': data['y_train'][:num_train],'X_val': data['X_val'],'y_val': data['y_val'],

}#weight_scale = 1e-2

#learning_rate = 1e-4

weight_scale = 4e-2

learning_rate = 1e-3

model = FullyConnectedNet([100, 100],weight_scale=weight_scale, dtype=np.float64)

solver = Solver(model, small_data,print_every=10, num_epochs=20, batch_size=25,update_rule='sgd',optim_config={'learning_rate': learning_rate,})

solver.train()plt.plot(solver.loss_history, 'o')

plt.title('Training loss history')

plt.xlabel('Iteration')

plt.ylabel('Training loss')

plt.show()(Iteration 1 / 40) loss: 10.016980

(Epoch 0 / 20) train acc: 0.260000; val_acc: 0.110000

(Epoch 1 / 20) train acc: 0.280000; val_acc: 0.131000

(Epoch 2 / 20) train acc: 0.380000; val_acc: 0.130000

(Epoch 3 / 20) train acc: 0.540000; val_acc: 0.114000

(Epoch 4 / 20) train acc: 0.800000; val_acc: 0.110000

(Epoch 5 / 20) train acc: 0.880000; val_acc: 0.121000

(Iteration 11 / 40) loss: 0.474159

(Epoch 6 / 20) train acc: 0.940000; val_acc: 0.136000

(Epoch 7 / 20) train acc: 0.920000; val_acc: 0.143000

(Epoch 8 / 20) train acc: 1.000000; val_acc: 0.141000

(Epoch 9 / 20) train acc: 1.000000; val_acc: 0.140000

(Epoch 10 / 20) train acc: 1.000000; val_acc: 0.138000

(Iteration 21 / 40) loss: 0.049274

(Epoch 11 / 20) train acc: 1.000000; val_acc: 0.139000

(Epoch 12 / 20) train acc: 1.000000; val_acc: 0.141000

(Epoch 13 / 20) train acc: 1.000000; val_acc: 0.142000

(Epoch 14 / 20) train acc: 1.000000; val_acc: 0.141000

(Epoch 15 / 20) train acc: 1.000000; val_acc: 0.141000

(Iteration 31 / 40) loss: 0.011080

(Epoch 16 / 20) train acc: 1.000000; val_acc: 0.139000

(Epoch 17 / 20) train acc: 1.000000; val_acc: 0.138000

(Epoch 18 / 20) train acc: 1.000000; val_acc: 0.138000

(Epoch 19 / 20) train acc: 1.000000; val_acc: 0.134000

(Epoch 20 / 20) train acc: 1.000000; val_acc: 0.13300

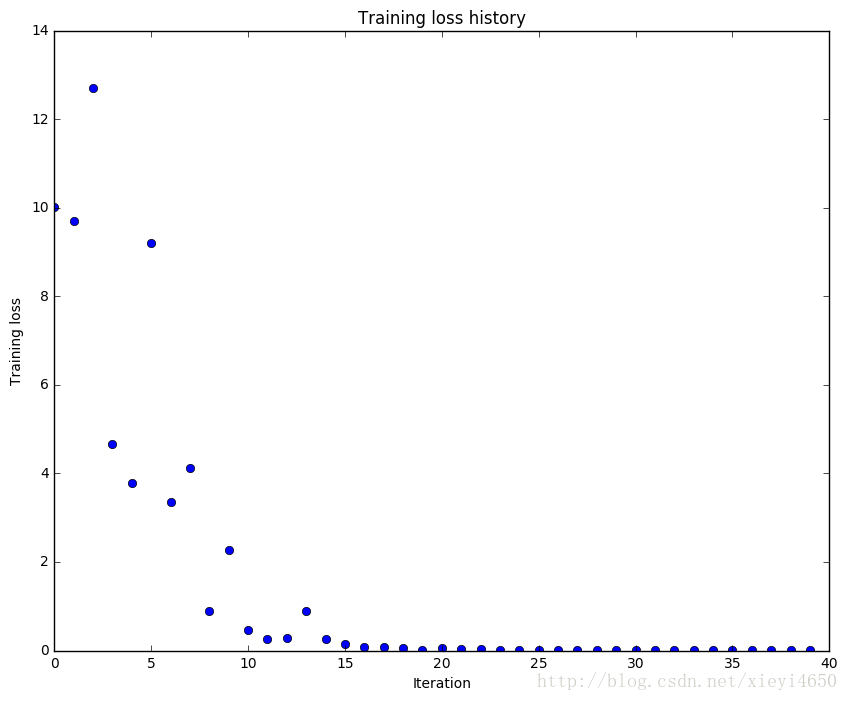

Now try to use a five-layer network with 100 units on each layer to overfit 50 training examples. Again you will have to adjust the learning rate and weight initialization, but you should be able to achieve 100% training accuracy within 20 epochs.

# TODO: Use a five-layer Net to overfit 50 training examples.num_train = 50

small_data = {'X_train': data['X_train'][:num_train],'y_train': data['y_train'][:num_train],'X_val': data['X_val'],'y_val': data['y_val'],

}# learning_rate = 1e-3

# weight_scale = 1e-5

learning_rate = 1e-3

weight_scale = 6e-2

model = FullyConnectedNet([100, 100, 100, 100],weight_scale=weight_scale, dtype=np.float64)

solver = Solver(model, small_data,print_every=10, num_epochs=20, batch_size=25,update_rule='sgd',optim_config={'learning_rate': learning_rate,})

solver.train()plt.plot(solver.loss_history, 'o')

plt.title('Training loss history')

plt.xlabel('Iteration')

plt.ylabel('Training loss')

plt.show()(Iteration 1 / 40) loss: 8.242625

(Epoch 0 / 20) train acc: 0.040000; val_acc: 0.108000

(Epoch 1 / 20) train acc: 0.180000; val_acc: 0.119000

(Epoch 2 / 20) train acc: 0.260000; val_acc: 0.126000

(Epoch 3 / 20) train acc: 0.480000; val_acc: 0.116000

(Epoch 4 / 20) train acc: 0.500000; val_acc: 0.110000

(Epoch 5 / 20) train acc: 0.600000; val_acc: 0.114000

(Iteration 11 / 40) loss: 1.805009

(Epoch 6 / 20) train acc: 0.800000; val_acc: 0.113000

(Epoch 7 / 20) train acc: 0.860000; val_acc: 0.108000

(Epoch 8 / 20) train acc: 0.920000; val_acc: 0.116000

(Epoch 9 / 20) train acc: 0.960000; val_acc: 0.113000

(Epoch 10 / 20) train acc: 0.960000; val_acc: 0.116000

(Iteration 21 / 40) loss: 0.137192

(Epoch 11 / 20) train acc: 0.980000; val_acc: 0.113000

(Epoch 12 / 20) train acc: 0.980000; val_acc: 0.118000

(Epoch 13 / 20) train acc: 0.980000; val_acc: 0.118000

(Epoch 14 / 20) train acc: 0.980000; val_acc: 0.118000

(Epoch 15 / 20) train acc: 0.980000; val_acc: 0.118000

(Iteration 31 / 40) loss: 0.084054

(Epoch 16 / 20) train acc: 1.000000; val_acc: 0.118000

(Epoch 17 / 20) train acc: 1.000000; val_acc: 0.113000

(Epoch 18 / 20) train acc: 1.000000; val_acc: 0.115000

(Epoch 19 / 20) train acc: 1.000000; val_acc: 0.118000

(Epoch 20 / 20) train acc: 1.000000; val_acc: 0.119000

Inline question:

Did you notice anything about the comparative difficulty of training the three-layer net vs training the five layer net?

Answer:

training five-layer net need bigger weight_scale since it has deeper net so five-layer net’s weights get higher probablity to decrease to zero.

As five-layer net initialize weights with higher weight scale, so it needs bigger learning rate.

three-layer net is more robust than five-layer net.

5层网络比三层网络更深,所以计算过程中的值越来越小vanish现象更严重,所以需要讲weight scale调大,因为weight scale调大了,所以同样条件下,学习率也要调大才能在同样步骤内更好的训练网络.5层网络比三层更敏感和脆弱.

其实不太懂他想问啥,感觉很容易就调到了100%

Update rules

So far we have used vanilla stochastic gradient descent (SGD) as our update rule. More sophisticated update rules can make it easier to train deep networks. We will implement a few of the most commonly used update rules and compare them to vanilla SGD.

SGD+Momentum

Stochastic gradient descent with momentum is a widely used update rule that tends to make deep networks converge faster than vanilla stochstic gradient descent.

Open the file cs231n/optim.py and read the documentation at the top of the file to make sure you understand the API. Implement the SGD+momentum update rule in the function sgd_momentum and run the following to check your implementation. You should see errors less than 1e-8.

from cs231n.optim import sgd_momentumN, D = 4, 5

w = np.linspace(-0.4, 0.6, num=N*D).reshape(N, D)

dw = np.linspace(-0.6, 0.4, num=N*D).reshape(N, D)

v = np.linspace(0.6, 0.9, num=N*D).reshape(N, D)config = {'learning_rate': 1e-3, 'velocity': v}

next_w, _ = sgd_momentum(w, dw, config=config)expected_next_w = np.asarray([[ 0.1406, 0.20738947, 0.27417895, 0.34096842, 0.40775789],[ 0.47454737, 0.54133684, 0.60812632, 0.67491579, 0.74170526],[ 0.80849474, 0.87528421, 0.94207368, 1.00886316, 1.07565263],[ 1.14244211, 1.20923158, 1.27602105, 1.34281053, 1.4096 ]])

expected_velocity = np.asarray([[ 0.5406, 0.55475789, 0.56891579, 0.58307368, 0.59723158],[ 0.61138947, 0.62554737, 0.63970526, 0.65386316, 0.66802105],[ 0.68217895, 0.69633684, 0.71049474, 0.72465263, 0.73881053],[ 0.75296842, 0.76712632, 0.78128421, 0.79544211, 0.8096 ]])print 'next_w error: ', rel_error(next_w, expected_next_w)

print 'velocity error: ', rel_error(expected_velocity, config['velocity'])next_w error: 8.88234703351e-09

velocity error: 4.26928774328e-09

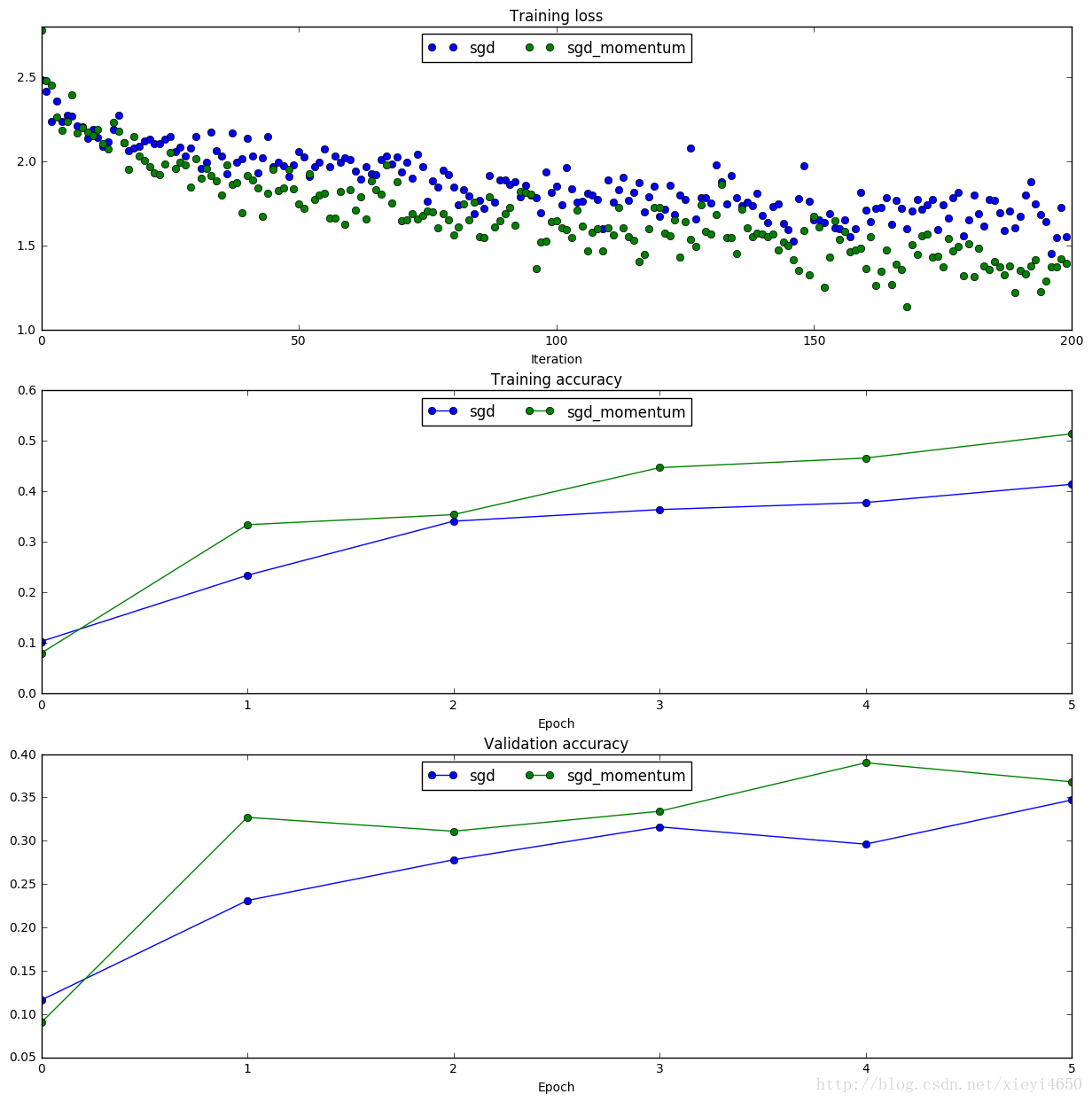

Once you have done so, run the following to train a six-layer network with both SGD and SGD+momentum. You should see the SGD+momentum update rule converge faster.

num_train = 4000

small_data = {'X_train': data['X_train'][:num_train],'y_train': data['y_train'][:num_train],'X_val': data['X_val'],'y_val': data['y_val'],

}solvers = {}for update_rule in ['sgd', 'sgd_momentum']:print 'running with ', update_rulemodel = FullyConnectedNet([100, 100, 100, 100, 100], weight_scale=5e-2)solver = Solver(model, small_data,num_epochs=5, batch_size=100,update_rule=update_rule,optim_config={'learning_rate': 1e-2,},verbose=True)solvers[update_rule] = solversolver.train()printplt.subplot(3, 1, 1)

plt.title('Training loss')

plt.xlabel('Iteration')plt.subplot(3, 1, 2)

plt.title('Training accuracy')

plt.xlabel('Epoch')plt.subplot(3, 1, 3)

plt.title('Validation accuracy')

plt.xlabel('Epoch')for update_rule, solver in solvers.iteritems():plt.subplot(3, 1, 1)plt.plot(solver.loss_history, 'o', label=update_rule)plt.subplot(3, 1, 2)plt.plot(solver.train_acc_history, '-o', label=update_rule)plt.subplot(3, 1, 3)plt.plot(solver.val_acc_history, '-o', label=update_rule)for i in [1, 2, 3]:plt.subplot(3, 1, i)plt.legend(loc='upper center', ncol=4)

plt.gcf().set_size_inches(15, 15)

plt.show()running with sgd

(Iteration 1 / 200) loss: 2.482962

(Epoch 0 / 5) train acc: 0.103000; val_acc: 0.116000

(Iteration 11 / 200) loss: 2.189759

(Iteration 21 / 200) loss: 2.118428

(Iteration 31 / 200) loss: 2.146263

(Epoch 1 / 5) train acc: 0.234000; val_acc: 0.231000

(Iteration 41 / 200) loss: 2.136812

(Iteration 51 / 200) loss: 2.058494

(Iteration 61 / 200) loss: 2.010344

(Iteration 71 / 200) loss: 1.935777

(Epoch 2 / 5) train acc: 0.341000; val_acc: 0.278000

(Iteration 81 / 200) loss: 1.848450

(Iteration 91 / 200) loss: 1.890258

(Iteration 101 / 200) loss: 1.851392

(Iteration 111 / 200) loss: 1.890978

(Epoch 3 / 5) train acc: 0.364000; val_acc: 0.316000

(Iteration 121 / 200) loss: 1.674997

(Iteration 131 / 200) loss: 1.753746

(Iteration 141 / 200) loss: 1.677929

(Iteration 151 / 200) loss: 1.651327

(Epoch 4 / 5) train acc: 0.378000; val_acc: 0.296000

(Iteration 161 / 200) loss: 1.707673

(Iteration 171 / 200) loss: 1.771841

(Iteration 181 / 200) loss: 1.650195

(Iteration 191 / 200) loss: 1.671102

(Epoch 5 / 5) train acc: 0.414000; val_acc: 0.347000running with sgd_momentum

(Iteration 1 / 200) loss: 2.779826

(Epoch 0 / 5) train acc: 0.080000; val_acc: 0.090000

(Iteration 11 / 200) loss: 2.151418

(Iteration 21 / 200) loss: 2.005661

(Iteration 31 / 200) loss: 2.018002

(Epoch 1 / 5) train acc: 0.334000; val_acc: 0.327000

(Iteration 41 / 200) loss: 1.914837

(Iteration 51 / 200) loss: 1.745527

(Iteration 61 / 200) loss: 1.829091

(Iteration 71 / 200) loss: 1.646542

(Epoch 2 / 5) train acc: 0.354000; val_acc: 0.311000

(Iteration 81 / 200) loss: 1.561354

(Iteration 91 / 200) loss: 1.687099

(Iteration 101 / 200) loss: 1.644848

(Iteration 111 / 200) loss: 1.604384

(Epoch 3 / 5) train acc: 0.447000; val_acc: 0.334000

(Iteration 121 / 200) loss: 1.727682

(Iteration 131 / 200) loss: 1.569907

(Iteration 141 / 200) loss: 1.565606

(Iteration 151 / 200) loss: 1.674119

(Epoch 4 / 5) train acc: 0.466000; val_acc: 0.390000

(Iteration 161 / 200) loss: 1.364019

(Iteration 171 / 200) loss: 1.449550

(Iteration 181 / 200) loss: 1.510401

(Iteration 191 / 200) loss: 1.353840

(Epoch 5 / 5) train acc: 0.514000; val_acc: 0.368000

RMSProp and Adam

RMSProp [1] and Adam [2] are update rules that set per-parameter learning rates by using a running average of the second moments of gradients.

In the file cs231n/optim.py, implement the RMSProp update rule in the rmsprop function and implement the Adam update rule in the adam function, and check your implementations using the tests below.

[1] Tijmen Tieleman and Geoffrey Hinton. “Lecture 6.5-rmsprop: Divide the gradient by a running average of its recent magnitude.” COURSERA: Neural Networks for Machine Learning 4 (2012).

[2] Diederik Kingma and Jimmy Ba, “Adam: A Method for Stochastic Optimization”, ICLR 2015.

# Test RMSProp implementation; you should see errors less than 1e-7

from cs231n.optim import rmspropN, D = 4, 5

w = np.linspace(-0.4, 0.6, num=N*D).reshape(N, D)

dw = np.linspace(-0.6, 0.4, num=N*D).reshape(N, D)

cache = np.linspace(0.6, 0.9, num=N*D).reshape(N, D)config = {'learning_rate': 1e-2, 'cache': cache}

next_w, _ = rmsprop(w, dw, config=config)expected_next_w = np.asarray([[-0.39223849, -0.34037513, -0.28849239, -0.23659121, -0.18467247],[-0.132737, -0.08078555, -0.02881884, 0.02316247, 0.07515774],[ 0.12716641, 0.17918792, 0.23122175, 0.28326742, 0.33532447],[ 0.38739248, 0.43947102, 0.49155973, 0.54365823, 0.59576619]])

expected_cache = np.asarray([[ 0.5976, 0.6126277, 0.6277108, 0.64284931, 0.65804321],[ 0.67329252, 0.68859723, 0.70395734, 0.71937285, 0.73484377],[ 0.75037008, 0.7659518, 0.78158892, 0.79728144, 0.81302936],[ 0.82883269, 0.84469141, 0.86060554, 0.87657507, 0.8926 ]])print 'next_w error: ', rel_error(expected_next_w, next_w)

print 'cache error: ', rel_error(expected_cache, config['cache'])next_w error: 9.50264522989e-08

cache error: 2.64779558072e-09

# Test Adam implementation; you should see errors around 1e-7 or less

from cs231n.optim import adamN, D = 4, 5

w = np.linspace(-0.4, 0.6, num=N*D).reshape(N, D)

dw = np.linspace(-0.6, 0.4, num=N*D).reshape(N, D)

m = np.linspace(0.6, 0.9, num=N*D).reshape(N, D)

v = np.linspace(0.7, 0.5, num=N*D).reshape(N, D)config = {'learning_rate': 1e-2, 'm': m, 'v': v, 't': 5}

next_w, _ = adam(w, dw, config=config)expected_next_w = np.asarray([[-0.40094747, -0.34836187, -0.29577703, -0.24319299, -0.19060977],[-0.1380274, -0.08544591, -0.03286534, 0.01971428, 0.0722929],[ 0.1248705, 0.17744702, 0.23002243, 0.28259667, 0.33516969],[ 0.38774145, 0.44031188, 0.49288093, 0.54544852, 0.59801459]])

expected_v = np.asarray([[ 0.69966, 0.68908382, 0.67851319, 0.66794809, 0.65738853,],[ 0.64683452, 0.63628604, 0.6257431, 0.61520571, 0.60467385,],[ 0.59414753, 0.58362676, 0.57311152, 0.56260183, 0.55209767,],[ 0.54159906, 0.53110598, 0.52061845, 0.51013645, 0.49966, ]])

expected_m = np.asarray([[ 0.48, 0.49947368, 0.51894737, 0.53842105, 0.55789474],[ 0.57736842, 0.59684211, 0.61631579, 0.63578947, 0.65526316],[ 0.67473684, 0.69421053, 0.71368421, 0.73315789, 0.75263158],[ 0.77210526, 0.79157895, 0.81105263, 0.83052632, 0.85 ]])print 'next_w error: ', rel_error(expected_next_w, next_w)

print 'v error: ', rel_error(expected_v, config['v'])

print 'm error: ', rel_error(expected_m, config['m'])next_w error: 1.13956917985e-07

v error: 4.20831403811e-09

m error: 4.21496319311e-09

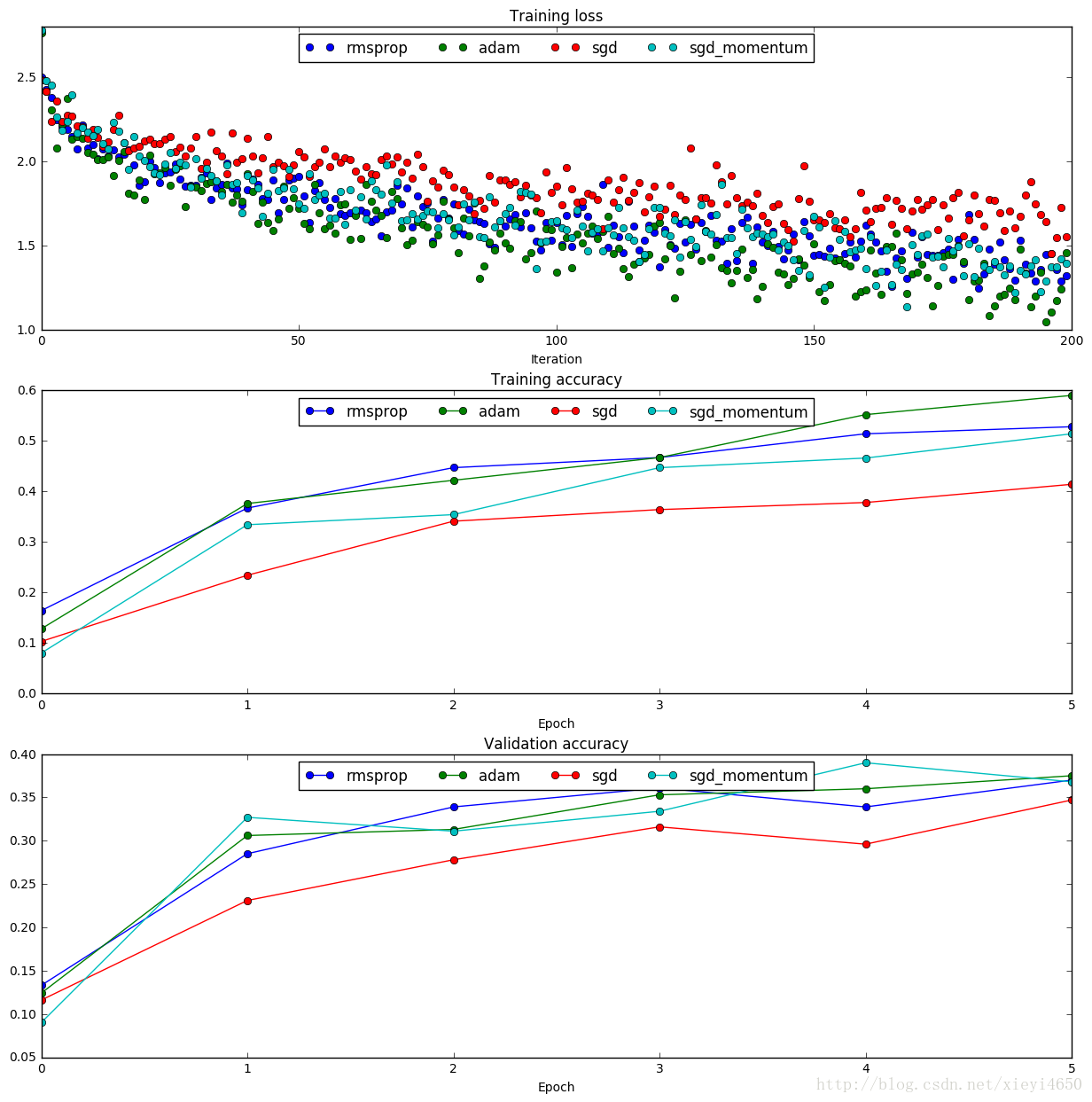

Once you have debugged your RMSProp and Adam implementations, run the following to train a pair of deep networks using these new update rules:

learning_rates = {'rmsprop': 1e-4, 'adam': 1e-3}

for update_rule in ['adam', 'rmsprop']:print 'running with ', update_rulemodel = FullyConnectedNet([100, 100, 100, 100, 100], weight_scale=5e-2)solver = Solver(model, small_data,num_epochs=5, batch_size=100,update_rule=update_rule,optim_config={'learning_rate': learning_rates[update_rule]},verbose=True)solvers[update_rule] = solversolver.train()printplt.subplot(3, 1, 1)

plt.title('Training loss')

plt.xlabel('Iteration')plt.subplot(3, 1, 2)

plt.title('Training accuracy')

plt.xlabel('Epoch')plt.subplot(3, 1, 3)

plt.title('Validation accuracy')

plt.xlabel('Epoch')for update_rule, solver in solvers.iteritems():plt.subplot(3, 1, 1)plt.plot(solver.loss_history, 'o', label=update_rule)plt.subplot(3, 1, 2)plt.plot(solver.train_acc_history, '-o', label=update_rule)plt.subplot(3, 1, 3)plt.plot(solver.val_acc_history, '-o', label=update_rule)for i in [1, 2, 3]:plt.subplot(3, 1, i)plt.legend(loc='upper center', ncol=4)

plt.gcf().set_size_inches(15, 15)

plt.show()running with adam

(Iteration 1 / 200) loss: 2.764716

(Epoch 0 / 5) train acc: 0.128000; val_acc: 0.124000

(Iteration 11 / 200) loss: 2.040898

(Iteration 21 / 200) loss: 1.774376

(Iteration 31 / 200) loss: 1.847699

(Epoch 1 / 5) train acc: 0.376000; val_acc: 0.306000

(Iteration 41 / 200) loss: 1.926563

(Iteration 51 / 200) loss: 1.720461

(Iteration 61 / 200) loss: 1.537673

(Iteration 71 / 200) loss: 1.603966

(Epoch 2 / 5) train acc: 0.422000; val_acc: 0.313000

(Iteration 81 / 200) loss: 1.602464

(Iteration 91 / 200) loss: 1.514707

(Iteration 101 / 200) loss: 1.341900

(Iteration 111 / 200) loss: 1.671358

(Epoch 3 / 5) train acc: 0.467000; val_acc: 0.353000

(Iteration 121 / 200) loss: 1.638983

(Iteration 131 / 200) loss: 1.433005

(Iteration 141 / 200) loss: 1.259506

(Iteration 151 / 200) loss: 1.510506

(Epoch 4 / 5) train acc: 0.552000; val_acc: 0.360000

(Iteration 161 / 200) loss: 1.234063

(Iteration 171 / 200) loss: 1.344069

(Iteration 181 / 200) loss: 1.179093

(Iteration 191 / 200) loss: 1.477335

(Epoch 5 / 5) train acc: 0.590000; val_acc: 0.375000running with rmsprop

(Iteration 1 / 200) loss: 2.498833

(Epoch 0 / 5) train acc: 0.164000; val_acc: 0.133000

(Iteration 11 / 200) loss: 2.099360

(Iteration 21 / 200) loss: 1.879164

(Iteration 31 / 200) loss: 1.856589

(Epoch 1 / 5) train acc: 0.367000; val_acc: 0.285000

(Iteration 41 / 200) loss: 1.831192

(Iteration 51 / 200) loss: 1.911368

(Iteration 61 / 200) loss: 1.693546

(Iteration 71 / 200) loss: 1.745929

(Epoch 2 / 5) train acc: 0.447000; val_acc: 0.339000

(Iteration 81 / 200) loss: 1.748112

(Iteration 91 / 200) loss: 1.613525

(Iteration 101 / 200) loss: 1.635379

(Iteration 111 / 200) loss: 1.491368

(Epoch 3 / 5) train acc: 0.467000; val_acc: 0.361000

(Iteration 121 / 200) loss: 1.372249

(Iteration 131 / 200) loss: 1.678353

(Iteration 141 / 200) loss: 1.521616

(Iteration 151 / 200) loss: 1.441466

(Epoch 4 / 5) train acc: 0.514000; val_acc: 0.339000

(Iteration 161 / 200) loss: 1.619760

(Iteration 171 / 200) loss: 1.432048

(Iteration 181 / 200) loss: 1.684465

(Iteration 191 / 200) loss: 1.532590

(Epoch 5 / 5) train acc: 0.528000; val_acc: 0.370000

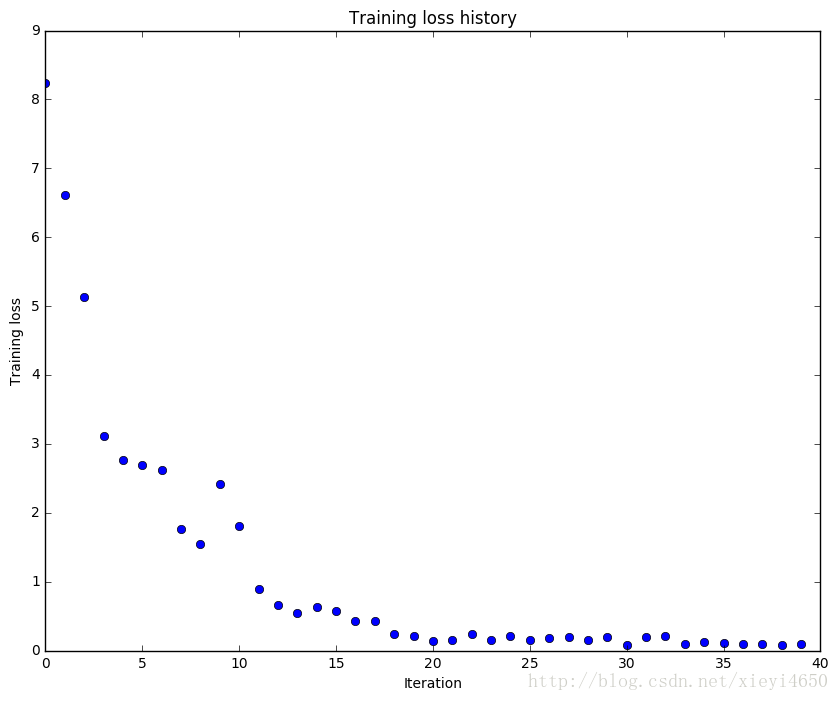

Train a good model!

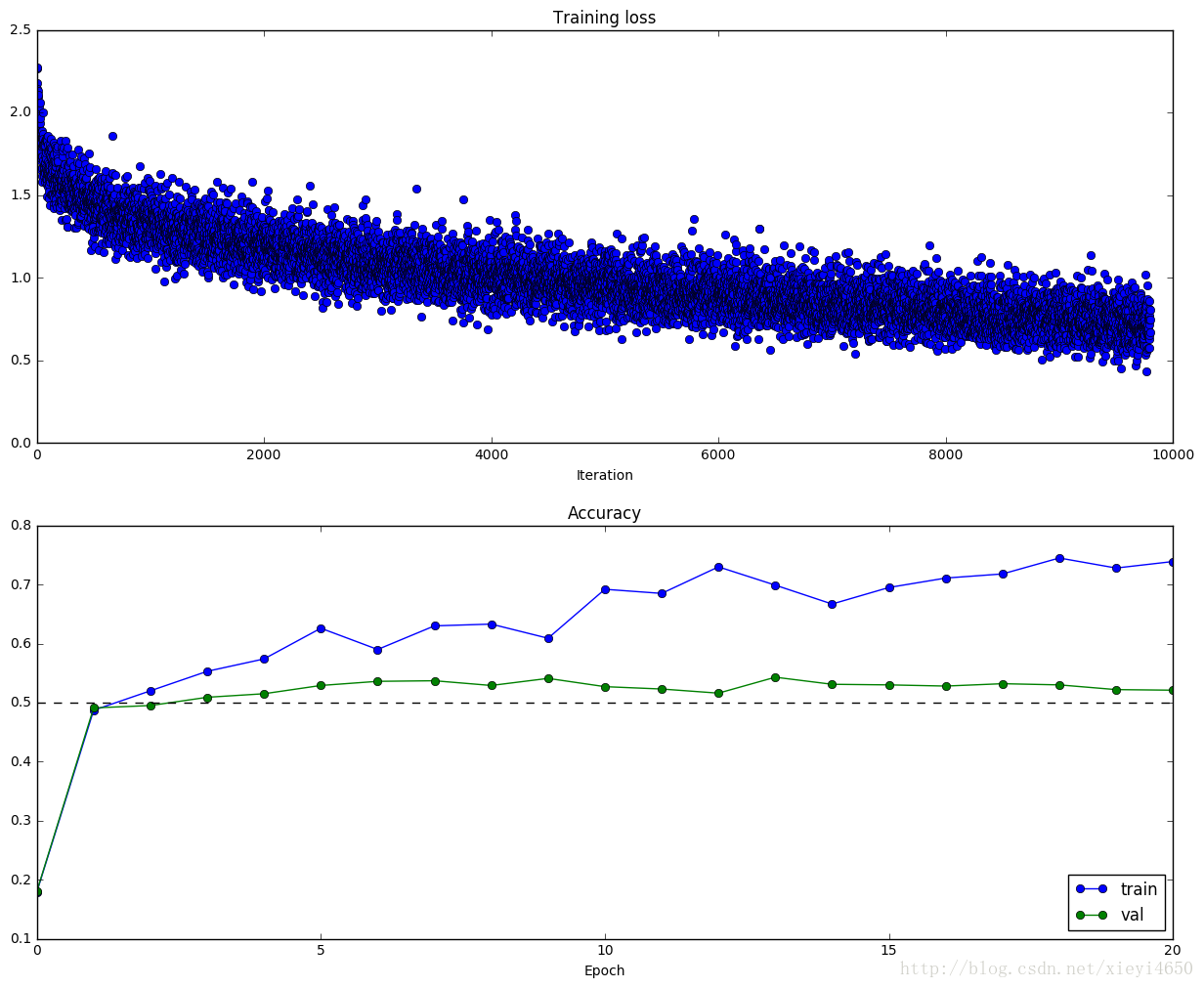

Train the best fully-connected model that you can on CIFAR-10, storing your best model in the best_model variable. We require you to get at least 50% accuracy on the validation set using a fully-connected net.

If you are careful it should be possible to get accuracies above 55%, but we don’t require it for this part and won’t assign extra credit for doing so. Later in the assignment we will ask you to train the best convolutional network that you can on CIFAR-10, and we would prefer that you spend your effort working on convolutional nets rather than fully-connected nets.

You might find it useful to complete the BatchNormalization.ipynb and Dropout.ipynb notebooks before completing this part, since those techniques can help you train powerful models.

best_model = None

best_val_acc = 0

################################################################################

# TODO: Train the best FullyConnectedNet that you can on CIFAR-10. You might #

# batch normalization and dropout useful. Store your best model in the #

# best_model variable. #

################################################################################

reg_choice = [0, 0.02, 0.05]

#dropout_choice = [0.25, 0.5]

#netstructure_choice = [

# [100,100],

# [100, 100, 100],

# [50, 50, 50, 50, 50, 50, 50]]

dropout_choice = [0]

netstructure_choice = [[100, 100]]

for hidden_dim in netstructure_choice:for dropout in dropout_choice:model = FullyConnectedNet(hidden_dim, reg=0, weight_scale=5e-2, dtype=np.float64,use_batchnorm=True, dropout=dropout)solver = Solver(model, data,num_epochs=20, batch_size=100,update_rule='adam',optim_config={'learning_rate': 5e-3},print_every=100,lr_decay=0.95,verbose=True)solver.train() if solver.best_val_acc>best_val_acc:best_model = modelprint plt.subplot(2, 1, 1)plt.title('Training loss')plt.plot(solver.loss_history, 'o')plt.xlabel('Iteration')plt.subplot(2, 1, 2)plt.title('Accuracy')plt.plot(solver.train_acc_history, '-o', label='train')plt.plot(solver.val_acc_history, '-o', label='val')plt.plot([0.5] * len(solver.val_acc_history), 'k--')plt.xlabel('Epoch')plt.legend(loc='lower right')plt.gcf().set_size_inches(15, 12)plt.show()

################################################################################

# END OF YOUR CODE #

################################################################################(Iteration 1 / 9800) loss: 2.263781

(Epoch 0 / 20) train acc: 0.179000; val_acc: 0.180000

(Iteration 101 / 9800) loss: 1.624115

(Iteration 201 / 9800) loss: 1.467661

(Iteration 301 / 9800) loss: 1.591997

(Iteration 401 / 9800) loss: 1.432411

(Epoch 1 / 20) train acc: 0.487000; val_acc: 0.491000

(Iteration 501 / 9800) loss: 1.241822

(Iteration 601 / 9800) loss: 1.546403

(Iteration 701 / 9800) loss: 1.411293

(Iteration 801 / 9800) loss: 1.375881

(Iteration 901 / 9800) loss: 1.242919

(Epoch 2 / 20) train acc: 0.520000; val_acc: 0.495000

(Iteration 1001 / 9800) loss: 1.316806

(Iteration 1101 / 9800) loss: 1.340302

(Iteration 1201 / 9800) loss: 1.335680

(Iteration 1301 / 9800) loss: 1.346994

(Iteration 1401 / 9800) loss: 1.156202

(Epoch 3 / 20) train acc: 0.553000; val_acc: 0.509000

(Iteration 1501 / 9800) loss: 1.111737

(Iteration 1601 / 9800) loss: 1.339837

(Iteration 1701 / 9800) loss: 1.218292

(Iteration 1801 / 9800) loss: 1.344992

(Iteration 1901 / 9800) loss: 1.198010

(Epoch 4 / 20) train acc: 0.574000; val_acc: 0.515000

(Iteration 2001 / 9800) loss: 1.185471

(Iteration 2101 / 9800) loss: 1.245266

(Iteration 2201 / 9800) loss: 1.046663

(Iteration 2301 / 9800) loss: 1.128248

(Iteration 2401 / 9800) loss: 1.100717

(Epoch 5 / 20) train acc: 0.626000; val_acc: 0.529000

(Iteration 2501 / 9800) loss: 1.076717

(Iteration 2601 / 9800) loss: 1.154111

(Iteration 2701 / 9800) loss: 1.077080

(Iteration 2801 / 9800) loss: 0.998500

(Iteration 2901 / 9800) loss: 1.051188

(Epoch 6 / 20) train acc: 0.590000; val_acc: 0.536000

(Iteration 3001 / 9800) loss: 1.004974

(Iteration 3101 / 9800) loss: 1.124638

(Iteration 3201 / 9800) loss: 1.073654

(Iteration 3301 / 9800) loss: 0.970181

(Iteration 3401 / 9800) loss: 1.115142

(Epoch 7 / 20) train acc: 0.630000; val_acc: 0.537000

(Iteration 3501 / 9800) loss: 0.869317

(Iteration 3601 / 9800) loss: 1.109377

(Iteration 3701 / 9800) loss: 1.037178

(Iteration 3801 / 9800) loss: 0.947001

(Iteration 3901 / 9800) loss: 0.989016

(Epoch 8 / 20) train acc: 0.633000; val_acc: 0.529000

(Iteration 4001 / 9800) loss: 0.949825

(Iteration 4101 / 9800) loss: 1.007835

(Iteration 4201 / 9800) loss: 0.894922

(Iteration 4301 / 9800) loss: 1.134644

(Iteration 4401 / 9800) loss: 0.932514

(Epoch 9 / 20) train acc: 0.609000; val_acc: 0.541000

(Iteration 4501 / 9800) loss: 1.117945

(Iteration 4601 / 9800) loss: 1.066002

(Iteration 4701 / 9800) loss: 0.858422

(Iteration 4801 / 9800) loss: 0.799150

(Epoch 10 / 20) train acc: 0.692000; val_acc: 0.527000

(Iteration 4901 / 9800) loss: 1.027588

(Iteration 5001 / 9800) loss: 0.903380

(Iteration 5101 / 9800) loss: 0.950514

(Iteration 5201 / 9800) loss: 0.891470

(Iteration 5301 / 9800) loss: 0.947976

(Epoch 11 / 20) train acc: 0.685000; val_acc: 0.523000

(Iteration 5401 / 9800) loss: 1.161916

(Iteration 5501 / 9800) loss: 1.039629

(Iteration 5601 / 9800) loss: 0.895261

(Iteration 5701 / 9800) loss: 0.855530

(Iteration 5801 / 9800) loss: 0.723047

(Epoch 12 / 20) train acc: 0.730000; val_acc: 0.516000

(Iteration 5901 / 9800) loss: 1.015861

(Iteration 6001 / 9800) loss: 0.921310

(Iteration 6101 / 9800) loss: 1.055507

(Iteration 6201 / 9800) loss: 0.917648

(Iteration 6301 / 9800) loss: 0.767686

(Epoch 13 / 20) train acc: 0.699000; val_acc: 0.543000

(Iteration 6401 / 9800) loss: 1.170058

(Iteration 6501 / 9800) loss: 0.810596

(Iteration 6601 / 9800) loss: 0.920641

(Iteration 6701 / 9800) loss: 0.725889

(Iteration 6801 / 9800) loss: 0.931281

(Epoch 14 / 20) train acc: 0.667000; val_acc: 0.531000

(Iteration 6901 / 9800) loss: 0.701817

(Iteration 7001 / 9800) loss: 0.788107

(Iteration 7101 / 9800) loss: 0.818656

(Iteration 7201 / 9800) loss: 0.888433

(Iteration 7301 / 9800) loss: 0.728136

(Epoch 15 / 20) train acc: 0.695000; val_acc: 0.530000

(Iteration 7401 / 9800) loss: 0.857501

(Iteration 7501 / 9800) loss: 0.867369

(Iteration 7601 / 9800) loss: 0.814501

(Iteration 7701 / 9800) loss: 0.763123

(Iteration 7801 / 9800) loss: 0.835519

(Epoch 16 / 20) train acc: 0.711000; val_acc: 0.528000

(Iteration 7901 / 9800) loss: 0.861891

(Iteration 8001 / 9800) loss: 0.667957

(Iteration 8101 / 9800) loss: 0.678417

(Iteration 8201 / 9800) loss: 0.776296

(Iteration 8301 / 9800) loss: 0.846255

(Epoch 17 / 20) train acc: 0.718000; val_acc: 0.532000

(Iteration 8401 / 9800) loss: 0.821841

(Iteration 8501 / 9800) loss: 0.737560

(Iteration 8601 / 9800) loss: 0.734345

(Iteration 8701 / 9800) loss: 0.789014

(Iteration 8801 / 9800) loss: 0.829744

(Epoch 18 / 20) train acc: 0.745000; val_acc: 0.530000

(Iteration 8901 / 9800) loss: 0.688820

(Iteration 9001 / 9800) loss: 0.726195

(Iteration 9101 / 9800) loss: 0.922960

(Iteration 9201 / 9800) loss: 0.791910

(Iteration 9301 / 9800) loss: 0.891499

(Epoch 19 / 20) train acc: 0.728000; val_acc: 0.522000

(Iteration 9401 / 9800) loss: 0.731820

(Iteration 9501 / 9800) loss: 0.721811

(Iteration 9601 / 9800) loss: 0.600602

(Iteration 9701 / 9800) loss: 0.689157

(Epoch 20 / 20) train acc: 0.739000; val_acc: 0.521000

Test you model

Run your best model on the validation and test sets. You should achieve above 50% accuracy on the validation set.

y_test_pred = np.argmax(best_model.loss(data['X_test']), axis=1)

y_val_pred = np.argmax(best_model.loss(data['X_val']), axis=1)

print 'Validation set accuracy: ', (y_val_pred == data['y_val']).mean()

print 'Test set accuracy: ', (y_test_pred == data['y_test']).mean()Validation set accuracy: 0.554

Test set accuracy: 0.545

如若内容造成侵权/违法违规/事实不符,请联系编程学习网邮箱:809451989@qq.com进行投诉反馈,一经查实,立即删除!

相关文章

- 从29到31:感恩、敬畏、谦卑、珍惜、坚持

工作多年,五年前第一接触TEE和TrustZone时,脑海中对这两个东西是一篇空白,仿佛眼前看见的是一篇蛮荒之地,加之TEE属于闭源部分,属于任何一家芯片公司的机密部分,外界很难接触到其真实源代码,而ARM官方给出的也只是基本的框架,对于具体实现,网络上能找到的资料也是寥寥…...

2024/4/18 14:11:33 - 数据库 Redis window上安装和使用

1、到https://download.csdn.net/user/qq_36511401/uploads去下载我的资源,Redis-x64-3.2.100,我这个安装包是64位。2、下载完之后直接解压到指定目录就可以了,我的是免安装的,目录如下所示。3、其中文件redis-cli.exe是本地客户端,redis-server.exe是本地服务端。我们平时…...

2024/4/27 22:47:09 - xp 终极优化(呕心沥血版)

声明:以上资料均是从从互联网上搜集整理而来,本人未曾测试,所以在改动时要小心,做好备份,有备无患么,呵呵。一、系统优化设置。 1、删除Windows强加的附件: 1) 用记事本NOTEPAD修改/winnt/inf/sysoc.inf,用查找/替换功能,在查找框中输入,hide(一个英文逗号紧…...

2024/4/27 23:43:38 - [Android] 为Android安装BusyBox —— 完整的bash shell

原文地址为:[Android] 为Android安装BusyBox —— 完整的bash shell大家是否有过这样的经历,在命令行里输入adb shell,然后使用命令操作你的手机或模拟器,但是那些命令都是常见Linux命令的阉割缩水版,用起来很不爽。是否想过在Android上使用较完整的shell呢?用BusyBox吧。…...

2024/4/27 21:26:20 - 汇编语言:键盘录入8个16进制数,求出其中最大值并以16进制输出

要求:1. 掌握loop指令及循环程序设计方法。2. 掌握输入/输出代码编写。3. 学习移位指令的应用。代码:assume cs:codesg codesg segment ;键盘输入8个16进制数(每个数二进制8位,即16进制2位),求出其中最大值并以16进制输出mov cx,8hmov bh,0 ;bh是最大值s: mov …...

2024/4/14 22:55:08 - cs231n 卷积神经网络与计算机视觉 5 神经网络基本结构 激活函数总结

1 引入神经网络中的神经元的灵感来源于人脑,人体中大约有860亿个神经元,大约有 10^14 - 10^15 突触(synapses). 每个神经元由树突dendrites接收信号 轴突axon发射信号. 轴突又连接到其他神经单元 的树突.突触强度synaptic strengths (权重w) 可以经过学习控制输入信号的输出…...

2024/4/14 22:55:07 - Java中byte与16进制字符串的互相转换

Java中byte用二进制表示占用8位,而我们知道16进制的每个字符需要用4位二进制位来表示(23 + 22 + 21 + 20 = 15),所以我们就可以把每个byte转换成两个相应的16进制字符,即把byte的高4位和低4位分别转换成相应的16进制字符H和L,并组合起来得到byte转换到16进制字符串的结果…...

2024/4/16 14:59:13 - CS231n第五课:神经网络2学习记录

结合视频5和笔记:https://zhuanlan.zhihu.com/p/21560667?refer=intelligentunit数据预处理数据预处理的手段一般有: 去均值(mean subtraction) 规范化/归一化(normalization) 主成分分析(PCA)和白化(whitening)PCA和白化(Whitening)是另一种预处理形式。在…...

2024/4/14 21:52:32 - 最新系统[防黑屏版]BT及双网盘下载(ZZ)

雨林木风 Ghost XP SP3 装机版 YN9.8 雨林木风 Ghost 封装克隆系统,具有安全、快速、稳定等特点。本系统可以一键无人值守安装、自动识别硬件并安装驱动程序,大大缩短了装机时间。本系统采用雨林木风SysPacker_3.0和Ghost11.0.2 封装,恢复速度更快,效率更高!系统集成了多…...

2024/4/16 15:38:25 - Windows XP 系统优化-百度转载

Windows XP是Microsoft推出的新一代Windows操作系统,虽然Windows XP称得上是至今功能最强大的操作系统,但与以往的Windows操作系统一样,新安装的Windows XP系统并不是处于最佳的状态,存在着一些用户不必使用和不曾使用的程序。大家可以再利用一些技巧来修改原本设定值来优化…...

2024/4/14 21:52:30 - 感恩的心,永远不朽

??我来自偶然,像一颗尘土,有谁看出我的懦弱。我来自何方,情回何处,谁在下一刻召唤我。天地虽宽,这条路难走,我看遍这人间坎坷辛劳, 抗癌新药 。我还有多少爱,我还有多少泪,要苍天知道我不认输。感恩的心,感谢有你,伴我一生,让我有勇气做自己。感恩的心,感谢命运,…...

2024/4/14 21:52:29 - android 安装busybox

android 启动后,会发现很多命令用不了.这个比较郁闷.都怪安装的toolbox功能太少.我们可以手动安装busybox到系统.可以下载busybox源代码自己编译.也可以使用网上别人编译好的二进制的文件.安装上就好了.我下了个别人编译好的.先通过 adb push d:/busybox /data/busy…...

2024/4/14 21:52:28 - 陈情表——中国人的感恩心

今天是西洋人所谓的“感恩节”,收到很多短信,写的很精彩,着实令人感动啊! 首先先谢谢我亲爱的朋友们了! 最近迷上看古文,真的发现古文写的真是言简意赅,回头看我们现代人写的一些文章,真是啰嗦至极。 至于 感恩节 我搞不懂为什么西方人能把这个传播到中国,使得新辈的…...

2024/4/14 21:52:27 - WINDOWSXP全面优化

一、系统属性中的项目∶ 鼠标右健单击桌面上的“我的电脑”,选择“属性”,打开“系统属性”对话框 1.关闭系统还原 找到系统还原选项, 如果你不是老噼里啪啦安装一些软件(难道你比我还厉害),你也可以去掉,这样可以节省好多空间。将“在所有盘中禁用系统还原”前面的囗…...

2024/4/14 21:52:26 - 【原创】busybox使用

首先下载busybox,去[url]http://www.busybox.net/downloads/binaries[/url]下载。然后依次敲入下面命令adb remountchmod 777 ./busyboxadb push busybox /system/xbinadb shell进入手机cd system/xbin./busybox --install .然后就可以用了。...

2024/4/20 13:37:13 - labelImg配置指南(python3版)

查看本文前请确认已安装Python3 若安装的是Python2,请移步另一篇博客 https://blog.csdn.net/qq_34108714/article/details/89315150 若之前电脑从未配置过labelImg,可直接下载使用免安装配置版(无需python) 网盘链接:https://pan.baidu.com/s/180uqsL-nrvQo_-9vnBl3sQ 提…...

2024/4/14 22:55:05 - WebForm.aspx 页面通过 AJAX 访问WebForm.aspx.cs类中的方法,获取数据

WebForm.aspx 页面通过 AJAX 访问WebForm.aspx.cs类中的方法,获取数据 WebForm1.aspx 页面 (原生AJAX请求,写法一)<%@ Page Language="C#" AutoEventWireup="true" CodeBehind="WebForm1.aspx.cs" Inherits="IsPostBack.WebForm1"…...

2024/4/21 18:16:21 - ubuntu安装时出现BusyBox问题解决

光盘(官方寄来的光盘)安装ubuntu,出现提示: BusyBox V1.1.3 (Debian 1:1.1.3-5ubuntu7) Built-in shell (ash)Enter help for a list of built-in Commands. (initramfs) ---------------------- 无法安装下去了。这…...

2024/4/24 20:25:07 - 用juery的ajax方法调用aspx.cs页面中的webmethod方法

首先在 aspx.cs文件里建一个公开的静态方法,然后加上WebMethod属性。 如: [WebMethod] public static string GetUserName() {//... } <pre class="csharp" name="code"> 如果要在这个方法里操作session,那还得将WebMethod的EnableSession 属…...

2024/4/20 15:39:05 - c 盘空间又满了?微信清理神器帮你释放空间

苏生不惑第141篇原创文章,将本公众号设为星标,第一时间看最新文章。除了APP,平常用的最多还是微信桌面版 https://pc.weixin.qq.com/微信默认安装在c盘,微信群里发的图片,视频,文件都会自动保存在安装目录下,时间一长占用空间会越来越大。如果你的c盘空间不够大,就会遇…...

2024/4/14 22:55:02

最新文章

- 解决Milvus官网提供的单机版docker容器无法启动,以及其它容器进程与Milvus容器通信实现方案【Milvus】【pymilvus】【Docker】

文章目录 问题预备知识方案获取pymilvus获取milvus 实例多容器通信 问题 我的需求是做混合检索单机版可以满足,要走Docker容器部署,还需要和另一个容器中的程序做通信。官方文档提供的Milvus安装启动Milvus方案,见文档:传送门 我…...

2024/4/28 8:17:20 - 梯度消失和梯度爆炸的一些处理方法

在这里是记录一下梯度消失或梯度爆炸的一些处理技巧。全当学习总结了如有错误还请留言,在此感激不尽。 权重和梯度的更新公式如下: w w − η ⋅ ∇ w w w - \eta \cdot \nabla w ww−η⋅∇w 个人通俗的理解梯度消失就是网络模型在反向求导的时候出…...

2024/3/20 10:50:27 - 磁盘管理与文件管理

文章目录 一、磁盘结构二、MBR与磁盘分区分区的优势与缺点分区的方式文件系统分区工具挂载与解挂载 一、磁盘结构 1.硬盘结构 硬盘分类: 1.机械硬盘:靠磁头转动找数据 慢 便宜 2.固态硬盘:靠芯片去找数据 快 贵 硬盘的数据结构:…...

2024/4/23 6:16:19 - 3d representation的一些基本概念

顶点(Vertex):三维空间中的一个点,可以有多个属性,如位置坐标、颜色、纹理坐标和法线向量。它是构建三维几何形状的基本单元。 边(Edge):连接两个顶点形成的直线段,它定…...

2024/4/27 1:08:47 - 【外汇早评】美通胀数据走低,美元调整

原标题:【外汇早评】美通胀数据走低,美元调整昨日美国方面公布了新一期的核心PCE物价指数数据,同比增长1.6%,低于前值和预期值的1.7%,距离美联储的通胀目标2%继续走低,通胀压力较低,且此前美国一季度GDP初值中的消费部分下滑明显,因此市场对美联储后续更可能降息的政策…...

2024/4/26 18:09:39 - 【原油贵金属周评】原油多头拥挤,价格调整

原标题:【原油贵金属周评】原油多头拥挤,价格调整本周国际劳动节,我们喜迎四天假期,但是整个金融市场确实流动性充沛,大事频发,各个商品波动剧烈。美国方面,在本周四凌晨公布5月份的利率决议和新闻发布会,维持联邦基金利率在2.25%-2.50%不变,符合市场预期。同时美联储…...

2024/4/28 3:28:32 - 【外汇周评】靓丽非农不及疲软通胀影响

原标题:【外汇周评】靓丽非农不及疲软通胀影响在刚结束的周五,美国方面公布了新一期的非农就业数据,大幅好于前值和预期,新增就业重新回到20万以上。具体数据: 美国4月非农就业人口变动 26.3万人,预期 19万人,前值 19.6万人。 美国4月失业率 3.6%,预期 3.8%,前值 3…...

2024/4/26 23:05:52 - 【原油贵金属早评】库存继续增加,油价收跌

原标题:【原油贵金属早评】库存继续增加,油价收跌周三清晨公布美国当周API原油库存数据,上周原油库存增加281万桶至4.692亿桶,增幅超过预期的74.4万桶。且有消息人士称,沙特阿美据悉将于6月向亚洲炼油厂额外出售更多原油,印度炼油商预计将每日获得至多20万桶的额外原油供…...

2024/4/27 4:00:35 - 【外汇早评】日本央行会议纪要不改日元强势

原标题:【外汇早评】日本央行会议纪要不改日元强势近两日日元大幅走强与近期市场风险情绪上升,避险资金回流日元有关,也与前一段时间的美日贸易谈判给日本缓冲期,日本方面对汇率问题也避免继续贬值有关。虽然今日早间日本央行公布的利率会议纪要仍然是支持宽松政策,但这符…...

2024/4/27 17:58:04 - 【原油贵金属早评】欧佩克稳定市场,填补伊朗问题的影响

原标题:【原油贵金属早评】欧佩克稳定市场,填补伊朗问题的影响近日伊朗局势升温,导致市场担忧影响原油供给,油价试图反弹。此时OPEC表态稳定市场。据消息人士透露,沙特6月石油出口料将低于700万桶/日,沙特已经收到石油消费国提出的6月份扩大出口的“适度要求”,沙特将满…...

2024/4/27 14:22:49 - 【外汇早评】美欲与伊朗重谈协议

原标题:【外汇早评】美欲与伊朗重谈协议美国对伊朗的制裁遭到伊朗的抗议,昨日伊朗方面提出将部分退出伊核协议。而此行为又遭到欧洲方面对伊朗的谴责和警告,伊朗外长昨日回应称,欧洲国家履行它们的义务,伊核协议就能保证存续。据传闻伊朗的导弹已经对准了以色列和美国的航…...

2024/4/28 1:28:33 - 【原油贵金属早评】波动率飙升,市场情绪动荡

原标题:【原油贵金属早评】波动率飙升,市场情绪动荡因中美贸易谈判不安情绪影响,金融市场各资产品种出现明显的波动。随着美国与中方开启第十一轮谈判之际,美国按照既定计划向中国2000亿商品征收25%的关税,市场情绪有所平复,已经开始接受这一事实。虽然波动率-恐慌指数VI…...

2024/4/27 9:01:45 - 【原油贵金属周评】伊朗局势升温,黄金多头跃跃欲试

原标题:【原油贵金属周评】伊朗局势升温,黄金多头跃跃欲试美国和伊朗的局势继续升温,市场风险情绪上升,避险黄金有向上突破阻力的迹象。原油方面稍显平稳,近期美国和OPEC加大供给及市场需求回落的影响,伊朗局势并未推升油价走强。近期中美贸易谈判摩擦再度升级,美国对中…...

2024/4/27 17:59:30 - 【原油贵金属早评】市场情绪继续恶化,黄金上破

原标题:【原油贵金属早评】市场情绪继续恶化,黄金上破周初中国针对于美国加征关税的进行的反制措施引发市场情绪的大幅波动,人民币汇率出现大幅的贬值动能,金融市场受到非常明显的冲击。尤其是波动率起来之后,对于股市的表现尤其不安。隔夜美国股市出现明显的下行走势,这…...

2024/4/25 18:39:16 - 【外汇早评】美伊僵持,风险情绪继续升温

原标题:【外汇早评】美伊僵持,风险情绪继续升温昨日沙特两艘油轮再次发生爆炸事件,导致波斯湾局势进一步恶化,市场担忧美伊可能会出现摩擦生火,避险品种获得支撑,黄金和日元大幅走强。美指受中美贸易问题影响而在低位震荡。继5月12日,四艘商船在阿联酋领海附近的阿曼湾、…...

2024/4/28 1:34:08 - 【原油贵金属早评】贸易冲突导致需求低迷,油价弱势

原标题:【原油贵金属早评】贸易冲突导致需求低迷,油价弱势近日虽然伊朗局势升温,中东地区几起油船被袭击事件影响,但油价并未走高,而是出于调整结构中。由于市场预期局势失控的可能性较低,而中美贸易问题导致的全球经济衰退风险更大,需求会持续低迷,因此油价调整压力较…...

2024/4/26 19:03:37 - 氧生福地 玩美北湖(上)——为时光守候两千年

原标题:氧生福地 玩美北湖(上)——为时光守候两千年一次说走就走的旅行,只有一张高铁票的距离~ 所以,湖南郴州,我来了~ 从广州南站出发,一个半小时就到达郴州西站了。在动车上,同时改票的南风兄和我居然被分到了一个车厢,所以一路非常愉快地聊了过来。 挺好,最起…...

2024/4/28 1:22:35 - 氧生福地 玩美北湖(中)——永春梯田里的美与鲜

原标题:氧生福地 玩美北湖(中)——永春梯田里的美与鲜一觉醒来,因为大家太爱“美”照,在柳毅山庄去寻找龙女而错过了早餐时间。近十点,向导坏坏还是带着饥肠辘辘的我们去吃郴州最富有盛名的“鱼头粉”。说这是“十二分推荐”,到郴州必吃的美食之一。 哇塞!那个味美香甜…...

2024/4/25 18:39:14 - 氧生福地 玩美北湖(下)——奔跑吧骚年!

原标题:氧生福地 玩美北湖(下)——奔跑吧骚年!让我们红尘做伴 活得潇潇洒洒 策马奔腾共享人世繁华 对酒当歌唱出心中喜悦 轰轰烈烈把握青春年华 让我们红尘做伴 活得潇潇洒洒 策马奔腾共享人世繁华 对酒当歌唱出心中喜悦 轰轰烈烈把握青春年华 啊……啊……啊 两…...

2024/4/26 23:04:58 - 扒开伪装医用面膜,翻六倍价格宰客,小姐姐注意了!

原标题:扒开伪装医用面膜,翻六倍价格宰客,小姐姐注意了!扒开伪装医用面膜,翻六倍价格宰客!当行业里的某一品项火爆了,就会有很多商家蹭热度,装逼忽悠,最近火爆朋友圈的医用面膜,被沾上了污点,到底怎么回事呢? “比普通面膜安全、效果好!痘痘、痘印、敏感肌都能用…...

2024/4/27 23:24:42 - 「发现」铁皮石斛仙草之神奇功效用于医用面膜

原标题:「发现」铁皮石斛仙草之神奇功效用于医用面膜丽彦妆铁皮石斛医用面膜|石斛多糖无菌修护补水贴19大优势: 1、铁皮石斛:自唐宋以来,一直被列为皇室贡品,铁皮石斛生于海拔1600米的悬崖峭壁之上,繁殖力差,产量极低,所以古代仅供皇室、贵族享用 2、铁皮石斛自古民间…...

2024/4/28 5:48:52 - 丽彦妆\医用面膜\冷敷贴轻奢医学护肤引导者

原标题:丽彦妆\医用面膜\冷敷贴轻奢医学护肤引导者【公司简介】 广州华彬企业隶属香港华彬集团有限公司,专注美业21年,其旗下品牌: 「圣茵美」私密荷尔蒙抗衰,产后修复 「圣仪轩」私密荷尔蒙抗衰,产后修复 「花茵莳」私密荷尔蒙抗衰,产后修复 「丽彦妆」专注医学护…...

2024/4/26 19:46:12 - 广州械字号面膜生产厂家OEM/ODM4项须知!

原标题:广州械字号面膜生产厂家OEM/ODM4项须知!广州械字号面膜生产厂家OEM/ODM流程及注意事项解读: 械字号医用面膜,其实在我国并没有严格的定义,通常我们说的医美面膜指的应该是一种「医用敷料」,也就是说,医用面膜其实算作「医疗器械」的一种,又称「医用冷敷贴」。 …...

2024/4/27 11:43:08 - 械字号医用眼膜缓解用眼过度到底有无作用?

原标题:械字号医用眼膜缓解用眼过度到底有无作用?医用眼膜/械字号眼膜/医用冷敷眼贴 凝胶层为亲水高分子材料,含70%以上的水分。体表皮肤温度传导到本产品的凝胶层,热量被凝胶内水分子吸收,通过水分的蒸发带走大量的热量,可迅速地降低体表皮肤局部温度,减轻局部皮肤的灼…...

2024/4/27 8:32:30 - 配置失败还原请勿关闭计算机,电脑开机屏幕上面显示,配置失败还原更改 请勿关闭计算机 开不了机 这个问题怎么办...

解析如下:1、长按电脑电源键直至关机,然后再按一次电源健重启电脑,按F8健进入安全模式2、安全模式下进入Windows系统桌面后,按住“winR”打开运行窗口,输入“services.msc”打开服务设置3、在服务界面,选中…...

2022/11/19 21:17:18 - 错误使用 reshape要执行 RESHAPE,请勿更改元素数目。

%读入6幅图像(每一幅图像的大小是564*564) f1 imread(WashingtonDC_Band1_564.tif); subplot(3,2,1),imshow(f1); f2 imread(WashingtonDC_Band2_564.tif); subplot(3,2,2),imshow(f2); f3 imread(WashingtonDC_Band3_564.tif); subplot(3,2,3),imsho…...

2022/11/19 21:17:16 - 配置 已完成 请勿关闭计算机,win7系统关机提示“配置Windows Update已完成30%请勿关闭计算机...

win7系统关机提示“配置Windows Update已完成30%请勿关闭计算机”问题的解决方法在win7系统关机时如果有升级系统的或者其他需要会直接进入一个 等待界面,在等待界面中我们需要等待操作结束才能关机,虽然这比较麻烦,但是对系统进行配置和升级…...

2022/11/19 21:17:15 - 台式电脑显示配置100%请勿关闭计算机,“准备配置windows 请勿关闭计算机”的解决方法...

有不少用户在重装Win7系统或更新系统后会遇到“准备配置windows,请勿关闭计算机”的提示,要过很久才能进入系统,有的用户甚至几个小时也无法进入,下面就教大家这个问题的解决方法。第一种方法:我们首先在左下角的“开始…...

2022/11/19 21:17:14 - win7 正在配置 请勿关闭计算机,怎么办Win7开机显示正在配置Windows Update请勿关机...

置信有很多用户都跟小编一样遇到过这样的问题,电脑时发现开机屏幕显现“正在配置Windows Update,请勿关机”(如下图所示),而且还需求等大约5分钟才干进入系统。这是怎样回事呢?一切都是正常操作的,为什么开时机呈现“正…...

2022/11/19 21:17:13 - 准备配置windows 请勿关闭计算机 蓝屏,Win7开机总是出现提示“配置Windows请勿关机”...

Win7系统开机启动时总是出现“配置Windows请勿关机”的提示,没过几秒后电脑自动重启,每次开机都这样无法进入系统,此时碰到这种现象的用户就可以使用以下5种方法解决问题。方法一:开机按下F8,在出现的Windows高级启动选…...

2022/11/19 21:17:12 - 准备windows请勿关闭计算机要多久,windows10系统提示正在准备windows请勿关闭计算机怎么办...

有不少windows10系统用户反映说碰到这样一个情况,就是电脑提示正在准备windows请勿关闭计算机,碰到这样的问题该怎么解决呢,现在小编就给大家分享一下windows10系统提示正在准备windows请勿关闭计算机的具体第一种方法:1、2、依次…...

2022/11/19 21:17:11 - 配置 已完成 请勿关闭计算机,win7系统关机提示“配置Windows Update已完成30%请勿关闭计算机”的解决方法...

今天和大家分享一下win7系统重装了Win7旗舰版系统后,每次关机的时候桌面上都会显示一个“配置Windows Update的界面,提示请勿关闭计算机”,每次停留好几分钟才能正常关机,导致什么情况引起的呢?出现配置Windows Update…...

2022/11/19 21:17:10 - 电脑桌面一直是清理请关闭计算机,windows7一直卡在清理 请勿关闭计算机-win7清理请勿关机,win7配置更新35%不动...

只能是等着,别无他法。说是卡着如果你看硬盘灯应该在读写。如果从 Win 10 无法正常回滚,只能是考虑备份数据后重装系统了。解决来方案一:管理员运行cmd:net stop WuAuServcd %windir%ren SoftwareDistribution SDoldnet start WuA…...

2022/11/19 21:17:09 - 计算机配置更新不起,电脑提示“配置Windows Update请勿关闭计算机”怎么办?

原标题:电脑提示“配置Windows Update请勿关闭计算机”怎么办?win7系统中在开机与关闭的时候总是显示“配置windows update请勿关闭计算机”相信有不少朋友都曾遇到过一次两次还能忍但经常遇到就叫人感到心烦了遇到这种问题怎么办呢?一般的方…...

2022/11/19 21:17:08 - 计算机正在配置无法关机,关机提示 windows7 正在配置windows 请勿关闭计算机 ,然后等了一晚上也没有关掉。现在电脑无法正常关机...

关机提示 windows7 正在配置windows 请勿关闭计算机 ,然后等了一晚上也没有关掉。现在电脑无法正常关机以下文字资料是由(历史新知网www.lishixinzhi.com)小编为大家搜集整理后发布的内容,让我们赶快一起来看一下吧!关机提示 windows7 正在配…...

2022/11/19 21:17:05 - 钉钉提示请勿通过开发者调试模式_钉钉请勿通过开发者调试模式是真的吗好不好用...

钉钉请勿通过开发者调试模式是真的吗好不好用 更新时间:2020-04-20 22:24:19 浏览次数:729次 区域: 南阳 > 卧龙 列举网提醒您:为保障您的权益,请不要提前支付任何费用! 虚拟位置外设器!!轨迹模拟&虚拟位置外设神器 专业用于:钉钉,外勤365,红圈通,企业微信和…...

2022/11/19 21:17:05 - 配置失败还原请勿关闭计算机怎么办,win7系统出现“配置windows update失败 还原更改 请勿关闭计算机”,长时间没反应,无法进入系统的解决方案...

前几天班里有位学生电脑(windows 7系统)出问题了,具体表现是开机时一直停留在“配置windows update失败 还原更改 请勿关闭计算机”这个界面,长时间没反应,无法进入系统。这个问题原来帮其他同学也解决过,网上搜了不少资料&#x…...

2022/11/19 21:17:04 - 一个电脑无法关闭计算机你应该怎么办,电脑显示“清理请勿关闭计算机”怎么办?...

本文为你提供了3个有效解决电脑显示“清理请勿关闭计算机”问题的方法,并在最后教给你1种保护系统安全的好方法,一起来看看!电脑出现“清理请勿关闭计算机”在Windows 7(SP1)和Windows Server 2008 R2 SP1中,添加了1个新功能在“磁…...

2022/11/19 21:17:03 - 请勿关闭计算机还原更改要多久,电脑显示:配置windows更新失败,正在还原更改,请勿关闭计算机怎么办...

许多用户在长期不使用电脑的时候,开启电脑发现电脑显示:配置windows更新失败,正在还原更改,请勿关闭计算机。。.这要怎么办呢?下面小编就带着大家一起看看吧!如果能够正常进入系统,建议您暂时移…...

2022/11/19 21:17:02 - 还原更改请勿关闭计算机 要多久,配置windows update失败 还原更改 请勿关闭计算机,电脑开机后一直显示以...

配置windows update失败 还原更改 请勿关闭计算机,电脑开机后一直显示以以下文字资料是由(历史新知网www.lishixinzhi.com)小编为大家搜集整理后发布的内容,让我们赶快一起来看一下吧!配置windows update失败 还原更改 请勿关闭计算机&#x…...

2022/11/19 21:17:01 - 电脑配置中请勿关闭计算机怎么办,准备配置windows请勿关闭计算机一直显示怎么办【图解】...

不知道大家有没有遇到过这样的一个问题,就是我们的win7系统在关机的时候,总是喜欢显示“准备配置windows,请勿关机”这样的一个页面,没有什么大碍,但是如果一直等着的话就要两个小时甚至更久都关不了机,非常…...

2022/11/19 21:17:00 - 正在准备配置请勿关闭计算机,正在准备配置windows请勿关闭计算机时间长了解决教程...

当电脑出现正在准备配置windows请勿关闭计算机时,一般是您正对windows进行升级,但是这个要是长时间没有反应,我们不能再傻等下去了。可能是电脑出了别的问题了,来看看教程的说法。正在准备配置windows请勿关闭计算机时间长了方法一…...

2022/11/19 21:16:59 - 配置失败还原请勿关闭计算机,配置Windows Update失败,还原更改请勿关闭计算机...

我们使用电脑的过程中有时会遇到这种情况,当我们打开电脑之后,发现一直停留在一个界面:“配置Windows Update失败,还原更改请勿关闭计算机”,等了许久还是无法进入系统。如果我们遇到此类问题应该如何解决呢࿰…...

2022/11/19 21:16:58 - 如何在iPhone上关闭“请勿打扰”

Apple’s “Do Not Disturb While Driving” is a potentially lifesaving iPhone feature, but it doesn’t always turn on automatically at the appropriate time. For example, you might be a passenger in a moving car, but your iPhone may think you’re the one dri…...

2022/11/19 21:16:57